Basic Numerics¶

This section describes various different numerical considerations and modules of mermaid. Specifically,

- the finite difference module, which is at the core of the discretization of the ordinary (ODE) and partial differential equations (PDE) and operators of mermaid (see Finite differences),

- the Runge-Kutta integrators to integrate the ODEs and PDEs of the registration models (see Runge-Kutta integrators),

- the higher-order spline interpolations, which can be used instead of pytorch’s built-in bilinear and trilinear interpolation. These interpolators are relatively general, but are currently not heavily used or supported by mermaid (see Spline interpolation).

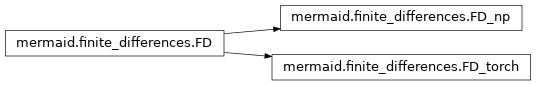

Finite differences¶

This module implements all of mermaid’s finite difference functionality.

finite_difference.py is the main package to compute finite differences in 1D, 2D, and 3D on numpy arrays (class FD_np) and pytorch tensors (class FD_torch). The package supports first and second order derivatives and Neumann and linear extrapolation boundary conditions (though the latter have not been tested extensively yet).

-

class

mermaid.finite_differences.FD(spacing, mode='linear')[source]¶ FD is the abstract class for finite differences. It includes most of the actual finite difference code, but requires the definition (in a derived class) of the methods get_dimension, create_zero_array, and get_size_of_array. In this way the numpy and pytorch versions can easily be derived. All the method expect BxXxYxZ format (i.e., they process a batch at a time)

-

dim= None¶ spatial dimension

-

bcDirichletZero= None¶ should Neumann boundary conditions be used? (otherwise linear extrapolation)

-

spacing= None¶ spacing

-

dXb(I)[source]¶ Backward difference in x direction: \(\frac{dI(i)}{dx}\approx\frac{I_i-I_{i-1}}{h_x}\)

Parameters: I – Input image Returns: Returns the first derivative in x direction using backward differences

-

dXf(I)[source]¶ Forward difference in x direction: \(\frac{dI(i)}{dx}\approx\frac{I_{i+1}-I_{i}}{h_x}\)

Parameters: I – Input image Returns: Returns the first derivative in x direction using forward differences

-

dXc(I)[source]¶ Central difference in x direction: \(\frac{dI(i)}{dx}\approx\frac{I_{i+1}-I_{i-1}}{2h_x}\)

Parameters: I – Input image Returns: Returns the first derivative in x direction using central differences

-

ddXc(I)[source]¶ Second deriative in x direction

Parameters: I – Input image Returns: Returns the second derivative in x direction

-

dYb(I)[source]¶ Same as dXb, but for the y direction

Parameters: I – Input image Returns: Returns the first derivative in y direction using backward differences

-

dYf(I)[source]¶ Same as dXf, but for the y direction

Parameters: I – Input image Returns: Returns the first derivative in y direction using forward differences

-

dYc(I)[source]¶ Same as dXc, but for the y direction

Parameters: I – Input image Returns: Returns the first derivative in y direction using central differences

-

ddYc(I)[source]¶ Same as ddXc, but for the y direction

Parameters: I – Input image Returns: Returns the second derivative in the y direction

-

dZb(I)[source]¶ Same as dXb, but for the z direction

Parameters: I – Input image Returns: Returns the first derivative in the z direction using backward differences

-

dZf(I)[source]¶ Same as dXf, but for the z direction

Parameters: I – Input image Returns: Returns the first derivative in the z direction using forward differences

-

dZc(I)[source]¶ Same as dXc, but for the z direction

Parameters: I – Input image Returns: Returns the first derivative in the z direction using central differences

-

ddZc(I)[source]¶ Same as ddXc, but for the z direction

Parameters: I – Input iamge Returns: Returns the second derivative in the z direction

-

lap(I)[source]¶ IMPORTANT: ALL THE FOLLOWING IMPLEMENTED CODE ADD 1 ON DIMENSION, WHICH REPRESENT BATCH DIMENSION. THIS IS FOR COMPUTATIONAL EFFICIENCY.

Parameters: I – Input image [batch, channel, X,Y,Z] Returns: Returns the Laplacian

-

grad_norm_sqr_c(I)[source]¶ IMPORTANT: ALL THE FOLLOWING IMPLEMENTED CODE ADD 1 ON DIMENSION, WHICH REPRESENT BATCH DIMENSION. THIS IS FOR COMPUTATIONAL EFFICIENCY.

Parameters: I – Input image [batch, channel, X,Y,Z] Returns: returns ||grad I||^2

-

grad_norm_sqr_f(I)[source]¶ IMPORTANT: ALL THE FOLLOWING IMPLEMENTED CODE ADD 1 ON DIMENSION, WHICH REPRESENT BATCH DIMENSION. THIS IS FOR COMPUTATIONAL EFFICIENCY.

Parameters: I – Input image [batch, channel, X,Y,Z] Returns: returns ||grad I||^2

-

grad_norm_sqr_b(I)[source]¶ IMPORTANT: ALL THE FOLLOWING IMPLEMENTED CODE ADD 1 ON DIMENSION, WHICH REPRESENT BATCH DIMENSION. THIS IS FOR COMPUTATIONAL EFFICIENCY.

Parameters: I – Input image [batch, channel, X,Y,Z] Returns: returns ||grad I||^2

-

getdimension(I)[source]¶ Abstract method to return the dimension of an input image I

Parameters: I – Input image Returns: Returns the dimension of the image I

-

create_zero_array(sz)[source]¶ Abstract method to create a zero array of a given size, sz. E.g., sz=[10,2,5]

Parameters: sz – Size array Returns: Returns a zero array of the specified size

-

get_size_of_array(A)[source]¶ Abstract method to return the size of an array (as a vector)

Parameters: A – Input array Returns: Returns its size (e.g., [5,10] or [3,4,6]

-

xp(I, central=False)[source]¶ IMPORTANT: ALL THE FOLLOWING IMPLEMENTED CODE ADD 1 ON DIMENSION, WHICH REPRESENT BATCH DIMENSION. THIS IS FOR COMPUTATIONAL EFFICIENCY.

Returns the values for x-index incremented by one (to the right in 1D)

Parameters: I – Input image [batch, channel, X, Y,Z] Returns: Image with values at an x-index one larger

-

xm(I, central=False)[source]¶ IMPORTANT: ALL THE FOLLOWING IMPLEMENTED CODE ADD 1 ON DIMENSION, WHICH REPRESENT BATCH DIMENSION. THIS IS FOR COMPUTATIONAL EFFICIENCY.

Returns the values for x-index decremented by one (to the left in 1D)

Parameters: I – Input image [batch, channel, X, Y, Z] Returns: Image with values at an x-index one smaller

-

yp(I, central=False)[source]¶ IMPORTANT: ALL THE FOLLOWING IMPLEMENTED CODE ADD 1 ON DIMENSION, WHICH REPRESENT BATCH DIMENSION. THIS IS FOR COMPUTATIONAL EFFICIENCY.

Same as xp, but for the y direction

Parameters: I – Input image Returns: Image with values at y-index one larger

-

ym(I, central=False)[source]¶ Same as xm, but for the y direction

IMPORTANT: ALL THE FOLLOWING IMPLEMENTED CODE ADD 1 ON DIMENSION, WHICH REPRESENT BATCH DIMENSION. THIS IS FOR COMPUTATIONAL EFFICIENCY.

Returns the values for x-index decremented by one (to the left in 1D)

Parameters: I – Input image [batch, channel, X, Y, Z] Returns: Image with values at y-index one smaller

-

zp(I, central=False)[source]¶ Same as xp, but for the z direction

IMPORTANT: ALL THE FOLLOWING IMPLEMENTED CODE ADD 1 ON DIMENSION, WHICH REPRESENT BATCH DIMENSION. THIS IS FOR COMPUTATIONAL EFFICIENCY.

Returns the values for x-index decremented by one (to the left in 1D)

Parameters: I – Input image [batch, channel, X, Y, Z] Returns: Image with values at z-index one larger

-

zm(I, central=False)[source]¶ Same as xm, but for the z direction

IMPORTANT: ALL THE FOLLOWING IMPLEMENTED CODE ADD 1 ON DIMENSION, WHICH REPRESENT BATCH DIMENSION. THIS IS FOR COMPUTATIONAL EFFICIENCY.

Returns the values for x-index decremented by one (to the left in 1D)

Parameters: I – Input image [batch, channel, X, Y, Z] Returns: Image with values at z-index one smaller

-

-

class

mermaid.finite_differences.FD_np(dim, mode='linear')[source]¶ Defnitions of the abstract methods for numpy

-

getdimension(I)[source]¶ Returns the dimension of an image :param I: input image :return: dimension of the input image

-

-

class

mermaid.finite_differences.FD_torch(dim, mode='linear')[source]¶ Defnitions of the abstract methods for torch

-

getdimension(I)[source]¶ Returns the dimension of an image :param I: input image :return: dimension of the input image

-

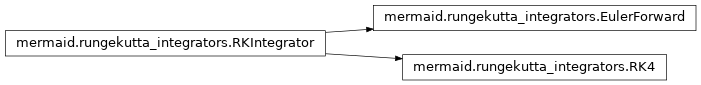

Runge-Kutta integrators¶

Simple (explicit) Runge-Kutta integrators to forward integrate dynamic forward models

-

class

mermaid.rungekutta_integrators.RKIntegrator(f, u, pars, params)[source]¶ Abstract base class for Runge-Kutta integration: x’ = f(x(t),u(t),t)

-

nrOfTimeSteps_perUnitTimeInterval= None¶ number of time steps for the integration

-

f= None¶ Function to be integrated

-

pars= None¶ parameters for the integrator

-

u= None¶ input for integration

-

set_number_of_time_steps(nr)[source]¶ Sets the number of time-steps per unit time-interval the integration

Parameters: nr – number of timesteps per unit time-interval

-

get_number_of_time_steps()[source]¶ Returns the number of time steps per unit time-interval that are used for the integration

Returns: number of time steps per unit time-interval

-

solve(x, fromT, toT, variables_from_optimizer=None)[source]¶ Solves the differential equation.

Parameters: - x – initial condition for state of the equation

- fromT – time to start integration from

- toT – time to end integration

- variables_from_optimizer – allows passing variables from the optimizer (for example an iteration count)

Returns: Returns state, x, at time toT

-

solve_one_step(x, t, dt, variables_from_optimizer=None)[source]¶ Abstract method to be implemented by the different Runge-Kutta methods to advance one step. Both x and the output of f are expected to be lists

Parameters: - x – initial state

- t – initial time

- dt – time increment

- variables_from_optimizer – allows passing variables from the optimizer (for example an iteration count)

Returns: returns the state at t+dt

-

-

class

mermaid.rungekutta_integrators.EulerForward(f, u, pars, params)[source]¶ Euler-forward integration

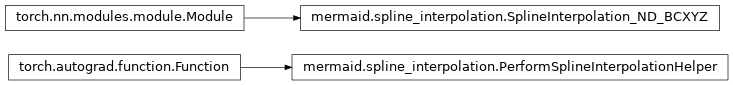

Spline interpolation¶

-

class

mermaid.spline_interpolation.SplineInterpolation_ND_BCXYZ(spacing, spline_order)[source]¶ Spline transform code for nD (1D, 2D, and 3D) spatial spline transforms. Uses the BCXYZ image format. Spline orders 3 to 9 are supported. Only order 3 is currently well tested.

The code is a generalization (and pyTorch-ification) of the 2D spline code by Philippe Thevenaz: http://bigwww.epfl.ch/thevenaz/interpolation/

The main difference is that the code supports 1D, 2D, and 3D images in pyTorch format (i.e., the first two dimensions are the batch size and the number of channels. Furthermore, great care has been taken to avoid loops over pixels to obtain a reasonably high performance interpolation.

-

spacing= None¶ needs to be the spacing of the map at which locations the interpolation should be performed (NOT the spacing of the image from which the coefficient are computed)

Type: spatial spacing; IMPORTANT

-

spline_order= None¶ spline order

-

-

class

mermaid.spline_interpolation.PerformSplineInterpolationHelper(index)[source]¶ Performs spline interpolation, given weights, indices, and coefficients. This is simply a convenience class which avoids computing the gradient of the actual interpolation via automatic differentiation (as this would be very memory intensive).

-

mermaid.spline_interpolation.perform_spline_interpolation_helper(c, weight, index)[source]¶ Helper function to instantiate the spline interpolation helper (for a more efficent gradient computation w/o automatic differentiation)

Parameters: - c – interpolation coefficients

- weight – interpolation weights

- index – interpolation indices

Returns: interpolated signal