Optimizers

mermaid currently uses primarily SGD with momentum. However, it also supports optimization with lBFGS and has limited support for Adam. The optimization code is part of the multiscale optimizer (described in Multiscale optimizer). The customized lBFGS optimizer (which supports line search) is described in Custom optimizers.

Multiscale optimizer

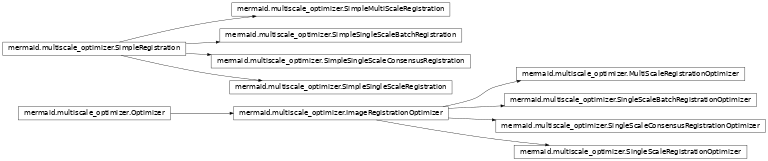

This module provides easy-to-use single-scale and multi-scale optimization.

class mermaid.multiscale_optimizer.SimpleRegistration(ISource, ITarget, spacing, sz, params, compute_inverse_map=False, default_learning_rate=None)

Abstract base class for simple registrations.

get_history()

Returns the optimization history as a dictionary. Keeps track of energies, iteration counts, and additional custom measures.

Returns: history dictionary

write_parameters_to_settings()

Writes the currently computed parameters (if they were optimized) back to an output parameter file.

get_optimizer()

Returns the optimizer being used; it can be used to customize the simple registration if desired.

Returns: optimizer

get_energy()

Returns the current energy.

Returns: a tuple (energy, similarity energy, regularization energy)

set_initial_map(map0, initial_inverse_map=None)

Sets the initial map for the registration. By default (if nothing is set) this is the identity map; setting it to a different initial condition makes it possible to concatenate transformations.

Parameters: - map0 – initial map

- initial_inverse_map – initial inverse map (optional)

Returns: n/a
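Concatenating transformations boils down to composing maps. The sketch below illustrates map composition for 1D maps sampled on a regular grid, evaluating one map at the positions given by another via linear interpolation. It is a generic illustration, not mermaid's implementation: the helper names, the 1D setting, and the clamping at the grid boundary are assumptions, and the order in which mermaid composes map0 with the newly computed map is not specified here.

```python
from typing import List


def interp_1d(values: List[float], spacing: float, x: float) -> float:
    """Linearly interpolate samples taken at 0, spacing, 2 * spacing, ...

    Positions outside the grid are clamped to the boundary samples
    (an assumption of this sketch).
    """
    n = len(values)
    t = x / spacing  # position in grid-index units
    if t <= 0:
        return values[0]
    if t >= n - 1:
        return values[-1]
    i = int(t)  # left neighbor
    w = t - i   # fractional offset within the cell
    return (1 - w) * values[i] + w * values[i + 1]


def compose_maps(phi_outer: List[float], phi_inner: List[float], spacing: float) -> List[float]:
    """Return phi_outer(phi_inner(x)) sampled on the same grid as phi_inner.

    Each map stores, for every grid point, the coordinate it maps to.
    """
    return [interp_1d(phi_outer, spacing, y) for y in phi_inner]


# A map that halves coordinates, followed by one that doubles them.
grid = [0.0, 0.25, 0.5, 0.75, 1.0]
halve = [x / 2 for x in grid]
double = [2 * x for x in grid]
print(compose_maps(double, halve, spacing=0.25))  # -> [0.0, 0.25, 0.5, 0.75, 1.0]
```

Composing with the identity map leaves a map unchanged, which is why the identity is the natural default initial condition.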

get_initial_map()

Returns the initial map; this will typically be the identity map, but it can be set to a different initial condition using set_initial_map.

Returns: the initial map (if applicable)

get_initial_inverse_map()

Returns the initial inverse map; this will typically be the identity map, but it can be set to a different initial condition using set_initial_map.

Returns: the initial inverse map (if applicable)

get_inverse_map()

Returns the inverse deformation map, if available.

Returns: inverse deformation map

class mermaid.multiscale_optimizer.SimpleSingleScaleRegistration(ISource, ITarget, spacing, sz, params, compute_inverse_map=False, default_learning_rate=None)

Simple single-scale registration.

class mermaid.multiscale_optimizer.SimpleSingleScaleConsensusRegistration(ISource, ITarget, spacing, sz, params, compute_inverse_map=False, default_learning_rate=None)

Single-scale registration making use of consensus optimization (to allow for multiple independent registrations that can share parameters).

class mermaid.multiscale_optimizer.SimpleSingleScaleBatchRegistration(ISource, ITarget, spacing, sz, params, compute_inverse_map=False, default_learning_rate=None)

Single-scale registration making use of batch optimization (to allow optimizing over many or large images).

class mermaid.multiscale_optimizer.SimpleMultiScaleRegistration(ISource, ITarget, spacing, sz, params, compute_inverse_map=False, default_learning_rate=None)

Simple multi-scale registration.

class mermaid.multiscale_optimizer.Optimizer(sz, spacing, useMap, mapLowResFactor, params, compute_inverse_map=False, default_learning_rate=None)

Abstract optimizer base class.

sz = None

image size

spacing = None

image spacing

useMap = None

specifies whether a map is used

mapLowResFactor = None

if < 1, the evolution is computed at a lower resolution, but the image is compared at the original resolution; values >= 1 are ignored

compute_inverse_map = None

if set to True, the inverse map is computed on the fly for map-based models

default_learning_rate = None

if set, this will be the learning rate that the optimizers use (otherwise, the one specified in the JSON configuration via params)

params = None

general parameters

last_successful_step_size_taken = None

records the last successful step size an optimizer took (possible use: propagating the step size between multiscale levels)

lowResSize = None

low-res image size

lowResSpacing = None

low-res image spacing
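For mapLowResFactor < 1 the optimizer works with lowResSize and lowResSpacing. A minimal sketch of how such a grid could be derived, assuming sizes are rounded down (but kept at least 2) and the physical extent (n - 1) * h of each dimension is preserved; the function name is hypothetical and the sketch only handles spatial dimensions, so mermaid's own helpers may differ.

```python
from typing import List, Sequence, Tuple


def low_res_size_and_spacing(
    size: Sequence[int], spacing: Sequence[float], factor: float
) -> Tuple[List[int], List[float]]:
    """Derive a low-resolution spatial size and spacing from mapLowResFactor.

    Assumptions of this sketch: sizes are rounded down (but kept >= 2), and
    the physical extent (n - 1) * h of every dimension is preserved.
    """
    if factor >= 1:
        # Factors >= 1 are ignored, i.e., the original grid is kept.
        return list(size), list(spacing)
    low_size = [max(2, int(n * factor)) for n in size]
    low_spacing = [h * (n - 1) / (m - 1) for n, h, m in zip(size, spacing, low_size)]
    return low_size, low_spacing


print(low_res_size_and_spacing([128, 64], [1 / 127, 1 / 63], 0.5))
```

With a unit-length domain, halving the resolution of a 128 x 64 grid gives a 64 x 32 grid with spacings 1/63 and 1/31.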

rel_ftol = None

relative termination tolerance for the optimizer

spline_order = None

order of the spline for interpolations

optimizer_has_been_initialized = None

needs to be set before the actual optimization commences; makes it possible to keep track of whether all parameters have been set and, for example, to delay external parameter settings

write_parameters_to_settings()

Writes the current state of the optimized parameters back to the JSON settings file (for example, to keep track of optimized weights).

get_history()

Returns the optimization history as a dictionary. Keeps track of energies, iteration counts, and additional custom measures.

Returns: history dictionary

set_last_successful_step_size_taken(lr)

Lets the optimizer know which step size has been successful previously. Useful, for example, to retain optimization “memory” across scales in a multi-scale implementation.

Parameters: lr – step size

Returns: n/a

set_rel_ftol(rel_ftol)

Sets the relative termination tolerance: \(|f(x_i)-f(x_{i-1})|/f(x_i) < \text{tol}\)

Parameters: rel_ftol – relative termination tolerance for the optimizer
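The relative termination test can be written as a small predicate. This sketch follows the formula given for set_rel_ftol; taking the absolute value of f(x_i) in the denominator and the zero guard are assumptions added to keep the test well defined for non-positive energies.

```python
def rel_ftol_reached(f_prev: float, f_curr: float, rel_ftol: float) -> bool:
    """Relative termination test |f(x_i) - f(x_{i-1})| / f(x_i) < rel_ftol.

    Dividing by abs(f(x_i)) and the zero guard are assumptions of this sketch;
    the documented formula divides by f(x_i) directly.
    """
    denom = abs(f_curr)
    if denom == 0.0:
        return f_curr == f_prev  # cannot normalize; stop only if nothing changed
    return abs(f_curr - f_prev) / denom < rel_ftol


# Find the first iteration at which the test triggers for a toy energy sequence.
energies = [100.0, 60.0, 50.0, 49.99, 49.989]
stop_at = next(
    (i for i in range(1, len(energies)) if rel_ftol_reached(energies[i - 1], energies[i], 1e-3)),
    None,
)
print(stop_at)  # -> 3
```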

set_model(modelName)

Abstract method to select, by name, the model that should be optimized.

Parameters: modelName – name (string) of the model that should be solved

get_checkpoint_dict()

Returns a dict() object containing the information for the current checkpoint.

Returns: checkpoint dictionary

load_checkpoint_dict(d, load_optimizer_state=False)

Takes the dictionary from a checkpoint and loads it as the current state of the optimizer and model.

Parameters: - d – dictionary

- load_optimizer_state – if set to True, the optimizer state will be restored

Returns: n/a
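The load_optimizer_state flag decides whether the optimizer state (for example, momentum buffers) is restored along with the model. The class below is a hypothetical stand-in that mimics the documented semantics of get_checkpoint_dict and load_checkpoint_dict; the dictionary keys "model" and "optimizer" are assumptions, not mermaid's actual checkpoint layout.

```python
import copy
from typing import Any, Dict


class CheckpointSketch:
    """Hypothetical optimizer stand-in with checkpoint support."""

    def __init__(self) -> None:
        self.model_state: Dict[str, Any] = {"m": [0.0, 0.0]}
        self.optimizer_state: Dict[str, Any] = {"momentum_buffer": [0.0, 0.0]}

    def get_checkpoint_dict(self) -> Dict[str, Any]:
        # Deep copies, so later updates do not modify the saved checkpoint.
        return {
            "model": copy.deepcopy(self.model_state),
            "optimizer": copy.deepcopy(self.optimizer_state),
        }

    def load_checkpoint_dict(self, d: Dict[str, Any], load_optimizer_state: bool = False) -> None:
        self.model_state = copy.deepcopy(d["model"])
        if load_optimizer_state:
            # Only restore the optimizer state when explicitly requested.
            self.optimizer_state = copy.deepcopy(d["optimizer"])


opt = CheckpointSketch()
ckpt = opt.get_checkpoint_dict()
opt.model_state["m"][0] = 5.0
opt.optimizer_state["momentum_buffer"][0] = 7.0
opt.load_checkpoint_dict(ckpt)  # model restored, optimizer state kept
print(opt.model_state, opt.optimizer_state)
```

Restoring only the model is useful when restarting an optimization with a fresh optimizer; restoring both resumes it exactly where it stopped.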

set_external_optimizer_parameter_loss(opt_parameter_loss)

Sets an external method to be used as the optimizer parameter loss.

Parameters: opt_parameter_loss – method which takes shared_model_parameters as its only input

Returns: returns a scalar value which is the loss

get_external_optimizer_parameter_loss()

Returns the externally set method for the parameter loss. Will be None if none was set.

Returns: method

compute_optimizer_parameter_loss(shared_model_parameters)

Returns the optimizer parameter loss. This is the method that should be called to compute this loss. It evaluates optimizer_parameter_loss unless a method was defined externally, in which case the externally defined one takes priority.

Parameters: shared_model_parameters – parameters that have been declared shared in a model

Returns: parameter loss

optimizer_parameter_loss(shared_model_parameters)

Makes it possible to define additional loss terms based on parameters that are shared between models (for example, for the smoother). Can be used to define a form of consensus optimization.

Parameters: shared_model_parameters – parameters that have been declared shared in a model

Returns: 0 by default, otherwise the corresponding penalty
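The priority rule between optimizer_parameter_loss and an externally set loss can be sketched as follows. ParameterLossSketch is a hypothetical class that reproduces only the documented dispatch (an external method wins, otherwise the default of 0 is returned); the shared-parameter key smoother_weight in the usage lines is made up.

```python
from typing import Callable, Dict, Optional

Params = Dict[str, float]


class ParameterLossSketch:
    """Hypothetical class mirroring the documented dispatch logic."""

    def __init__(self) -> None:
        self._external_loss: Optional[Callable[[Params], float]] = None

    def set_external_optimizer_parameter_loss(self, fn: Callable[[Params], float]) -> None:
        self._external_loss = fn

    def get_external_optimizer_parameter_loss(self) -> Optional[Callable[[Params], float]]:
        return self._external_loss

    def optimizer_parameter_loss(self, shared_model_parameters: Params) -> float:
        return 0.0  # default: no additional penalty

    def compute_optimizer_parameter_loss(self, shared_model_parameters: Params) -> float:
        # An externally set method takes priority over the built-in default.
        if self._external_loss is not None:
            return self._external_loss(shared_model_parameters)
        return self.optimizer_parameter_loss(shared_model_parameters)


opt = ParameterLossSketch()
shared = {"smoother_weight": 2.0}
print(opt.compute_optimizer_parameter_loss(shared))  # -> 0.0
opt.set_external_optimizer_parameter_loss(lambda p: sum(v * v for v in p.values()))
print(opt.compute_optimizer_parameter_loss(shared))  # -> 4.0
```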

class mermaid.multiscale_optimizer.ImageRegistrationOptimizer(sz, spacing, useMap, mapLowResFactor, params, compute_inverse_map=False, default_learning_rate=None)

Optimization class for image registration.

ISource = None

source image

lowResISource = None

if mapLowResFactor < 1, a low-res source image needs to be created to parameterize some of the registration algorithms

lowResITarget = None

if mapLowResFactor < 1, a low-res target image may need to be created to be used as additional input for registration algorithms

ITarget = None

target image

LSource = None

source label

LTarget = None

target label

lowResLSource = None

if mapLowResFactor < 1, a low-res source label image needs to be created to parameterize some of the registration algorithms

lowResLTarget = None

if mapLowResFactor < 1, a low-res target label image needs to be created to parameterize some of the registration algorithms

initialMap = None

initial map

initialInverseMap = None

initial inverse map

weight_map = None

initial weight map

multi_scale_info_dic = None

dictionaries containing the full-resolution image and label

optimizer_name = None

name of the optimizer to use

optimizer_params = None

parameters that should be passed to the optimizer

optimizer = None

optimizer object itself (to be instantiated)

visualize = None

if True, figures are created during the run

visualize_step = None

how often the figures are updated; every self.visualize_step-th iteration

nrOfIterations = None

the maximum number of iterations for the optimizer

current_epoch = None

can be set externally, so the optimizer knows which epoch it is in

save_fig = None

save figures during the visualization

save_fig_path = None

the path for saving figures

save_fig_num = None

the maximum number of figures to be saved during one call; set to -1 to save all

pair_name = None

name list of the registration pairs

iter_count = None

count of the iterations over the multi-resolution hierarchy

recording_step = None

sets the step size for recording all intermediate results to the history
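recording_step controls how often intermediate results enter the history returned by get_history. A minimal sketch, assuming iterations are counted from 0; the dictionary keys "iter" and "energy" are hypothetical.

```python
from typing import Dict, List


def record_history(energies: List[float], recording_step: int) -> Dict[str, list]:
    """Keep every recording_step-th iteration (counting from 0) in a history dict.

    The keys "iter" and "energy" are hypothetical; the actual history
    dictionary also tracks other measures and may use different names.
    """
    history: Dict[str, list] = {"iter": [], "energy": []}
    for it, energy in enumerate(energies):
        if it % recording_step == 0:
            history["iter"].append(it)
            history["energy"].append(energy)
    return history


print(record_history([5.0, 4.0, 3.0, 2.0, 1.0, 0.5], recording_step=2))
# -> {'iter': [0, 2, 4], 'energy': [5.0, 3.0, 1.0]}
```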

set_visualization(vis)

Sets whether visualization should be on (True) or off (False).

Parameters: vis – visualization status on (True) or off (False)

get_visualization()

Returns the visualization status.

Returns: True if visualizations will be displayed, False otherwise

set_visualize_step(nr_step)

Sets after how many steps a visualization should be updated.

Parameters: nr_step – number of steps between visualization updates

get_visualize_step()

Returns after how many steps visualizations are updated.

Returns: after how many steps visualizations are updated

set_save_fig_path(save_fig_path)

Sets the path of saved figures; the default is ../data/expr_name.

Parameters: save_fig_path – path of saved figures

get_save_fig_path()

Returns the path of saved figures; the default is ../data/expr_name.

Returns: path of saved figures

set_save_fig_num(save_fig_num=-1)

Sets the maximum number of figures to save during one call; -1 saves all.

Parameters: save_fig_num – maximum number of figures to save (default: -1)
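Taken together, visualize_step and save_fig_num determine which iterations produce figures and how many of them are written to save_fig_path. The sketch below encodes one plausible reading of these settings; counting iterations from 0 and saving the first save_fig_num figures are assumptions, and the function name is hypothetical.

```python
from typing import List, Tuple


def visualization_plan(
    nr_iterations: int, visualize_step: int, save_fig_num: int = -1
) -> Tuple[List[int], List[int]]:
    """Return (visualized iterations, iterations whose figures are saved).

    Assumptions of this sketch: iterations are counted from 0, a figure is
    produced at every visualize_step-th iteration, and save_fig_num == -1
    means that all produced figures are saved.
    """
    shown = [it for it in range(nr_iterations) if it % visualize_step == 0]
    saved = list(shown) if save_fig_num == -1 else shown[:save_fig_num]
    return shown, saved


print(visualization_plan(10, 3, save_fig_num=2))  # -> ([0, 3, 6, 9], [0, 3])
```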

register(ISource, ITarget)

Registers the source image to the target image.

Parameters: - ISource – source image

- ITarget – target image

Returns: n/a

set_source_image(I)

Sets the source image, which should be deformed to match the target image.

Parameters: I – source image

set_multi_scale_info(ISource, ITarget, spacing, LSource=None, LTarget=None)

Provides the full-resolution image and label.

set_target_image(I)

Sets the target image, which the source image should match after registration.

Parameters: I – target image

set_optimizer_by_name(optimizer_name)

Sets the desired optimizer by name (only lbfgs and adam are currently supported).

Parameters: optimizer_name – name of the optimizer (string) to be used

get_optimizer_by_name()

Gets the name (string) of the optimizer that was selected.

Returns: name (string) of the optimizer

set_optimizer(opt)

Sets the optimizer directly (rather than by name) by passing the optimizer object that should be instantiated.

Parameters: opt – optimizer object

class mermaid.multiscale_optimizer.SingleScaleRegistrationOptimizer(sz, spacing, useMap, mapLowResFactor, params, compute_inverse_map=False, default_learning_rate=None)

Optimizer operating on a single scale. Typically this will be the full image resolution.

Todo

Determine the best way to adapt the tolerances for the predefined optimizers; tying them to rel_ftol is not really correct.

model = None

the model itself

criterion = None

the loss function

initialMap = None

initial map, needed for map-based solutions; by default this is the identity map, but it can be set to something different externally

initialInverseMap = None

initial inverse map; the same as the initial map unless it was set externally

map0_inverse_external = None

initial inverse map, set externally, needed for map-based solutions; by default this is the identity map, but it can be set to something different externally

map0_external = None

initial map, set externally

lowResInitialMap = None

low-res initial map, by default the identity map; needed for map-based solutions that are computed at a lower resolution

lowResInitialInverseMap = None

low-res initial inverse map, by default the identity map; needed for map-based solutions that are computed at a lower resolution

weight_map = None

initial weight map, which is only used by metric-learning models

optimizer_instance = None

the optimizer instance that performs the actual optimization

weight_clipping_type = None

type of weight clipping; applied to weights and bias independently; the norm is restricted to weight_clipping_value

weight_clipping_value = None

desired norm after clipping
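A sketch of norm-based clipping in the spirit of weight_clipping_type and weight_clipping_value, applied to weights and bias independently. The choice of the L2 norm and of rescaling only when the norm exceeds the limit are assumptions; the available clipping types are not listed here, and the function names are hypothetical.

```python
import math
from typing import List, Tuple


def clip_to_norm(values: List[float], max_norm: float) -> List[float]:
    """Rescale values so that their L2 norm does not exceed max_norm."""
    norm = math.sqrt(sum(v * v for v in values))
    if norm <= max_norm:
        return list(values)  # already within the limit
    scale = max_norm / norm
    return [v * scale for v in values]


def clip_weight_and_bias(
    weight: List[float], bias: List[float], max_norm: float
) -> Tuple[List[float], List[float]]:
    # Weights and bias are clipped independently of each other.
    return clip_to_norm(weight, max_norm), clip_to_norm(bias, max_norm)


print(clip_weight_and_bias([3.0, 4.0], [0.1], max_norm=1.0))
```

Here the weight vector (norm 5) is scaled down to norm 1, while the small bias is left untouched because it is clipped on its own.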

rec_custom_optimizer_output_string = None

the evaluation information

write_parameters_to_settings()

Writes the current state of the optimized parameters back to the JSON settings file (for example, to keep track of optimized weights).

get_checkpoint_dict()

Returns a dict() object containing the information for the current checkpoint.

Returns: checkpoint dictionary

load_checkpoint_dict(d, load_optimizer_state=False)

Takes the dictionary from a checkpoint and loads it as the current state of the optimizer and model.

Parameters: - d – dictionary

- load_optimizer_state – if set to True, the optimizer state will be restored

Returns: n/a

get_energy()

Returns the current energy.

Returns: a tuple (energy, similarity energy, regularization energy)

set_n_scale(n_scale)

Sets n_scale.

Parameters: n_scale – value to be stored as n_scale

set_model(modelName)

Sets the model that should be solved.

Parameters: modelName – name of the model that should be solved (string)

set_initial_map(map0, map0_inverse=None)

Sets the initial map (overwrites the default identity map).

Parameters: - map0 – initial map

- map0_inverse – initial inverse map

Returns: n/a

set_initial_weight_map(weight_map, freeze_weight=False)

Sets the initial weight map (used only by metric-learning models).

Parameters: - weight_map – initial weight map

- freeze_weight – whether the weight map should be frozen (default: False)

Returns: n/a

add_similarity_measure(sim_name, sim_measure)

Adds a custom similarity measure.

Parameters: - sim_name – name of the similarity measure (string)

- sim_measure – similarity measure itself (class object that can be instantiated)

add_model(model_name, model_network_class, model_loss_class, use_map, model_description='custom model')

Adds a custom model and its loss function.

Parameters: - model_name – name of the model to be added (string)

- model_network_class – registration model itself (class object that can be instantiated)

- model_loss_class – registration loss (class object that can be instantiated)

- use_map – True/False: specifies whether the model uses a map

- model_description – optional model description

set_model_state_dict(sd)

Sets the state dictionary of the model.

Parameters: sd – state dictionary

Returns: n/a

set_model_parameters(p)

Sets the parameters of the registration model.

Parameters: p – parameters

Sets only the shared parameters of the model.

Parameters: p – shared registration parameters as an ordered dict

Returns: n/a

Returns only the model parameters that are shared between models and the shared buffers associated with them.

Returns: shared model parameters and buffers

Returns only the model parameters that are shared between models.

Returns: shared model parameters

set_individual_model_parameters(p)

Sets only the individual parameters of the model.

Parameters: p – individual registration parameters as an ordered dict

Returns: n/a

get_individual_model_parameters()

Returns only the model parameters that are individual to a model (i.e., not shared).

Returns: individual model parameters

Loads the shared part of a state dictionary.

Parameters: sd – shared state dictionary

Returns: n/a

Returns the shared part of a state dictionary.

upsample_model_parameters(desiredSize)

Upsamples the model parameters.

Parameters: desiredSize – desired size after upsampling, e.g., [100,20,50]

Returns: a tuple (upsampled_parameters,upsampled_spacing)

downsample_model_parameters(desiredSize)

Downsamples the model parameters.

Parameters: desiredSize – desired size after downsampling, e.g., [50,50,40]

Returns: a tuple (downsampled_parameters,downsampled_spacing)
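upsample_model_parameters and downsample_model_parameters both return the resampled parameters together with the new spacing. The 1D sketch below illustrates that idea with linear interpolation, assuming the physical extent (n - 1) * spacing is preserved; mermaid's methods operate on multi-dimensional parameters and may interpolate differently, and resample_1d is a hypothetical name.

```python
from typing import List, Tuple


def resample_1d(values: List[float], spacing: float, desired_size: int) -> Tuple[List[float], float]:
    """Linearly resample a 1D field to desired_size samples.

    Returns (resampled_values, new_spacing). Preserving the physical extent
    (n - 1) * spacing is an assumption of this sketch.
    """
    n = len(values)
    if n < 2 or desired_size < 2:
        raise ValueError("need at least two samples before and after resampling")
    new_spacing = spacing * (n - 1) / (desired_size - 1)
    out = []
    for k in range(desired_size):
        t = k * (n - 1) / (desired_size - 1)  # position in old grid-index units
        i = min(int(t), n - 2)                 # left neighbor, kept inside the grid
        w = t - i
        out.append((1 - w) * values[i] + w * values[i + 1])
    return out, new_spacing


print(resample_1d([0.0, 1.0, 2.0], 0.5, 5))  # -> ([0.0, 0.5, 1.0, 1.5, 2.0], 0.25)
```

Upsampling and downsampling use the same routine; only desired_size changes, and the spacing is adjusted so the sampled domain keeps its extent.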

set_number_of_iterations(nrIter)

Sets the number of iterations of the optimizer.

Parameters: nrIter – number of iterations

get_number_of_iterations()

Returns the number of iterations of the solver.

Returns: number of set iterations

analysis(energy, similarityEnergy, regEnergy, opt_par_energy, phi_or_warped_image, custom_optimizer_output_string='', custom_optimizer_output_values=None, force_visualization=False)

Prints out and visualizes the result.

Parameters: - energy – total energy

- similarityEnergy – similarity energy

- regEnergy – regularization energy

- opt_par_energy – optimizer parameter energy

- phi_or_warped_image – map or warped image

Returns: a tuple whose first entry is True if the termination tolerance was reached (False otherwise) and whose second entry indicates whether the image was visualized

Sets the individual model parameters and states that may be stored by the optimizer, such as the momentum. Expects as input what get_sgd_individual_model_parameters_and_optimizer_states creates as output, but potentially multiple copies of it (as generated by a PyTorch dataloader), i.e., it takes in a dataloader sample. Note: currently only SGD is supported.

Parameters: pars – parameter list as produced by get_sgd_individual_model_parameters_and_optimizer_states

Returns: n/a

set_sgd_individual_model_parameters_and_optimizer_states(pars)

Sets the individual model parameters and states that may be stored by the optimizer, such as the momentum. Expects as input what get_sgd_individual_model_parameters_and_optimizer_states creates as output, but potentially multiple copies of it (as generated by a PyTorch dataloader), i.e., it takes in a dataloader sample. Note: currently only SGD is supported.

Parameters: pars – parameter list as produced by get_sgd_individual_model_parameters_and_optimizer_states

Returns: n/a
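Because SGD with momentum keeps a momentum buffer per parameter, optimizing many samples one after another requires saving and restoring each sample's buffer together with its individual parameters, which is what the method above handles. The sketch uses the PyTorch-style momentum update without dampening (buf = momentum * buf + grad; param -= lr * buf) on toy quadratic losses; the per-sample state dictionary, its keys, and the function names are hypothetical.

```python
from typing import Dict, List


def sgd_momentum_step(
    params: List[float], grads: List[float], buf: List[float], lr: float, momentum: float
) -> None:
    """One in-place SGD-with-momentum update (no dampening, no weight decay)."""
    for j, g in enumerate(grads):
        buf[j] = momentum * buf[j] + g
        params[j] -= lr * buf[j]


def run_epochs(targets: List[float], nr_epochs: int, lr: float = 0.1, momentum: float = 0.9) -> Dict[int, float]:
    # Hypothetical per-sample state: individual parameter plus its momentum buffer.
    state = {i: {"param": [0.0], "momentum": [0.0]} for i in range(len(targets))}
    for _ in range(nr_epochs):
        for i, a in enumerate(targets):
            s = state[i]  # restore this sample's parameter and momentum buffer
            grad = [s["param"][0] - a]  # gradient of 0.5 * (p - a)^2
            sgd_momentum_step(s["param"], grad, s["momentum"], lr, momentum)
    return {i: s["param"][0] for i, s in state.items()}


print(run_epochs([1.0, -2.0], nr_epochs=300))
```

Sharing one momentum buffer across samples would mix the gradient history of unrelated samples; keeping one buffer per sample lets each individual parameter converge to its own target.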

Gets the model parameters that are shared.

class mermaid.multiscale_optimizer.SingleScaleBatchRegistrationOptimizer(sz, spacing, useMap, mapLowResFactor, params, compute_inverse_map=False, default_learning_rate=None)

batch_size = None

how many images per batch

shuffle = None

shuffle batches between epochs

num_workers = None

number of workers to read the data

nr_of_epochs = None

number of epochs, i.e., how often to iterate over the entire dataset

parameter_output_dir = None

output directory to store the shared and the individual parameters during the iterations

start_from_previously_saved_parameters = None

if True, checks whether previously saved parameter files exist and, if so, loads them at the beginning

checkpoint_interval = None

after how many epochs checkpoints are saved
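The batch settings above amount to a loop over epochs and batches with periodic checkpoints. A minimal sketch, assuming a checkpoint is written after every checkpoint_interval-th completed epoch and using Python's random module for shuffling; the function name is hypothetical.

```python
import random
from typing import List, Tuple


def epoch_batches(
    nr_samples: int,
    batch_size: int,
    nr_of_epochs: int,
    shuffle: bool,
    checkpoint_interval: int,
    seed: int = 0,
) -> Tuple[List[List[List[int]]], List[int]]:
    """Return the batches (lists of sample indices) for every epoch and the
    0-based epochs after which a checkpoint would be written."""
    rng = random.Random(seed)
    schedule: List[List[List[int]]] = []
    checkpoint_epochs: List[int] = []
    for epoch in range(nr_of_epochs):
        order = list(range(nr_samples))
        if shuffle:
            rng.shuffle(order)  # a new order for every epoch
        schedule.append([order[s:s + batch_size] for s in range(0, nr_samples, batch_size)])
        # Assumption: checkpoint after every checkpoint_interval-th completed epoch.
        if checkpoint_interval > 0 and (epoch + 1) % checkpoint_interval == 0:
            checkpoint_epochs.append(epoch)
    return schedule, checkpoint_epochs


batches, ckpts = epoch_batches(5, 2, 4, shuffle=False, checkpoint_interval=2)
print(batches[0], ckpts)  # -> [[0, 1], [2, 3], [4]] [1, 3]
```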

show_sample_optimizer_output = None

shows iterations for each sample being optimized

write_parameters_to_settings()

Writes the current state of the optimized parameters back to the JSON settings file (for example, to keep track of optimized weights).

add_similarity_measure(simName, simMeasure)

Adds a custom similarity measure.

Parameters: - simName – name of the similarity measure (string)

- simMeasure – the similarity measure itself (an object that can be instantiated)

set_model(modelName)

Sets the model that should be solved.

Parameters: modelName – name of the model that should be solved (string)

add_model(add_model_name, add_model_networkClass, add_model_lossClass)

Adds a custom model to be optimized over.

Parameters: - add_model_name – name of the model (string)

- add_model_networkClass – network model itself (as an object that can be instantiated)

- add_model_lossClass – loss of the model (as an object that can be instantiated)

get_checkpoint_dict()

Returns a dict() object containing the information for the current checkpoint.

Returns: checkpoint dictionary

class mermaid.multiscale_optimizer.SingleScaleConsensusRegistrationOptimizer(sz, spacing, useMap, mapLowResFactor, params, compute_inverse_map=False, default_learning_rate=None)

sigma = None

multiplier for the squared augmented Lagrangian penalty

nr_of_epochs = None

how many iterations for consensus, i.e., how often to iterate over the entire dataset

batch_size = None

how many images per batch

save_intermediate_checkpoints = None

when set to True, checkpoints are retained for each batch iteration

checkpoint_output_directory = None

output directory where the checkpoints will be saved

save_consensus_state_checkpoints = None

saves the current consensus state; typically only the individual states are saved as checkpoints

continue_from_last_checkpoint = None

allows restarting an optimization by continuing from the last checkpoint

load_optimizer_state_from_checkpoint = None

if set to False, only the state of the model is loaded when resuming from a checkpoint

write_parameters_to_settings()

Writes the current state of the optimized parameters back to the JSON settings file (for example, to keep track of optimized weights).

add_similarity_measure(simName, simMeasure)

Adds a custom similarity measure.

Parameters: - simName – name of the similarity measure (string)

- simMeasure – the similarity measure itself (an object that can be instantiated)

set_model(modelName)

Sets the model that should be solved.

Parameters: modelName – name of the model that should be solved (string)

add_model(add_model_name, add_model_networkClass, add_model_lossClass)

Adds a custom model to be optimized over.

Parameters: - add_model_name – name of the model (string)

- add_model_networkClass – network model itself (as an object that can be instantiated)

- add_model_lossClass – loss of the model (as an object that can be instantiated)

get_checkpoint_dict()

Returns a dict() object containing the information for the current checkpoint.

Returns: checkpoint dictionary

load_checkpoint_dict(d, load_optimizer_state=False)

Takes the dictionary from a checkpoint and loads it as the current state of the optimizer and model.

Parameters: - d – dictionary

- load_optimizer_state – if set to True, the optimizer state will be restored

Returns: n/a

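optimize()

This optimizer performs consensus optimization:

- \((u_{i,\text{shared}}, u_{i,\text{individual}})^{k+1} = \operatorname{argmin} \sum_i \left[ f_i(u_{i,\text{shared}}, u_{i,\text{individual}}) + \frac{\sigma}{2}\left\|u_{i,\text{shared}} - u_{\text{consensus}}^{k} - z_i^{k}\right\|^2 \right]\)

- \(u_{\text{consensus}}^{k+1} = \frac{1}{n}\sum_{i=1}^{n}\left(u_{i,\text{shared}}^{k+1} - z_i^{k}\right)\)

- \(z_i^{k+1} = z_i^{k} - \left(u_{i,\text{shared}}^{k+1} - u_{\text{consensus}}^{k+1}\right)\)

Returns: n/a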
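To see the three updates in action, consider the toy losses f_i(u) = (u - a_i)^2 / 2 with no individual parameters. Setting the derivative of the local objective to zero gives the closed-form local update u_i = (a_i + sigma * (u_consensus + z_i)) / (1 + sigma). The sketch below applies the updates in the documented order; the toy losses and the function name are assumptions chosen for illustration, and the consensus value should approach the mean of the a_i, which minimizes the summed losses over a shared u.

```python
from typing import List, Tuple


def consensus_admm(a: List[float], sigma: float, nr_iterations: int) -> Tuple[float, List[float], List[float]]:
    """Consensus optimization for f_i(u) = 0.5 * (u - a_i)^2 (no individual parameters)."""
    n = len(a)
    u_consensus = 0.0
    z = [0.0] * n
    u = [0.0] * n
    for _ in range(nr_iterations):
        # 1) independent local solves with the augmented Lagrangian penalty (closed form)
        u = [(a_i + sigma * (u_consensus + z_i)) / (1.0 + sigma) for a_i, z_i in zip(a, z)]
        # 2) consensus update uses z^k, i.e., before z is updated
        u_consensus = sum(u_i - z_i for u_i, z_i in zip(u, z)) / n
        # 3) z update with the new consensus value
        z = [z_i - (u_i - u_consensus) for z_i, u_i in zip(z, u)]
    return u_consensus, u, z


u_c, u, z = consensus_admm([1.0, 2.0, 6.0], sigma=1.0, nr_iterations=200)
print(round(u_c, 6))  # -> 3.0
```

At convergence all local copies agree with the consensus value, and z_i settles at u_consensus - a_i, which balances each sample's pull toward its own a_i.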

class mermaid.multiscale_optimizer.MultiScaleRegistrationOptimizer(sz, spacing, useMap, mapLowResFactor, params, compute_inverse_map=False, default_learning_rate=None)

Class to perform multi-scale optimization. Essentially puts a loop around multiple calls of the single-scale optimizer and starts with the registration of downsampled images. When moving up the hierarchy, the registration parameters are upsampled from the solution at the previous lower resolution.

scaleFactors = None

at which image scales the optimization should be computed

scaleIterations = None

number of iterations per scale

addSimName = None

name of the similarity measure to be added

addSimMeasure = None

similarity measure itself that should be added

add_model_name = None

name of the model that should be added

add_model_networkClass = None

network object of the model to be added

add_model_lossClass = None

loss object of the model to be added

model_name = None

name of the model to be added (if specified by name; overridden by specifying an optimizer directly)

ssOpt = None

single-scale optimizer

write_parameters_to_settings()

Writes the current state of the optimized parameters back to the JSON settings file (for example, to keep track of optimized weights).

add_similarity_measure(simName, simMeasure)

Adds a custom similarity measure.

Parameters: - simName – name of the similarity measure (string)

- simMeasure – the similarity measure itself (an object that can be instantiated)

set_model(modelName)

Sets the model to be optimized over by name.

Parameters: modelName – the name of the model (string)

set_initial_map(map0, map0_inverse=None)

Sets the initial map (overwrites the default identity map).

Parameters: - map0 – initial map

- map0_inverse – initial inverse map (optional)

Returns: n/a

set_save_fig_path(save_fig_path)

Sets the path of saved figures; the default is ../data/expr_name.

Parameters: save_fig_path – path of saved figures

add_model(add_model_name, add_model_networkClass, add_model_lossClass, use_map)

Adds a custom model to be optimized over.

Parameters: - add_model_name – name of the model (string)

- add_model_networkClass – network model itself (as an object that can be instantiated)

- add_model_lossClass – loss of the model (as an object that can be instantiated)

- use_map – if set to True, the model uses a map; otherwise it works directly with the image

set_scale_factors(scaleFactors)

Sets the scale factors for the solution. They should be in descending order, e.g., [1.0, 0.5, 0.25].

Parameters: scaleFactors – scale factors for the multi-scale solution hierarchy

set_number_of_iterations_per_scale(scaleIterations)

Sets the number of iterations that will be performed per scale of the multi-resolution hierarchy, e.g., [50,100,200].

Parameters: scaleIterations – number of iterations per scale (array)
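A coarse-to-fine loop over the scale hierarchy can be sketched as below. Pairing scaleFactors[i] with scaleIterations[i], and reversing the descending list so the optimization starts at the coarsest scale, are assumptions of this sketch. solve_at_scale is a hypothetical callback standing in for one single-scale optimization, including the upsampling of the previous solution (compare upsample_model_parameters) and the propagation of the last successful step size.

```python
from typing import Any, Callable, List, Optional, Sequence, Tuple


def multiscale_schedule(
    scale_factors: Sequence[float], scale_iterations: Sequence[int]
) -> List[Tuple[float, int]]:
    """Pair each scale factor with its iteration count, ordered coarse to fine."""
    if len(scale_factors) != len(scale_iterations):
        raise ValueError("need one iteration count per scale factor")
    if any(a <= b for a, b in zip(scale_factors, scale_factors[1:])):
        raise ValueError("scale factors must be in descending order, e.g., [1.0, 0.5, 0.25]")
    # Input is descending (finest first); reverse so the loop starts at the coarsest scale.
    return list(zip(reversed(scale_factors), reversed(scale_iterations)))


def run_multiscale(
    schedule: List[Tuple[float, int]],
    solve_at_scale: Callable[[float, int, Any, Optional[float]], Tuple[Any, float]],
) -> Tuple[Any, Optional[float]]:
    """Coarse-to-fine driver; solve_at_scale(factor, iterations, init, step) is a
    hypothetical callback returning (solution, last successful step size)."""
    solution: Any = None
    step: Optional[float] = None
    for factor, iterations in schedule:
        # The previous solution and step size seed the next, finer level.
        solution, step = solve_at_scale(factor, iterations, solution, step)
    return solution, step


print(multiscale_schedule([1.0, 0.5, 0.25], [50, 100, 200]))
# -> [(0.25, 200), (0.5, 100), (1.0, 50)]
```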


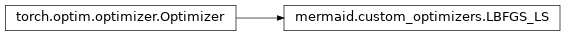

Custom optimizers

This package includes an lBFGS optimizer that supports different types of line search, in particular backtracking, which is important for the image registration implementations. At the time of writing (July 2017), this implementation was available in the PyTorch git repository but not yet in a standard release.
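Backtracking starts from a trial step and shrinks it until a sufficient-decrease condition holds. The sketch below shows a generic Armijo backtracking search along a descent direction; it is not the LBFGS_LS implementation, and the constants c1 = 1e-4 and shrink factor 0.5 are common textbook choices rather than values taken from it.

```python
from typing import Callable, List


def backtracking_line_search(
    f: Callable[[List[float]], float],
    x: List[float],
    grad: List[float],
    direction: List[float],
    t0: float = 1.0,
    c1: float = 1e-4,
    shrink: float = 0.5,
    max_shrinks: int = 50,
) -> float:
    """Return a step size t satisfying the Armijo condition
    f(x + t * d) <= f(x) + c1 * t * grad^T d, starting at t0 and shrinking."""
    fx = f(x)
    slope = sum(g * d for g, d in zip(grad, direction))  # directional derivative
    if slope >= 0:
        raise ValueError("direction is not a descent direction")
    t = t0
    for _ in range(max_shrinks):
        trial = [xi + t * di for xi, di in zip(x, direction)]
        if f(trial) <= fx + c1 * t * slope:
            return t
        t *= shrink
    return t


def f(x: List[float]) -> float:
    return x[0] ** 2 + 10 * x[1] ** 2  # ill-conditioned quadratic


x0 = [1.0, 1.0]
g = [2 * x0[0], 20 * x0[1]]  # gradient of f at x0
d = [-gi for gi in g]        # steepest-descent direction
print(backtracking_line_search(f, x0, g, d))  # -> 0.0625
```

The full step t = 1 overshoots badly along the steep coordinate, so the search halves it four times before the sufficient-decrease test passes.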

Todo

Add support for multiple parameter groups.

class mermaid.custom_optimizers.LBFGS_LS(params, lr=1, max_iter=20, max_eval=None, tolerance_grad=1e-05, tolerance_change=1e-09, history_size=100, line_search_fn=None, bounds=None)

Implements the L-BFGS algorithm.

Warning

This optimizer doesn’t support per-parameter options and parameter groups (there can be only one).

Warning

Right now all parameters have to be on a single device. This will be improved in the future.

Note

This is a very memory-intensive optimizer (it requires an additional param_bytes * (history_size + 1) bytes). If it does not fit in memory, try reducing the history size or use a different algorithm.

Parameters: - lr (float) – learning rate (default: 1)

- max_iter (int) – maximal number of iterations per optimization step (default: 20)

- max_eval (int) – maximal number of function evaluations per optimization step (default: max_iter * 1.25)

- tolerance_grad (float) – termination tolerance on first-order optimality (default: 1e-5)

- tolerance_change (float) – termination tolerance on function value/parameter changes (default: 1e-9)

- history_size (int) – update history size (default: 100)

- line_search_fn (str) – line search method; currently available: ‘backtracking’, ‘goldstein’, ‘weak_wolfe’

- bounds (list of tuples of tensor) – bounds[i][0] and bounds[i][1] are the elementwise lower and upper bounds of param[i], respectively
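To get a sense of the memory note above, the following sketch evaluates param_bytes * (history_size + 1) for a hypothetical parameter field: a 3-component vector field on a 128^3 grid stored as float32 (4 bytes per value). The field size is an illustrative assumption.

```python
def lbfgs_extra_bytes(num_values: int, bytes_per_value: int, history_size: int) -> int:
    """Additional memory stated in the note: param_bytes * (history_size + 1)."""
    param_bytes = num_values * bytes_per_value
    return param_bytes * (history_size + 1)


# Hypothetical example: a 3-component vector field on a 128^3 grid in float32.
n = 128 ** 3 * 3
for h in (100, 10):
    extra = lbfgs_extra_bytes(n, 4, h)
    print(h, extra, f"{extra / 2**30:.2f} GiB")
```

For this example the extra memory is 2,541,748,224 bytes (about 2.37 GiB) with the default history_size=100, but only 276,824,064 bytes (264 MiB) with history_size=10.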