Utilities and Extensions¶

Data wrapper¶

-

mermaid.data_wrapper.AdaptVal(x)[source]¶ Adapts x to the configured precision (float32 or float16) and device (GPU or CPU). float16 is not recommended because it is not stable.

-

mermaid.data_wrapper.STNVal(x, ini)[source]¶ The CUDA version of the STN is written in float32, so the input is first converted to float32 and the output is converted to the adaptive type.

-

mermaid.data_wrapper.FFTVal(x, ini)¶ The CUDA version of the STN is written in float32, so the input is first converted to float32 and the output is converted to the adaptive type.

Utilities¶

Various utility functions.

Todo

Reorganize this package in a more meaningful way.

-

mermaid.utils.my_hasnan(x)[source]¶ Check if any input elements are NaNs.

Parameters: x – numpy array Returns: True if NaNs are present, False otherwise
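As a rough illustration of this kind of check, the following NumPy sketch tests an array for NaNs. The helper name `has_nan` is hypothetical and is not the library's implementation.

```python
import numpy as np

def has_nan(x):
    """Return True if any element of x is NaN (illustrative helper, not the library code)."""
    return bool(np.isnan(np.asarray(x, dtype=float)).any())

print(has_nan(np.array([1.0, 2.0])))     # False
print(has_nan(np.array([1.0, np.nan])))  # True
```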

-

mermaid.utils.combine_dict(d1, d2)[source]¶ Creates a dictionary which has entries from both input dictionaries.

Parameters: - d1 – dictionary 1

- d2 – dictionary 2

Returns: resulting dictionary

-

mermaid.utils.get_parameter_list_from_parameter_dict(pd)[source]¶ Takes a dictionary which contains key value pairs for model parameters and converts it into a list of parameters that can be used as an input to an optimizer.

Parameters: pd – parameter dictionary Returns: list of parameters

-

mermaid.utils.get_parameter_list_and_par_to_name_dict_from_parameter_dict(pd)[source]¶ Same as get_parameter_list_from_parameter_dict; but also returns a dictionary which keeps track of the keys based on memory id.

Parameters: pd – parameter dictionary Returns: tuple of (parameter_list, name_dictionary)

-

mermaid.utils.lift_to_dimension(A, dim)[source]¶ Creates a view of A of dimension dim (by adding dummy dimensions if necessary).

Parameters: - A – numpy array

- dim – desired dimension of view

Returns: returns view of A of appropriate dimension
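A minimal NumPy sketch of lifting an array to a higher dimension follows. It assumes that the dummy dimensions are added at the front; the documentation above does not say where they go, and the name `lift_to_dimension_sketch` is hypothetical.

```python
import numpy as np

def lift_to_dimension_sketch(A, dim):
    """Return a view of A with `dim` dimensions by prepending singleton axes (assumed placement)."""
    A = np.asarray(A)
    if A.ndim > dim:
        raise ValueError("A already has more dimensions than requested")
    return A.reshape((1,) * (dim - A.ndim) + A.shape)

A = np.arange(6.0).reshape(2, 3)
B = lift_to_dimension_sketch(A, 4)
print(B.shape)                 # (1, 1, 2, 3)
print(np.shares_memory(A, B))  # True: B is a view, not a copy
```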

-

mermaid.utils.get_dim_of_affine_transform(Ab)[source]¶ Returns the number of dimensions corresponding to an affine transformation of the form y=Ax+b stored in a column vector. For A =[a1,a2,a3], the parameter vector is simply [a1;a2;a3;b], i.e., all columns stacked on top of each other.

Parameters: Ab – parameter vector Returns: dimensionality of transform (1,2,or 3)
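To make the storage convention concrete: a d-dimensional transform stacks the d columns of A (d entries each) followed by b, so the vector has d*d + d entries (2, 6, or 12). The sketch below uses hypothetical helper names and plain NumPy; it is not the library's code.

```python
import numpy as np

def dim_from_affine_params(Ab):
    """Infer d from the length d*d + d of a stacked affine parameter vector."""
    n = np.asarray(Ab).size
    for d in (1, 2, 3):
        if n == d * d + d:
            return d
    raise ValueError(f"{n} parameters do not match a 1D, 2D, or 3D affine transform")

A = np.array([[1.0, 2.0], [3.0, 4.0]])
b = np.array([5.0, 6.0])
# Column-major flattening stacks the columns a1, a2 of A; b is appended last.
Ab = np.concatenate([A.flatten(order="F"), b])
print(Ab)                          # columns of A first, then b
print(dim_from_affine_params(Ab))  # 2
```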

-

mermaid.utils.set_affine_transform_to_identity(Ab)[source]¶ Sets the affine transformation as given by the column vector Ab to the identity transform.

Parameters: Ab – Affine parameter vector (will be overwritten with the identity transform) Returns:

-

mermaid.utils.set_affine_transform_to_identity_multiN(Ab)[source]¶ Set the affine transforms to the identity (in the case of arbitrary batch size).

Parameters: Ab – Parameter vectors B x pars (batch size x param. vector); will be overwritten with identity trans. Returns:

-

mermaid.utils.get_inverse_affine_param(Ab)[source]¶ Computes inverse of affine transformation.

Formally: C(Ax+b)+d = CAx+Cb+d = x; C = inv(A), d = -Cb

Parameters: Ab – B x pars (batch size x param. vector) Returns: Inverse of affine parameters
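The inversion formula can be checked numerically. The sketch below works on an explicit matrix A and vector b for a single transform rather than on the batched parameter vectors the function expects; the name `invert_affine` is hypothetical.

```python
import numpy as np

def invert_affine(A, b):
    """Return (C, d) with C = inv(A) and d = -C b, so that C(Ax + b) + d = x."""
    C = np.linalg.inv(A)
    d = -C @ b
    return C, d

A = np.array([[2.0, 1.0], [0.0, 1.0]])
b = np.array([1.0, -3.0])
C, d = invert_affine(A, b)

x = np.array([0.5, 2.0])
y = A @ x + b
print(np.allclose(C @ y + d, x))  # True
```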

-

mermaid.utils.update_affine_param(Ab, Cd)[source]¶ Update affine parameters.

Formally: C(Ax+b)+d = CAx+Cb+d

Parameters: Ab – B x pars (batch size x param. vector) Returns: Updated affine parameters
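The update formula is a composition of two affine maps. As a sketch under the assumption that Ab is applied first and Cd second (which is what the formula above suggests), the composite has matrix CA and offset Cb + d. The helper name `compose_affine` is hypothetical and operates on explicit matrices rather than stacked parameter vectors.

```python
import numpy as np

def compose_affine(A, b, C, d):
    """Return (CA, Cb + d): the single transform equal to applying (A, b), then (C, d)."""
    return C @ A, C @ b + d

A = np.array([[2.0, 0.0], [0.0, 2.0]])
b = np.array([1.0, 1.0])
C = np.array([[0.0, -1.0], [1.0, 0.0]])
d = np.array([0.0, 3.0])

M, t = compose_affine(A, b, C, d)
x = np.array([1.0, 2.0])
print(np.allclose(M @ x + t, C @ (A @ x + b) + d))  # True
```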

-

mermaid.utils.apply_affine_transform_to_map(Ab, phi)[source]¶ Applies an affine transform to a map.

Parameters: - Ab – affine transform parameter column vector

- phi – map; format nrCxXxYxZ (nrC corresponds to dimension)

Returns: returns transformed map

-

mermaid.utils.apply_affine_transform_to_map_multiNC(Ab, phi)[source]¶ Applies an affine transform to maps (for arbitrary batch size).

Parameters: - Ab – affine transform parameter column vectors (batch size x param. vector)

- phi – maps; format batchxnrCxXxYxZ (nrC corresponds to dimension)

Returns: returns transformed maps

-

mermaid.utils.compute_normalized_gaussian(X, mu, sig)[source]¶ Computes a normalized Gaussian.

Parameters: - X – map with coordinates at which to evaluate

- mu – array indicating the mean

- sig – array indicating the standard deviations for the different dimensions

Returns: Normalized Gaussian evaluated at coordinates in X

Example:

>>> mu, sig = [1,1], [1,1]
>>> X = [0,0]
>>> print(compute_normalized_gaussian(X, mu, sig))
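For intuition, here is a standalone NumPy sketch of an axis-aligned Gaussian evaluated on a coordinate map. It assumes that "normalized" means the sampled values are scaled to sum to one, which the documentation above does not state explicitly; the function name is hypothetical.

```python
import numpy as np

def normalized_gaussian_sketch(X, mu, sig):
    """Evaluate a Gaussian on the coordinate map X (shape dim x ...) and scale it to sum to one."""
    X = np.asarray(X, dtype=float)
    exponent = np.zeros(X.shape[1:])
    for d in range(X.shape[0]):
        exponent += (X[d] - mu[d]) ** 2 / (2.0 * sig[d] ** 2)
    g = np.exp(-exponent)
    return g / g.sum()

axis = np.linspace(-3.0, 3.0, 31)
X = np.stack(np.meshgrid(axis, axis, indexing="ij"))  # shape 2 x 31 x 31
g = normalized_gaussian_sketch(X, mu=[0.0, 0.0], sig=[1.0, 1.0])
print(g.shape)                  # (31, 31)
print(np.isclose(g.sum(), 1.0)) # True
```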

-

mermaid.utils.compute_warped_image(I0, phi, spacing, spline_order, zero_boundary=False, use_01_input=True)[source]¶ Warps image.

Parameters: - I0 – image to warp, image size XxYxZ

- phi – map for the warping, size dimxXxYxZ

- spacing – image spacing [dx,dy,dz]

Returns: returns the warped image of size XxYxZ
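Warping means sampling the image at the positions stored in the map. The 1D sketch below uses linear interpolation via `np.interp` and assumes the map holds physical coordinates (index times spacing). The treatment of `zero_boundary` (zero outside the image, otherwise repeat the edge value) is also an assumption, and `warp_1d` is a hypothetical name.

```python
import numpy as np

def warp_1d(I0, phi, spacing, zero_boundary=False):
    """Sample the 1D image I0 at the physical positions phi using linear interpolation."""
    I0 = np.asarray(I0, dtype=float)
    x = np.arange(I0.size) * spacing          # physical coordinate of each sample
    outside = 0.0 if zero_boundary else None  # None makes np.interp repeat the edge values
    return np.interp(phi, x, I0, left=outside, right=outside)

I0 = np.array([0.0, 1.0, 4.0, 9.0, 16.0])
dx = 0.5
identity = np.arange(I0.size) * dx

print(warp_1d(I0, identity, dx))                           # identity map: image unchanged
print(warp_1d(I0, identity + dx, dx))                      # shifted by one sample, edge repeated
print(warp_1d(I0, identity + dx, dx, zero_boundary=True))  # shifted by one sample, zero outside
```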

-

mermaid.utils.compute_warped_image_multiNC(I0, phi, spacing, spline_order, zero_boundary=False, use_01_input=True)[source]¶ Warps image.

Parameters: - I0 – image to warp, image size BxCxXxYxZ

- phi – map for the warping, size BxdimxXxYxZ

- spacing – image spacing [dx,dy,dz]

Returns: returns the warped image of size BxCxXxYxZ

-

mermaid.utils.compute_vector_momentum_from_scalar_momentum_multiNC(lam, I, sz, spacing)[source]¶ Computes the vector momentum from the scalar momentum: \(m=\lambda\nabla I\).

Parameters: - lam – scalar momentum, BxCxXxYxZ

- I – image, BxCxXxYxZ

- sz – size of image

- spacing – spacing of image

Returns: returns the vector momentum
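The relation \(m=\lambda\nabla I\) can be sketched for a single 2D image with NumPy. This uses `np.gradient` (central differences in the interior); the finite-difference scheme the library uses is not specified here, and `vector_momentum_2d` is a hypothetical name.

```python
import numpy as np

def vector_momentum_2d(lam, I, spacing):
    """Return m = lam * grad(I) with shape 2 x X x Y for a single 2D image."""
    dI_dx, dI_dy = np.gradient(I, spacing[0], spacing[1])
    return np.stack([lam * dI_dx, lam * dI_dy])

spacing = (0.5, 0.25)
x = np.arange(6)[:, None] * spacing[0] * np.ones((1, 8))  # x coordinate at every pixel
I = 2.0 * x                                               # linear ramp: dI/dx = 2, dI/dy = 0
lam = np.full(I.shape, 3.0)

m = vector_momentum_2d(lam, I, spacing)
print(m.shape)  # (2, 6, 8)
```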

-

mermaid.utils.compute_vector_momentum_from_scalar_momentum_multiN(lam, I, nrOfI, sz, spacing)[source]¶ Computes the vector momentum from the scalar momentum: \(m=\lambda\nabla I\).

Parameters: - lam – scalar momentum, batchxXxYxZ

- I – image, batchxXxYxZ

- sz – size of image

- spacing – spacing of image

Returns: returns the vector momentum

-

mermaid.utils.create_ND_vector_field_variable_multiN(sz, nr_of_images=1)[source]¶ Create vector field torch Variable of given size

Parameters: - sz – just the spatial sizes (e.g., [5] in 1D, [5,10] in 2D, [5,10,10] in 3D)

- nr_of_images – number of images

Returns: returns vector field of size nr_of_imagesxdimxXxYxZ

-

mermaid.utils.create_ND_vector_field_variable(sz)[source]¶ Create vector field torch Variable of given size.

Parameters: sz – just the spatial sizes (e.g., [5] in 1D, [5,10] in 2D, [5,10,10] in 3D) Returns: returns vector field of size dimxXxYxZ

-

mermaid.utils.create_vector_parameter(nr_of_elements)[source]¶ Creates a vector parameter with a specified number of elements.

Parameters: nr_of_elements – number of vector elements Returns: returns the parameter vector

-

mermaid.utils.create_ND_vector_field_parameter_multiN(sz, nrOfI=1, get_field_from_external_network=False)[source]¶ Create vector field torch Parameter of given size.

Parameters: - sz – just the spatial sizes (e.g., [5] in 1D, [5,10] in 2D, [5,10,10] in 3D)

- nrOfI – number of images

Returns: returns vector field of size nrOfIxdimxXxYxZ

-

mermaid.utils.create_local_filter_weights_parameter_multiN(sz, gaussian_std_weights, nrOfI=1, sched='w_K_w', get_preweight_from_network=False)[source]¶ Create vector field torch Parameter of given size

Parameters: - sz – just the spatial sizes (e.g., [5] in 1D, [5,10] in 2D, [5,10,10] in 3D)

- nrOfI – number of images

Returns: returns vector field of size nrOfIxdimxXxYxZ

-

mermaid.utils.create_ND_scalar_field_parameter_multiNC(sz, nrOfI=1, nrOfC=1)[source]¶ Create scalar field torch Parameter of given size

Parameters: - sz – just the spatial sizes (e.g., [5] in 1D, [5,10] in 2D, [5,10,10] in 3D)

- nrOfI – number of images

- nrOfC – number of channels

Returns: returns scalar field of size nrOfIxnrOfCxXxYxZ

-

mermaid.utils.centered_identity_map_multiN(sz, spacing, dtype='float32')[source]¶ Create a centered identity map (shifted so it is centered around 0)

Parameters: - sz – size of an image in BxCxXxYxZ format

- spacing – list with spacing information [sx,sy,sz]

- dtype – numpy data-type (‘float32’, ‘float64’, …)

Returns: returns the identity map

-

mermaid.utils.identity_map_multiN(sz, spacing, dtype='float32')[source]¶ Create an identity map

Parameters: - sz – size of an image in BxCxXxYxZ format

- spacing – list with spacing information [sx,sy,sz]

- dtype – numpy data-type (‘float32’, ‘float64’, …)

Returns: returns the identity map

-

mermaid.utils.centered_identity_map(sz, spacing, dtype='float32')[source]¶ Returns a centered identity map (with 0 in the middle) if sz is odd; otherwise shifts everything by 0.5*spacing.

Parameters: - sz – just the spatial dimensions, i.e., XxYxZ

- spacing – list with spacing information [sx,sy,sz]

- dtype – numpy data-type (‘float32’, ‘float64’, …)

Returns: returns the identity map of dimension dimxXxYxZ

-

mermaid.utils.identity_map(sz, spacing, dtype='float32')[source]¶ Returns an identity map.

Parameters: - sz – just the spatial dimensions, i.e., XxYxZ

- spacing – list with spacing information [sx,sy,sz]

- dtype – numpy data-type (‘float32’, ‘float64’, …)

Returns: returns the identity map of dimension dimxXxYxZ
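An identity map stores, at every grid point, that point's own coordinates. The NumPy sketch below assumes the coordinates start at 0 and are scaled by the spacing; the name `identity_map_sketch` is hypothetical and this is not the library's implementation.

```python
import numpy as np

def identity_map_sketch(sz, spacing, dtype="float32"):
    """Return an array of shape dim x sz[0] x ... whose d-th channel holds the coordinate along axis d."""
    grids = np.meshgrid(*[np.arange(n) for n in sz], indexing="ij")
    return np.stack([g * s for g, s in zip(grids, spacing)]).astype(dtype)

phi = identity_map_sketch([3, 4], [0.5, 0.25])
print(phi.shape)     # (2, 3, 4)
print(phi[0, 2, 0])  # x coordinate of grid point (2, 0): 2 * 0.5
print(phi[1, 0, 3])  # y coordinate of grid point (0, 3): 3 * 0.25
```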

-

mermaid.utils.omt_boundary_weight_mask(img_sz, spacing, mask_range=5, mask_value=5, smoother_std=0.05)[source]¶ Generates a smooth weight mask for the OMT.

-

mermaid.utils.momentum_boundary_weight_mask(img_sz, spacing, mask_range=5, smoother_std=0.05, pow=2)[source]¶ Generates a smooth weight mask for the OMT.

-

mermaid.utils.t2np(v)[source]¶ Takes a torch array and returns it as a numpy array on the cpu

Parameters: v – torch array Returns: numpy array

-

mermaid.utils.cxyz_to_xyzc(v)[source]¶ Takes a torch array and returns it as a numpy array on the cpu

Parameters: v – torch array Returns: numpy array

-

mermaid.utils.space_normal(tensors, std=0.1)[source]¶ Space normalization for the net kernel.

-

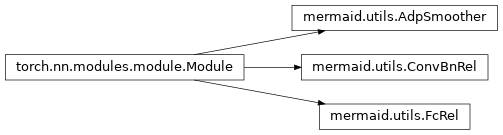

class mermaid.utils.ConvBnRel(in_channels, out_channels, kernel_size, stride=1, active_unit='relu', same_padding=False, bn=False, reverse=False, bias=False)[source]¶

forward(x)[source]¶ Defines the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.

-

-

class mermaid.utils.FcRel(in_features, out_features, active_unit='relu')[source]¶

forward(x)[source]¶ Defines the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.

-

-

class mermaid.utils.AdpSmoother(inputs, dim, net_sched=None)[source]¶ A simple convolutional implementation that generates a displacement field.

-

get_net_sched(debugging=True, using_bn=True, active_unit='relu', using_sigmoid=False, kernel_size=5)[source]¶

-

forward(m, new_s=None)[source]¶ Defines the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.

-

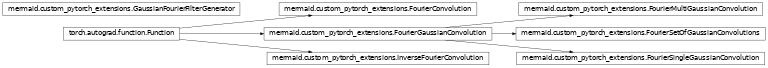

Custom pyTorch extensions¶

This package implements pytorch functions for Fourier-based convolutions. While this may not be relevant for GPU-implementations, convolutions in the spatial domain are slow on CPUs. Hence, this function should be useful for memory-intensive models that need to be run on the CPU or CPU-based computations involving convolutions in general.
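The idea behind Fourier-based convolution is that a (circular) convolution in the spatial domain becomes a pointwise product in the Fourier domain. The following NumPy sketch illustrates this and compares it with a direct 1D evaluation; both function names are hypothetical and this is not the package's PyTorch code.

```python
import numpy as np

def fourier_convolve(image, spatial_filter):
    """Circular convolution of two same-sized real arrays via the FFT."""
    return np.real(np.fft.ifftn(np.fft.fftn(image) * np.fft.fftn(spatial_filter)))

def direct_circular_convolve_1d(f, h):
    """Reference O(n^2) circular convolution, used only to check the FFT result."""
    n = len(f)
    return np.array([sum(f[j] * h[(i - j) % n] for j in range(n)) for i in range(n)])

rng = np.random.default_rng(0)
f = rng.standard_normal(16)
h = rng.standard_normal(16)
print(np.allclose(fourier_convolve(f, h), direct_circular_convolve_1d(f, h)))  # True
```

The FFT route costs O(n log n) instead of O(n^2), which is where the CPU speed-up for large filters comes from.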

Todo

Create a CUDA version of these convolution functions. There is already a CUDA-based FFT implementation available which could be built upon. Alternatively, spatial smoothing may be sufficiently fast on the GPU.

-

mermaid.custom_pytorch_extensions.symmetrize_filter_center_at_zero(filter, renormalize=False)[source]¶ Symmetrizes filter. The assumption is that the filter is already in the format for input to an FFT. I.e., that it has been transformed so that the center of the pixel is at zero.

Parameters: - filter – Input filter (in spatial domain). Will be symmetrized (i.e., will change its value)

- renormalize – (bool) if true will normalize so that the sum is one

Returns: n/a (returns via call by reference)

-

mermaid.custom_pytorch_extensions.are_indices_close(loc)[source]¶ This function takes a set of indices (as produced by np.where) and determines if they are roughly close to each other. It returns True if they are and False otherwise.

Parameters: loc – Index locations as output by np.where Returns: whether the indices are roughly close to each other

Todo

There should be a better check for closeness of points. The implemented one is very crude.

-

mermaid.custom_pytorch_extensions.create_complex_fourier_filter(spatial_filter, sz, enforceMaxSymmetry=True, maxIndex=None, renormalize=False)[source]¶ Creates a filter in the Fourier domain given a spatial array defining the filter

Parameters: - spatial_filter – Array defining the filter.

- sz – Desired size of the filter in the Fourier domain.

- enforceMaxSymmetry – If set to True (default) forces the filter to be real and hence forces the filter in the spatial domain to be symmetric

- maxIndex – specifies the index of the maximum which will be used to enforceMaxSymmetry. If it is not defined, the maximum is simply computed

- renormalize – (bool) if true, the filter is renormalized to sum to one (useful for Gaussians for example)

Returns: Returns the complex coefficients for the filter in the Fourier domain and the maxIndex
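The link between spatial symmetry and a real-valued Fourier filter can be verified numerically. In this NumPy sketch (illustrative only, not the library's code), a Gaussian centered at index 0 in FFT layout is symmetric and has purely real Fourier coefficients, whereas shifting it by one sample breaks the symmetry and introduces imaginary parts.

```python
import numpy as np

n = 8
# Integer offsets in FFT layout: 0, 1, 2, 3, -4, -3, -2, -1 (center at index 0).
k = np.fft.fftfreq(n, d=1.0 / n)
g = np.exp(-0.5 * (k / 1.5) ** 2)  # symmetric about index 0
g /= g.sum()

G_symmetric = np.fft.fft(g)
G_shifted = np.fft.fft(np.roll(g, 1))  # peak moved to index 1, no longer symmetric

print(np.abs(G_symmetric.imag).max())  # numerically zero
print(np.abs(G_shifted.imag).max())    # clearly nonzero
```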

-

mermaid.custom_pytorch_extensions.create_cuda_filter(spatial_filter, sz)[source]¶ Creates the CUDA version of the filter. One extra dimension is added to the output for computational convenience. In addition, the output is not the full complex result of shape (∗,2), where ∗ is the shape of the input; instead, the last dimension is halved to size ⌊Nd/2⌋+1.

Parameters: - spatial_filter – N1 x…xNd, no batch dimension, no channel dimension

- sz – [N1,…, Nd]

Returns: filter, with size [1,N1,..Nd-1,⌊Nd/2⌋+1,2]
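The halved last dimension is the usual layout of a real-input FFT. As a NumPy stand-in for the shapes described above (the library itself works with torch tensors, and the packing into a trailing real/imaginary axis is shown here only to mirror the documented output size):

```python
import numpy as np

spatial_filter = np.random.rand(6, 8, 10)  # N1 x N2 x N3, no batch or channel dimension

full = np.fft.fftn(spatial_filter)   # full complex spectrum
half = np.fft.rfftn(spatial_filter)  # real-input FFT: last axis has floor(N3/2) + 1 entries

# Store real and imaginary parts in a trailing axis of size 2 and add a leading axis.
packed = np.stack([half.real, half.imag], axis=-1)[np.newaxis]

print(full.shape)    # (6, 8, 10)
print(half.shape)    # (6, 8, 6)
print(packed.shape)  # (1, 6, 8, 6, 2)
```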

-

mermaid.custom_pytorch_extensions.sel_fftn(dim)[source]¶ Selects between the GPU and CPU versions of the FFT.

Returns: function pointer

-

mermaid.custom_pytorch_extensions.sel_ifftn(dim)[source]¶ Selects between the CPU and GPU versions of the inverse FFT.

Returns: function pointer

-

class mermaid.custom_pytorch_extensions.FourierConvolution(complex_fourier_filter)[source]¶ pyTorch function to compute convolutions in the Fourier domain: f = g*h

-

ifftn= None¶ The filter in the Fourier domain

-

forward(input)[source]¶ Performs the Fourier-based filtering. The 3D CPU FFT is not implemented in fftn; to avoid fusing with the batch and channel dimensions, the 3D case is calculated in a loop. The 1D and 2D CPU cases work well because fft and fft2 are built in; similarly, the 1D, 2D, and 3D GPU FFTs are built in.

In the GPU implementation, the rfft is used for efficiency, which means the filter should be symmetric. In general, (input_real + input_img i)(filter_real + filter_img i) = (input_real*filter_real - input_img*filter_img) + (input_img*filter_real + input_real*filter_img)i. For a symmetric filter, filter_img = 0, which leaves input_real*filter_real + (input_img*filter_real)i, i.e., (a + bi)c = ac + bci.

Parameters: input – Image Returns: Filtered-image

-

backward(grad_output)[source]¶ Computes the gradient. The 3D CPU inverse FFT is not implemented in ifftn; to avoid fusing with the batch and channel dimensions, the 3D case is calculated in a loop. The 1D and 2D CPU cases work well because ifft and ifft2 are built in; similarly, the 1D, 2D, and 3D GPU FFTs are built in.

In the GPU implementation, the irfft is used for efficiency, which means the filter should be symmetric.

Parameters: grad_output – Gradient output of previous layer Returns: Gradient including the Fourier-based convolution

-

-

class mermaid.custom_pytorch_extensions.InverseFourierConvolution(complex_fourier_filter)[source]¶ pyTorch function to compute convolutions in the Fourier domain: f = g*h, but using the inverse of the smoothing filter.

-

ifftn= None¶ Fourier filter

-

alpha= None¶ Regularizing weight

-

-

mermaid.custom_pytorch_extensions.fourier_convolution(input, complex_fourier_filter)[source]¶ Convenience function for Fourier-based convolutions. Make sure to use this one (instead of directly using the class FourierConvolution). This ensures that each call generates its own instance, so that autograd works properly.

Parameters: - input – Input image

- complex_fourier_filter – Filter in Fourier domain as generated by create_complex_fourier_filter

Returns:

-

mermaid.custom_pytorch_extensions.inverse_fourier_convolution(input, complex_fourier_filter)[source]¶

-

class mermaid.custom_pytorch_extensions.GaussianFourierFilterGenerator(sz, spacing, nr_of_slots=1)[source]¶

sz= None¶ image size

-

spacing= None¶ image spacing

-

volumeElement= None¶ volume of pixel/voxel

-

dim= None¶ dimension

-

nr_of_slots= None¶ number of slots to hold Gaussians (to be able to support multi-Gaussian); this is related to storage

-

get_gaussian_xsqr_filters(sigmas)[source]¶ Returns complex Gaussian Fourier filters multiplied with x**2 for the given standard deviations. Only recomputes a filter if its sigma has changed.

Parameters: sigmas – standard deviations of the filters as a list Returns: the complex Gaussian Fourier filters as a list (in the same order as requested)

-

-

class mermaid.custom_pytorch_extensions.FourierGaussianConvolution(gaussian_fourier_filter_generator)[source]¶ pyTorch function to compute Gaussian convolutions in the Fourier domain: f = g*h. Also allows differentiating through the Gaussian standard deviation.

-

class mermaid.custom_pytorch_extensions.FourierSingleGaussianConvolution(gaussian_fourier_filter_generator, compute_std_gradient)[source]¶ pyTorch function to compute Gaussian convolutions in the Fourier domain: f = g*h. Also allows differentiating through the Gaussian standard deviation.

-

forward(input, sigma)[source]¶ Performs the Fourier-based filtering. The 3D CPU FFT is not implemented in fftn; to avoid fusing with the batch and channel dimensions, the 3D case is calculated in a loop. The 1D and 2D CPU cases work well because fft and fft2 are built in; similarly, the 1D, 2D, and 3D GPU FFTs are built in.

In the GPU implementation, the rfft is used for efficiency, which means the filter should be symmetric.

Parameters: input – Image Returns: Filtered image

-

backward(grad_output)[source]¶ Computes the gradient. The 3D CPU inverse FFT is not implemented in ifftn; to avoid fusing with the batch and channel dimensions, the 3D case is calculated in a loop. The 1D and 2D CPU cases work well because ifft and ifft2 are built in; similarly, the 1D, 2D, and 3D GPU FFTs are built in.

In the GPU implementation, the irfft is used for efficiency, which means the filter should be symmetric.

Parameters: grad_output – Gradient output of previous layer Returns: Gradient including the Fourier-based convolution

-

-

mermaid.custom_pytorch_extensions.fourier_single_gaussian_convolution(input, gaussian_fourier_filter_generator, sigma, compute_std_gradient)[source]¶ Convenience function for Fourier-based Gaussian convolutions. Make sure to use this one (instead of directly using the class FourierSingleGaussianConvolution). This ensures that each call generates its own instance, so that autograd works properly.

Parameters: - input – Input image

- gaussian_fourier_filter_generator – generator which will create Gaussian Fourier filter (and caches them)

- sigma – standard deviation for the Gaussian filter

- compute_std_gradient – if set to True computes the gradient otherwise sets it to 0

Returns:

-

class mermaid.custom_pytorch_extensions.FourierMultiGaussianConvolution(gaussian_fourier_filter_generator, compute_std_gradients, compute_weight_gradients)[source]¶ pyTorch function to compute multi-Gaussian convolutions in the Fourier domain: f = g*h. Also allows differentiating through the Gaussian standard deviations.

-

forward(input, sigmas, weights)[source]¶ Performs the Fourier-based filtering. The 3D CPU FFT is not implemented in fftn; to avoid fusing with the batch and channel dimensions, the 3D case is calculated in a loop. The 1D and 2D CPU cases work well because fft and fft2 are built in; similarly, the 1D, 2D, and 3D GPU FFTs are built in.

In the GPU implementation, the rfft is used for efficiency, which means the filter should be symmetric.

Parameters: input – Image Returns: Filtered image

-

backward(grad_output)[source]¶ Computes the gradient. The 3D CPU inverse FFT is not implemented in ifftn; to avoid fusing with the batch and channel dimensions, the 3D case is calculated in a loop. The 1D and 2D CPU cases work well because ifft and ifft2 are built in; similarly, the 1D, 2D, and 3D GPU FFTs are built in.

In the GPU implementation, the irfft is used for efficiency, which means the filter should be symmetric.

Parameters: grad_output – Gradient output of previous layer Returns: Gradient including the Fourier-based convolution

-

-

mermaid.custom_pytorch_extensions.fourier_multi_gaussian_convolution(input, gaussian_fourier_filter_generator, sigma, weights, compute_std_gradients=True, compute_weight_gradients=True)[source]¶ Convenience function for Fourier-based multi-Gaussian convolutions. Make sure to use this one (instead of directly using the class FourierMultiGaussianConvolution). This ensures that each call generates its own instance, so that autograd works properly.

Parameters: - input – Input image

- gaussian_fourier_filter_generator – generator which will create Gaussian Fourier filter (and caches them)

- sigma – standard deviations for the Gaussian filter (need to be positive)

- weights – weights for the multi-Gaussian kernel (need to sum up to one and need to be positive)

- compute_std_gradients – if set to True computes the gradients with respect to the standard deviation

- compute_weight_gradients – if set to True then gradients for weight are computed, otherwise they are replaced w/ zero

Returns:
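A multi-Gaussian kernel is a weighted sum of Gaussians, so in the Fourier domain the combined filter is the weighted sum of the individual Gaussian filters. The 1D NumPy sketch below illustrates this together with the stated constraints on the weights and standard deviations. The function names are hypothetical, standard deviations are measured in samples, and no gradients are computed, unlike the PyTorch function documented above.

```python
import numpy as np

def gaussian_fourier_filter(n, sigma):
    """Fourier coefficients of a unit-sum Gaussian centered at index 0 (FFT layout)."""
    k = np.fft.fftfreq(n, d=1.0 / n)  # integer offsets 0, 1, ..., -2, -1
    g = np.exp(-0.5 * (k / sigma) ** 2)
    return np.fft.fft(g / g.sum())

def multi_gaussian_smooth(signal, sigmas, weights):
    """Smooth a 1D signal with the kernel sum_i weights[i] * Gaussian(sigmas[i])."""
    weights = np.asarray(weights, dtype=float)
    if np.any(weights < 0) or not np.isclose(weights.sum(), 1.0):
        raise ValueError("weights need to be non-negative and sum to one")
    if any(s <= 0 for s in sigmas):
        raise ValueError("standard deviations need to be positive")
    combined = sum(w * gaussian_fourier_filter(len(signal), s) for w, s in zip(weights, sigmas))
    return np.real(np.fft.ifft(np.fft.fft(signal) * combined))

signal = np.zeros(32)
signal[10] = 1.0
smoothed = multi_gaussian_smooth(signal, sigmas=[1.0, 3.0], weights=[0.7, 0.3])
print(np.isclose(smoothed.sum(), signal.sum()))  # True: a unit-sum kernel preserves the total
```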

-

class mermaid.custom_pytorch_extensions.FourierSetOfGaussianConvolutions(gaussian_fourier_filter_generator, compute_std_gradients)[source]¶ pyTorch function to compute a set of Gaussian convolutions (as in the multi-Gaussian) in the Fourier domain: f = g*h. Also allows differentiating through the standard deviations. The output is not a single smoothed field, but the set of all of them. This can then be fed into a subsequent neural network for further processing.

-

forward(input, sigmas)[source]¶ Performs the Fourier-based filtering. The 3D CPU FFT is not implemented in fftn; to avoid fusing with the batch and channel dimensions, the 3D case is calculated in a loop. The 1D and 2D CPU cases work well because fft and fft2 are built in; similarly, the 1D, 2D, and 3D GPU FFTs are built in.

In the GPU implementation, the rfft is used for efficiency, which means the filter should be symmetric.

Parameters: input – Image Returns: Filtered image

-

backward(grad_output)[source]¶ Computes the gradient. The 3D CPU inverse FFT is not implemented in ifftn; to avoid fusing with the batch and channel dimensions, the 3D case is calculated in a loop. The 1D and 2D CPU cases work well because ifft and ifft2 are built in; similarly, the 1D, 2D, and 3D GPU FFTs are built in.

In the GPU implementation, the irfft is used for efficiency, which means the filter should be symmetric.

Parameters: grad_output – Gradient output of previous layer Returns: Gradient including the Fourier-based convolution

-

-

mermaid.custom_pytorch_extensions.fourier_set_of_gaussian_convolutions(input, gaussian_fourier_filter_generator, sigma, compute_std_gradients=False)[source]¶ Convenience function for Fourier-based convolutions with a set of Gaussians. Make sure to use this one (instead of directly using the class FourierSetOfGaussianConvolutions). This ensures that each call generates its own instance, so that autograd works properly.

Parameters: - input – Input image

- gaussian_fourier_filter_generator – generator which will create Gaussian Fourier filter (and caches them)

- sigma – standard deviations for the Gaussian filter (need to be positive)

- compute_std_gradients – if set to True then gradients for standard deviation are computed, otherwise they are replaced w/ zero

Returns:

-

mermaid.custom_pytorch_extensions.check_fourier_conv()[source]¶ Convenience function to check the gradient. Fails, as pytorch’s check appears to have difficulty

Returns: True if analytical and numerical gradient are the same

Todo

The current check seems to fail in pyTorch. However, the gradient appears to be correct. Potentially an issue with the numerical gradient approximation.

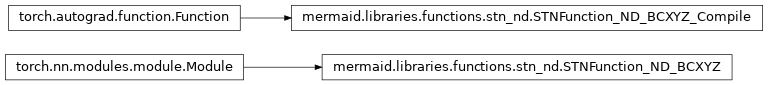

Spatial transforms¶

Spatial transform functions in 1D, 2D, and 3D.

Todo

Add CUDA implementation. Could be based on the existing 2D CUDA implementation.

-

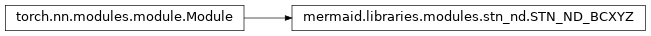

class mermaid.libraries.functions.stn_nd.STNFunction_ND_BCXYZ(spacing, zero_boundary=False, using_bilinear=True, using_01_input=True)[source]¶ Spatial transform function for 1D, 2D, and 3D. In BCXYZ format (this IS the format used in the current toolbox).

-

class mermaid.libraries.functions.stn_nd.STNFunction_ND_BCXYZ_Compile(spacing, zero_boundary=True)[source]¶ Spatial transform function for 1D, 2D, and 3D. In BCXYZ format (this IS the format used in the current toolbox).

TODO: The boundary issue is still present and is triggered at 1, which causes the boundary to shrink slightly. This could be solved by a stricter check when the boundary is 1. The issue has a large influence in the low-resolution case and also affects the high-resolution case through upsampling of the map. It is currently set aside.

-

backward_stn(input1, input2, grad_input1, grad_input2, grad_output, ndim, device_c, use_cuda=False, zero_boundary=True)[source]¶

This package implements spatial transformations in 1D, 2D, and 3D. This is needed for the map-based registrations for example.

Todo

Implement CUDA version. There is already a 2D CUDA version available (in the source directory here). But it needs to be extended to 1D and 3D. We also make use of a different convention for images which needs to be accounted for, as we use the BxCxXxYxZ image format and BxdimxXxYxZ for the maps.