Registration models

This section describes the most important registration modules of mermaid. Together, these modules

- generate a registration model (see Model factory),

- specify the dynamic forward models, i.e., what gets integrated (see Forward models),

- allow evaluation of the forward models, e.g., when the parameters are already given or when integrating into deep learning models (see Model evaluation), and

- implement the different registration models (see Registration networks).

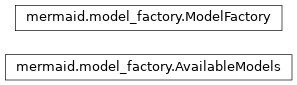

Model factory

The model factory provides convenience functionality to instantiate different registration models.

Package to quickly instantiate registration models by name.

class mermaid.model_factory.AvailableModels

models: dictionary defining all the models

class mermaid.model_factory.ModelFactory(sz_sim, spacing_sim, sz_model, spacing_model)

Factory class to instantiate registration models.

sz_sim: size of the image (BxCxXxYxZ format) as used in the similarity-measure part of the loss function

spacing_sim: spatial spacing as used in the similarity measure of the loss function

sz_model: size of the parameters (BxCxXxYxZ format) as used in the model itself (and possibly in the loss function for regularization)

spacing_model: spatial spacing as used in the model itself (and possibly in the loss function for regularization)

dim: spatial dimension

get_models()

Returns all available models as a dictionary whose keys are the model names and whose values are tuples of the form (networkclass, lossclass, usesMap, explanation_string).

Returns: the model dictionary

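add_model(modelName, networkClass, lossClass, useMap, modelDescription='custom model')

Allows quickly adding a new model.

Parameters: - modelName – name for the model

- networkClass – network class defining the model

- lossClass – loss class used by the model

- useMap – whether the model is map-based (stored as the usesMap entry of the model dictionary)

- modelDescription – description of the model (defaults to 'custom model')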

print_available_models()

Prints the models that are available and can be created with create_registration_model.

create_registration_model(modelName, params, compute_inverse_map=False)

Performs the actual model creation, including the loss function.

Parameters: - modelName – name of the model to be created

- params – parameter dictionary of type ParameterDict

- compute_inverse_map – for a map-based model, if this is turned on then the inverse map is computed on the fly

Returns: a two-tuple: model, loss
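The model dictionary stores one (networkclass, lossclass, usesMap, explanation_string) tuple per name, and creation returns a (model, loss) pair. The following minimal sketch illustrates that registry pattern in plain Python. ToyRegistry, DummyNet, and DummyLoss are hypothetical stand-ins, not mermaid classes, and the constructor calls are simplified (the real factory also passes sizes, spacings, and a ParameterDict).

```python
class DummyNet:
    """Hypothetical stand-in for a registration network class."""
    def __init__(self, params):
        self.params = params


class DummyLoss:
    """Hypothetical stand-in for the matching loss class."""
    def __init__(self, params):
        self.params = params


class ToyRegistry:
    """Illustrates the (networkclass, lossclass, usesMap, description) layout."""

    def __init__(self):
        self.models = {}

    def add_model(self, model_name, network_class, loss_class, use_map,
                  model_description="custom model"):
        # Store the same four entries that get_models() exposes per model.
        self.models[model_name] = (network_class, loss_class, use_map, model_description)

    def get_models(self):
        return self.models

    def create_registration_model(self, model_name, params):
        if model_name not in self.models:
            raise ValueError(f"unknown model: {model_name}")
        network_class, loss_class, _use_map, _ = self.models[model_name]
        # Return a two-tuple (model, loss).
        return network_class(params), loss_class(params)


registry = ToyRegistry()
registry.add_model("my_svf_map", DummyNet, DummyLoss, True, "toy map-based model")
model, loss = registry.create_registration_model("my_svf_map", params={"sigma": 0.1})
```

Keeping the network and loss classes side by side in one entry is what lets a single name produce a consistent model/loss pair.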

-

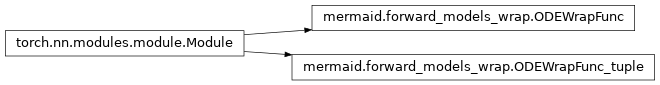

Forward models¶

The forward models are implementations of the dynamic equations for the registration models.

class mermaid.forward_models_wrap.ODEWrapFunc(nested_class, has_combined_input=False, pars=None, variables_from_optimizer=None, extra_var=None, dim_info=None)

A wrapper for tensor-based torchdiffeq input.

nested_class: the model to be integrated

pars: ParameterDict, settings passed to the integrator

variables_from_optimizer: allows passing variables (as a dict from the optimizer; e.g., the current iteration)

extra_var: extra variable

has_combined_input: if True, the model receives combined input in x (e.g., the EPDiff* equations); otherwise, the model has the two individual inputs x and u (e.g., the advect* equations)

dim_info: the input x can be a tensor formed by concatenating several variables along the channel dimension; dim_info is a list giving the dimension of each variable

forward(t, y)

Defines the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for the forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this, since the former takes care of running the registered hooks while the latter silently ignores them.

class mermaid.forward_models_wrap.ODEWrapFunc_tuple(nested_class, has_combined_input=False, pars=None, variables_from_optimizer=None, extra_var=None, dim_info=None)

A wrapper for tuple-based torchdiffeq input.

nested_class: the model to be integrated

pars: ParameterDict, settings passed to the integrator

variables_from_optimizer: allows passing variables (as a dict from the optimizer; e.g., the current iteration)

extra_var: extra variable

has_combined_input: if True, the model receives combined input in x (e.g., the EPDiff* equations); otherwise, the model has the two individual inputs x and u (e.g., the advect* equations)

dim_info: not used in the tuple version

forward(t, y)

Defines the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for the forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this, since the former takes care of running the registered hooks while the latter silently ignores them.


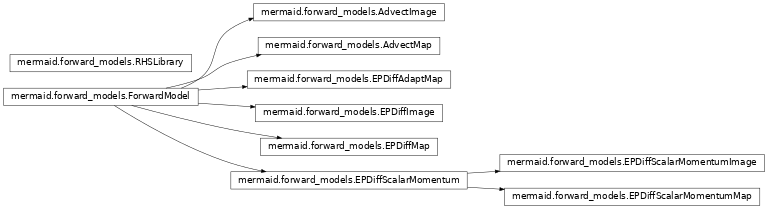

Package defining various dynamic forward models as well as convenience methods to generate the right-hand sides (RHS) of the related partial differential equations.

Currently, the following forward models are implemented:

- An advection equation for images

- An advection equation for maps

- The EPDiff equation parameterized using the vector-valued momentum for images

- The EPDiff equation parameterized using the vector-valued momentum for maps

- The EPDiff equation parameterized using the scalar-valued momentum for images

- The EPDiff equation parameterized using the scalar-valued momentum for maps

The images are expected to be tensors of dimension BxCxXxYxZ (or BxCxX in 1D and BxCxXxY in 2D), where B is the batch size, C the number of channels, and X, Y, and Z are the spatial coordinate indices.

Furthermore, the following RHSs are provided:

- Image advection

- Map advection

- Scalar conservation law

- EPDiff

class mermaid.forward_models.RHSLibrary(spacing, use_neumann_BC_for_map=False)

Convenience class to quickly generate various right-hand sides (RHSs) of popular partial differential equations. In this way new forward models can be written with minimal code duplication.

spacing: spatial spacing

spacing_min: minimum of the spacing

fdt_ne: torch finite-differencing support with zero Neumann boundary conditions

fdt_le: torch finite-differencing support with linear extrapolation

fdt_di: torch finite-differencing support with zero Dirichlet boundary conditions

dim: spatial dimension

use_neumann_BC_for_map: if True, zero Neumann boundary conditions are also used for the evolution of the map; if False, linear extrapolation is used
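The choice between the three boundary treatments matters most for maps. The following 1D sketch pads a signal with one ghost cell per side under each convention and takes central differences; the ghost-cell formulas are standard textbook choices assumed for illustration, not a transcription of fdt_ne, fdt_le, or fdt_di. For an identity map, only linear extrapolation reproduces the exact derivative of 1 at the boundary, which is presumably why it is the default for map evolution.

```python
def pad(f, bc):
    """Add one ghost cell per side according to the boundary condition bc."""
    if bc == "neumann_zero":            # zero derivative: copy the boundary value
        left, right = f[0], f[-1]
    elif bc == "linear_extrapolation":  # continue the boundary slope
        left, right = 2 * f[0] - f[1], 2 * f[-1] - f[-2]
    elif bc == "dirichlet_zero":        # value is zero outside the domain
        left, right = 0.0, 0.0
    else:
        raise ValueError(f"unknown boundary condition: {bc}")
    return [left] + list(f) + [right]


def central_diff(f, h, bc):
    """Central differences (f[i+1] - f[i-1]) / (2h) using the padded signal."""
    g = pad(f, bc)
    return [(g[i + 2] - g[i]) / (2 * h) for i in range(len(f))]


h = 0.25
phi = [i * h for i in range(5)]  # 1D identity map on [0, 1]
for bc in ("neumann_zero", "linear_extrapolation", "dirichlet_zero"):
    print(bc, central_diff(phi, h, bc))
```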

rhs_advect_image_multiNC(I, v)

Advects a batch of images, which can be multi-channel. The expected image format is BxCxXxYxZ, where B is the number of images (batch size), C the number of channels per image, and X, Y, Z are the spatial coordinates (X only in 1D; X,Y only in 2D).

\(-\nabla I^Tv\)

Parameters: - I – image batch BxCIxXxYxZ

- v – velocity fields (one velocity field per image) BxCxXxYxZ

Returns: the RHS of the advection equations involved, BxCxXxYxZ
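To make the sign convention of \(-\nabla I^Tv\) concrete, here is a 1D pure-Python sketch with lists instead of torch tensors and the batch dimension dropped. The same velocity field is applied to every channel of the image. The zero-Neumann central differences are an assumption for illustration; RHSLibrary's own finite-difference operators may differ in detail.

```python
def ddx_neumann(f, h):
    """Central differences; ghost cells copy the boundary value (zero Neumann)."""
    g = [f[0]] + list(f) + [f[-1]]
    return [(g[i + 2] - g[i]) / (2.0 * h) for i in range(len(f))]


def rhs_advect_image_1d(I, v, h):
    """-dI/dx * v for every channel of a 1D multi-channel image I (C x X)."""
    return [[-d * vi for d, vi in zip(ddx_neumann(channel, h), v)] for channel in I]


I = [[0.0, 1.0, 2.0, 3.0, 4.0]]  # one channel, a linear ramp
v = [2.0] * 5                    # constant velocity to the right
rhs = rhs_advect_image_1d(I, v, h=1.0)
```

A positive velocity over an increasing ramp gives a negative RHS: the image values decrease locally as the ramp is transported to the right.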

rhs_scalar_conservation_multiNC(I, v)

Scalar conservation law for a batch of images, which can be multi-channel. The expected image format is BxCxXxYxZ, where B is the number of images (batch size), C the number of channels per image, and X, Y, Z are the spatial coordinates (X only in 1D; X,Y only in 2D).

\(-div(Iv)\)

Parameters: - I – image batch BxCIxXxYxZ

- v – velocity fields (one velocity field per image) BxCxXxYxZ

Returns: the RHS of the scalar conservation law equations involved, BxCxXxYxZ
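Since \(div(Iv) = \nabla I^Tv + I\,div(v)\), the conservation law differs from plain advection whenever the velocity is not divergence-free. The 1D sketch below (lists, zero-Neumann central differences as an assumed discretization) shows a constant image that advection leaves unchanged but the conservation law does not, because the flow expands the domain.

```python
def ddx_neumann(f, h):
    """Central differences; ghost cells copy the boundary value (zero Neumann)."""
    g = [f[0]] + list(f) + [f[-1]]
    return [(g[i + 2] - g[i]) / (2.0 * h) for i in range(len(f))]


h = 1.0
I = [1.0] * 5                   # constant image
v = [0.0, 1.0, 2.0, 3.0, 4.0]   # expanding velocity field, dv/dx > 0

# Scalar conservation law: -d(I v)/dx
rhs_cons = [-d for d in ddx_neumann([Ii * vi for Ii, vi in zip(I, v)], h)]

# Plain advection for comparison: -(dI/dx) v, which vanishes for a constant image
rhs_adv = [-d * vi for d, vi in zip(ddx_neumann(I, h), v)]
```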

rhs_lagrangian_evolve_map_multiNC(phi, v)

Evolves a set of N maps (for N images). The expected format is BxCxXxYxZ, where B is the number of images/maps (batch size), C the number of channels per map (here the spatial dimension for the map coordinate functions), and X, Y, Z are the spatial coordinates (X only in 1D; X,Y only in 2D). This is used to evolve the map going from the source to the target image. It requires interpolation and should therefore, if at all possible, not be used as part of an optimization.

The idea behind using this to compute the inverse map is that the map is defined in the source space and records where each point moves to (in contrast to the target space, where it records where each point comes from). In this situation we only need to evaluate the velocity at the current location of each point and accumulate it over the time steps. Since the advection moves the image (or the map-based image) by a step of v, and v is shared by the different coordinates, it is safe to compute the map this way.

\(v\circ\phi\)

Parameters: - phi – map batch BxCxXxYxZ

- v – velocity fields (one velocity field per map) BxCxXxYxZ

Returns: the RHS of the evolution equations involved, BxCxXxYxZ
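The RHS \(v\circ\phi\) means each map point moves with the velocity sampled at its current position, which is where the interpolation comes in. The sketch below takes one forward-Euler step of \(\phi_t = v\circ\phi\) in 1D with linear interpolation; the grid origin at 0, the clamping outside the domain, and the Euler step are assumptions for illustration.

```python
import math


def interp_linear(values, h, x):
    """Linearly interpolate grid values (spacing h, origin 0) at x; clamps outside the domain."""
    n = len(values)
    idx = min(max(x / h, 0.0), n - 1.0)
    i0 = min(int(math.floor(idx)), n - 2)
    w = idx - i0
    return (1.0 - w) * values[i0] + w * values[i0 + 1]


def lagrangian_euler_step(phi, v, h, dt):
    """One forward-Euler step of d(phi)/dt = v(phi): each point moves with the velocity at its position."""
    return [p + dt * interp_linear(v, h, p) for p in phi]


h = 0.25
grid = [i * h for i in range(5)]
v = list(grid)       # velocity v(x) = x
phi = list(grid)     # start from the identity map
phi1 = lagrangian_euler_step(phi, v, h, dt=0.1)
```

Because v(x) = x is linear, interpolation is exact here and every point moves to 1.1 times its position.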

rhs_advect_map_multiNC(phi, v)

Advects a set of N maps (for N images). The expected format is BxCxXxYxZ, where B is the number of images/maps (batch size), C the number of channels per map (here the spatial dimension for the map coordinate functions), and X, Y, Z are the spatial coordinates (X only in 1D; X,Y only in 2D).

\(-D\phi v\)

Parameters: - phi – map batch BxCxXxYxZ

- v – velocity fields (one velocity field per map) BxCxXxYxZ

Returns: the RHS of the advection equations involved, BxCxXxYxZ
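In contrast to the Lagrangian evolution, the Eulerian RHS \(-D\phi v\) only needs finite differences on the grid, no interpolation. A quick sanity check: for the identity map \(D\phi\) is the identity, so the RHS is simply \(-v\). The 1D sketch below assumes linear-extrapolation ghost cells (the documented default when use_neumann_BC_for_map is False), which keep the derivative exact at the boundary.

```python
def ddx_linear_extrapolation(f, h):
    """Central differences with linearly extrapolated ghost cells."""
    g = [2 * f[0] - f[1]] + list(f) + [2 * f[-1] - f[-2]]
    return [(g[i + 2] - g[i]) / (2.0 * h) for i in range(len(f))]


def rhs_advect_map_1d(phi, v, h):
    """-(d phi/dx) v for a 1D map phi."""
    return [-d * vi for d, vi in zip(ddx_linear_extrapolation(phi, h), v)]


h = 0.25
phi = [i * h for i in range(5)]   # identity map
v = [0.1, 0.2, 0.3, 0.2, 0.1]
rhs = rhs_advect_map_1d(phi, v, h)
```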

rhs_epdiff_multiNC(m, v)

Computes the right-hand side of the EPDiff equation for N momenta (for N images). The expected format is BxCxXxYxZ, where B is the number of momenta (batch size), C the number of channels per momentum (here the spatial dimension of the momenta), and X, Y, Z are the spatial coordinates (X only in 1D; X,Y only in 2D).

This is a new version in which the batch is no longer computed separately for each element.

\(-(div(m_1v),...,div(m_dv))^T-(Dv)^Tm\)

Parameters: - m – momenta batch BxCxXxYxZ

- v – velocity fields (one velocity field per momentum) BxCxXxYxZ

Returns: the RHS of the EPDiff equations involved, BxCxXxYxZ
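In 1D (d = 1) the EPDiff right-hand side reduces to \(-\frac{d}{dx}(m v) - \frac{dv}{dx}\,m\). The sketch below evaluates this with lists and zero-Neumann central differences; the discretization is an assumption, and in higher dimensions the second term becomes \(\sum_j (\partial v_j/\partial x_i)\,m_j\) for each component i.

```python
def ddx_neumann(f, h):
    """Central differences; ghost cells copy the boundary value (zero Neumann)."""
    g = [f[0]] + list(f) + [f[-1]]
    return [(g[i + 2] - g[i]) / (2.0 * h) for i in range(len(f))]


def rhs_epdiff_1d(m, v, h):
    """1D EPDiff RHS: -d(m v)/dx - (dv/dx) m."""
    div_mv = ddx_neumann([mi * vi for mi, vi in zip(m, v)], h)
    dv = ddx_neumann(v, h)
    return [-a - b * mi for a, b, mi in zip(div_mv, dv, m)]


m = [1.0] * 5                  # constant momentum
v = [0.0, 1.0, 2.0, 3.0, 4.0]  # velocity (in EPDiff it would come from v = K m)
rhs = rhs_epdiff_1d(m, v, h=1.0)
```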

rhs_adapt_epdiff_wkw_multiNC(m, v, w, sm_wm, smoother)

Computes the right-hand side of the EPDiff equation for N momenta (for N images). The expected format is BxCxXxYxZ, where B is the number of momenta (batch size), C the number of channels per momentum (here the spatial dimension of the momenta), and X, Y, Z are the spatial coordinates (X only in 1D; X,Y only in 2D).

This is a new version in which the batch is no longer computed separately for each element.

\(-(div(m_1v),...,div(m_dv))^T-(Dv)^Tm\)

Parameters: - m – momenta batch BxCxXxYxZ

- v – velocity fields (one velocity field per momentum) BxCxXxYxZ

Returns: the RHS of the EPDiff equations involved, BxCxXxYxZ

class mermaid.forward_models.ForwardModel(sz, spacing, params=None)

Abstract forward model class. Should never be instantiated. Derived classes require the definition of f(self,t,x,u,pars) and u(self,t,pars). These functions are used for the integration \(x'(t) = f(t,x(t),u(t))\).

dim: spatial dimension

spacing: spatial spacing

sz: image size (BxCxXxYxZ)

params: ParameterDict instance holding the parameters

rhs: RHS library support

f(t, x, u, pars, variables_from_optimizer=None)

Function to be integrated

Parameters: - t – time

- x – state

- u – input

- pars – optional parameters

- variables_from_optimizer – variables that can be passed from the optimizer

Returns: the function value, should return a list (to support easy concatenations of states)
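The contract "derived classes define f and u, and the integrator solves \(x'(t) = f(t,x(t),u(t))\)" can be illustrated with a toy model and a classical fourth-order Runge-Kutta loop. ToyForwardModel, ScaledDecay, and integrate_rk4 are hypothetical names, and RK4 is chosen here only for illustration; mermaid's integrator is configured through its parameters. As in the documentation, f returns a list so that several state variables can be concatenated.

```python
import math
from abc import ABC, abstractmethod


class ToyForwardModel(ABC):
    """Hypothetical analogue of ForwardModel: derived classes define f and u."""

    @abstractmethod
    def f(self, t, x, u, pars=None):
        """Right-hand side of x'(t) = f(t, x(t), u(t)); returns a list of states."""

    def u(self, t, pars=None):
        """External input; None if the model has none."""
        return None


class ScaledDecay(ToyForwardModel):
    """x'(t) = -u x(t) with a constant external input u (stand-in for a velocity)."""

    def __init__(self, rate):
        self.rate = rate

    def u(self, t, pars=None):
        return self.rate

    def f(self, t, x, u, pars=None):
        return [-u * x[0]]


def integrate_rk4(model, x0, t_from, t_to, n_steps):
    """Classical fourth-order Runge-Kutta integration of model.f with input model.u."""
    dt = (t_to - t_from) / n_steps
    t, x = t_from, list(x0)

    def rhs(tt, xx):
        return model.f(tt, xx, model.u(tt))

    for _ in range(n_steps):
        k1 = rhs(t, x)
        k2 = rhs(t + dt / 2, [xi + dt / 2 * ki for xi, ki in zip(x, k1)])
        k3 = rhs(t + dt / 2, [xi + dt / 2 * ki for xi, ki in zip(x, k2)])
        k4 = rhs(t + dt, [xi + dt * ki for xi, ki in zip(x, k3)])
        x = [xi + dt / 6 * (a + 2 * b + 2 * c + d)
             for xi, a, b, c, d in zip(x, k1, k2, k3, k4)]
        t += dt
    return x


x1 = integrate_rk4(ScaledDecay(rate=1.0), [1.0], 0.0, 1.0, n_steps=10)  # close to exp(-1)
```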

class mermaid.forward_models.AdvectMap(sz, spacing, params=None, compute_inverse_map=False)

Forward model to advect an n-D map using the transport equation \(\Phi_t + D\Phi v = 0\). Here v is treated as an external argument and \(\Phi\) is the state.

compute_inverse_map: if True, the inverse map is computed on the fly for a map-based solution

u(t, pars, variables_from_optimizer=None)

External input, holding the velocity field

Parameters: - t – time (ignored; not time-dependent)

- pars – assumes an n-D velocity field is passed as the only input argument

- variables_from_optimizer – variables that can be passed from the optimizer

Returns: simply returns this velocity field

f(t, x, u, pars=None, variables_from_optimizer=None)

Function to be integrated, i.e., the right-hand side of the transport equation:

\(-D\phi v\)

Parameters: - t – time (ignored; not time-dependent)

- x – state, here the map, \(\Phi\), itself (assumes a 3D-5D array; [nrI,0,:,:] are the x-coordinates, [nrI,1,:,:] the y-coordinates, …)

- u – external input, will be the velocity field here

- pars – ignored (does not expect any additional inputs)

- variables_from_optimizer – variables that can be passed from the optimizer

Returns: right hand side [phi]

class mermaid.forward_models.AdvectImage(sz, spacing, params=None)

Forward model to advect an image using the transport equation \(I_t + \nabla I^Tv = 0\). Here v is treated as an external argument and I is the state.

u(t, pars, variables_from_optimizer=None)

External input, holding the velocity field

Parameters: - t – time (ignored; not time-dependent)

- pars – assumes an n-D velocity field is passed as the only input argument

- variables_from_optimizer – variables that can be passed from the optimizer

Returns: simply returns this velocity field

f(t, x, u, pars=None, variables_from_optimizer=None)

Function to be integrated, i.e., the right-hand side of the transport equation: \(-\nabla I^T v\)

Parameters: - t – time (ignored; not time-dependent)

- x – state, here the image, I, itself (supports multiple images and channels)

- u – external input, will be the velocity field here

- pars – ignored (does not expect any additional inputs)

- variables_from_optimizer – variables that can be passed from the optimizer

Returns: right hand side [I]

class mermaid.forward_models.EPDiffImage(sz, spacing, smoother, params=None)

Forward model for the EPDiff equation. The state is the momentum, m, and the image, I:

\((m_1,...,m_d)^T_t = -(div(m_1v),...,div(m_dv))^T-(Dv)^Tm\)

\(v=Km\)

\(I_t+\nabla I^Tv=0\)

f(t, x, u, pars=None, variables_from_optimizer=None)

Function to be integrated, i.e., the right-hand side of the EPDiff equation: \(-(div(m_1v),...,div(m_dv))^T-(Dv)^Tm\)

\(-\nabla I^Tv\)

Parameters: - t – time (ignored; not time-dependent)

- x – state, here the vector momentum, m, and the image, I

- u – ignored, no external input

- pars – ignored (does not expect any additional inputs)

- variables_from_optimizer – variables that can be passed from the optimizer

Returns: right hand side [m,I]
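The relation \(v=Km\) turns the momentum into a smooth velocity by applying the smoother K. The sketch below assumes K is a normalized 1D Gaussian convolution with replicate (zero-Neumann) boundaries; mermaid's smoother is configurable, so the kernel, its truncation radius, and the boundary handling here are illustrative assumptions. A single momentum spike is spread into a symmetric bump whose total mass is preserved.

```python
import math


def gaussian_kernel(sigma, h, radius):
    """Normalized, truncated 1D Gaussian with standard deviation sigma on a grid of spacing h."""
    w = [math.exp(-((k * h) ** 2) / (2.0 * sigma ** 2)) for k in range(-radius, radius + 1)]
    s = sum(w)
    return [wi / s for wi in w]


def smooth(m, kernel):
    """v = K m: discrete convolution with replicate (zero-Neumann) boundary handling."""
    r = len(kernel) // 2
    n = len(m)
    out = []
    for i in range(n):
        acc = 0.0
        for k, wk in enumerate(kernel):
            j = min(max(i + k - r, 0), n - 1)  # clamp index at the boundary
            acc += wk * m[j]
        out.append(acc)
    return out


h = 0.1
m = [0.0] * 21
m[10] = 1.0  # momentum concentrated at a single grid point
v = smooth(m, gaussian_kernel(sigma=0.2, h=h, radius=6))
```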

class mermaid.forward_models.EPDiffMap(sz, spacing, smoother, params=None, compute_inverse_map=False)

Forward model for the EPDiff equation. The state is the momentum, m, and the transform, \(\phi\) (mapping the source image to the target image).

\((m_1,...,m_d)^T_t = -(div(m_1v),...,div(m_dv))^T-(Dv)^Tm\)

\(v=Km\)

\(\phi_t+D\phi v=0\)

compute_inverse_map: if True, the inverse map is computed on the fly for a map-based solution

f(t, x, u, pars=None, variables_from_optimizer=None)

Function to be integrated, i.e., the right-hand side of the EPDiff equation: \(-(div(m_1v),...,div(m_dv))^T-(Dv)^Tm\)

\(-D\phi v\)

Parameters: - t – time (ignored; not time-dependent)

- x – state, here the vector momentum, m, and the map, \(\phi\)

- u – ignored, no external input

- pars – ignored (does not expect any additional inputs)

- variables_from_optimizer – variables that can be passed from the optimizer

Returns: right hand side [m,phi]

class mermaid.forward_models.EPDiffAdaptMap(sz, spacing, smoother, params=None, compute_inverse_map=False, update_sm_by_advect=True, update_sm_with_interpolation=True, compute_on_initial_map=True)

Forward model for the EPDiff equation. The state is the momentum, m, and the transform, \(\phi\) (mapping the source image to the target image).

\((m_1,...,m_d)^T_t = -(div(m_1v),...,div(m_dv))^T-(Dv)^Tm\)

\(v=Km\)

\(\phi_t+D\phi v=0\)

compute_inverse_map: if True, the inverse map is computed on the fly for a map-based solution

embedded_smoother: if set, only the penalty of the first step is taken as the total penalty; otherwise the penalty is accumulated

f(t, x, u, pars=None, variables_from_optimizer=None)

Function to be integrated, i.e., the right-hand side of the EPDiff equation: \(-(div(m_1v),...,div(m_dv))^T-(Dv)^Tm\)

\(-D\phi v\)

Parameters: - t – time (ignored; not time-dependent)

- x – state, here the vector momentum, m, and the map, \(\phi\)

- u – ignored, no external input

- pars – ignored (does not expect any additional inputs)

- variables_from_optimizer – variables that can be passed from the optimizer

Returns: right hand side [m,phi]

class mermaid.forward_models.EPDiffScalarMomentum(sz, spacing, smoother, params)

Base class for scalar-momentum EPDiff solutions. Defines a smoother that can be commonly used.

class mermaid.forward_models.EPDiffScalarMomentumImage(sz, spacing, smoother, params=None)

Forward model for the scalar-momentum EPDiff equation. The state is the scalar momentum, lam, and the image, I:

\((m_1,...,m_d)^T_t = -(div(m_1v),...,div(m_dv))^T-(Dv)^Tm\)

\(v=Km\)

\(m=\lambda\nabla I\)

\(I_t+\nabla I^Tv=0\)

\(\lambda_t + div(\lambda v)=0\)

f(t, x, u, pars=None, variables_from_optimizer=None)

Function to be integrated, i.e., the right-hand side of the EPDiff equation:

\(-(div(m_1v),...,div(m_dv))^T-(Dv)^Tm\)

\(-\nabla I^Tv\)

\(-div(\lambda v)\)

Parameters: - t – time (ignored; not time-dependent)

- x – state, here the scalar momentum, lam, and the image, I, itself

- u – no external input

- pars – ignored (does not expect any additional inputs)

- variables_from_optimizer – variables that can be passed from the optimizer

Returns: right hand side [lam,I]
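In the scalar-momentum parameterization the vector momentum is recovered as \(m=\lambda\nabla I\), so momentum can only be nonzero where the image has gradients. The 1D single-channel sketch below (lists, zero-Neumann central differences) is an illustrative assumption; how multi-channel images are combined is not covered here.

```python
def ddx_neumann(f, h):
    """Central differences; ghost cells copy the boundary value (zero Neumann)."""
    g = [f[0]] + list(f) + [f[-1]]
    return [(g[i + 2] - g[i]) / (2.0 * h) for i in range(len(f))]


def vector_momentum_from_scalar_1d(lam, I, h):
    """m = lambda * dI/dx for a single-channel 1D image."""
    return [li * d for li, d in zip(lam, ddx_neumann(I, h))]


I = [0.0, 0.0, 1.0, 1.0, 1.0]   # step edge
lam = [2.0] * 5                  # constant scalar momentum
m = vector_momentum_from_scalar_1d(lam, I, h=1.0)
```

Even though lam is nonzero everywhere, the resulting momentum is concentrated at the edge of the step.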

class mermaid.forward_models.EPDiffScalarMomentumMap(sz, spacing, smoother, params=None, compute_inverse_map=False)

Forward model for the scalar-momentum EPDiff equation. The state is the scalar momentum, lam, the image, I, and the transform, \(\Phi\):

\((m_1,...,m_d)^T_t = -(div(m_1v),...,div(m_dv))^T-(Dv)^Tm\)

\(v=Km\)

\(m=\lambda\nabla I\)

\(I_t+\nabla I^Tv=0\)

\(\lambda_t + div(\lambda v)=0\)

\(\Phi_t+D\Phi v=0\)

compute_inverse_map: if True, the inverse map is computed on the fly for a map-based solution

f(t, x, u, pars=None, variables_from_optimizer=None)

Function to be integrated, i.e., the right-hand side of the EPDiff equation:

\(-(div(m_1v),...,div(m_dv))^T-(Dv)^Tm\)

\(-\nabla I^Tv\)

\(-div(\lambda v)\)

\(-D\Phi v\)

Parameters: - t – time (ignored; not time-dependent)

- x – state, here the scalar momentum, lam, the image, I, and the transform, \(\phi\)

- u – ignored, no external input

- pars – ignored (does not expect any additional inputs)

- variables_from_optimizer – variables that can be passed from the optimizer

Returns: right hand side [lam,I,phi]

Model evaluation

Given the registration parameters, the model evaluation module evaluates a given model. That is, it sets up and integrates the forward models. This also makes it easy to combine the forward models with deep learning approaches.

mermaid.model_evaluation.evaluate_model(ISource_in, ITarget_in, sz, spacing, model_name=None, use_map=None, compute_inverse_map=False, map_low_res_factor=None, compute_similarity_measure_at_low_res=None, spline_order=None, individual_parameters=None, shared_parameters=None, params=None, extra_info=None, visualize=True, visual_param=None, given_weight=False)

Todo: support initial maps which are not the identity.

Parameters: - ISource_in – source image (BxCxXxYxZ format)

- ITarget_in – target image (BxCxXxYxZ format)

- sz – size of the images (BxCxXxYxZ format)

- spacing – spacing for the images

- model_name – name of the desired model (string)

- use_map – if set to True then map-based mode is used

- compute_inverse_map – if set to True the inverse map will be computed

- map_low_res_factor – if set to None then computations will be at full resolution, otherwise at a fraction of the resolution

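- compute_similarity_measure_at_low_res – if set to True the similarity measure is also evaluated at low resolution (otherwise at full resolution)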

- spline_order – desired spline order for the sampler

- individual_parameters – individual registration parameters

- shared_parameters – shared registration parameters

- params – parameter dictionary (of model_dictionary type) which configures the model

- visualize – if set to True results will be visualized

Returns: a tuple (I_warped, phi, phi_inverse, model_dictionary), where \(I_{warped} = I_{source}\circ\phi\) and phi_inverse is the inverse of phi; model_dictionary contains various intermediate results

mermaid.model_evaluation.evaluate_model_low_level_interface(model, I_source, opt_variables=None, use_map=False, initial_map=None, compute_inverse_map=False, initial_inverse_map=None, map_low_res_factor=None, sampler=None, low_res_spacing=None, spline_order=1, low_res_I_source=None, low_res_initial_map=None, low_res_initial_inverse_map=None, compute_similarity_measure_at_low_res=False)

Evaluates a registration model. This is the core functionality for the optimizer. Use evaluate_model for a convenience implementation that recomputes the settings on the fly.

Parameters: - model – registration model

- I_source – source image (may not be used for map-based approaches)

- opt_variables – dictionary to be passed to an optimizer or, here, to the evaluation routine (e.g., {'iter': self.iter_count, 'epoch': self.current_epoch})

- use_map – if set to True then map-based mode is used

- initial_map – initial full-resolution map (will in most cases be the identity)

- compute_inverse_map – if set to True the inverse map will be computed

- initial_inverse_map – initial inverse map (will in most cases be the identity)

- map_low_res_factor – if set to None then computations will be at full resolution, otherwise at a fraction of the resolution

- sampler – sampler which takes care of upsampling maps from their low-resolution variants (when map_low_res_factor<1.)

- low_res_spacing – spacing of the low res map/image

- spline_order – desired spline order for the sampler

- low_res_I_source – low resolution source image

- low_res_initial_map – low resolution version of the initial map

- low_res_initial_inverse_map – low resolution version of the initial inverse map

- compute_similarity_measure_at_low_res – if set to True the similarity measure is also evaluated at low resolution (otherwise at full resolution)

Returns: a tuple (I_warped, phi, phi_inverse), where \(I_{warped} = I_{source}\circ\phi\) and phi_inverse is the inverse of phi

IMPORTANT: phi is the map that maps from the source to the target image; I_warped is None for a map-based solution, phi is None for an image-based solution, and phi_inverse is None if the inverse is not computed.
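For map-based solutions the warped image is obtained by pulling the source image back through the map, \(I_{warped}(x) = I_{source}(\phi(x))\). The 1D sketch below does this with linear interpolation, the analogue of spline_order=1; the grid origin at 0 and clamping of out-of-domain coordinates are assumptions, and mermaid's own sampler may treat coordinates and boundaries differently.

```python
import math


def interp_linear(values, h, x):
    """Linearly interpolate grid values (spacing h, origin 0) at x; clamps outside the domain."""
    n = len(values)
    idx = min(max(x / h, 0.0), n - 1.0)
    i0 = min(int(math.floor(idx)), n - 2)
    w = idx - i0
    return (1.0 - w) * values[i0] + w * values[i0 + 1]


def warp_image_1d(I_source, phi, h):
    """I_warped = I_source o phi: sample the source image at the mapped positions."""
    return [interp_linear(I_source, h, p) for p in phi]


h = 0.25
grid = [i * h for i in range(5)]
I_source = [0.0, 10.0, 20.0, 30.0, 40.0]
phi = [x + 0.25 for x in grid]   # every point looks one grid cell to the right
warped = warp_image_1d(I_source, phi, h)
```

A map that points one cell to the right makes the image content appear shifted to the left, which is the usual source of sign confusion between maps and displacements.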

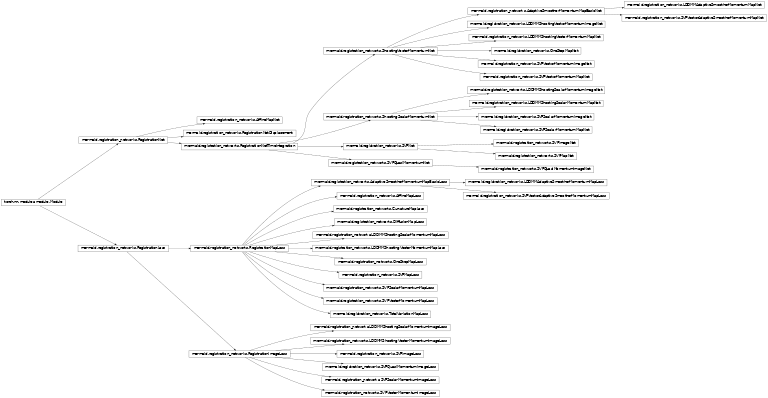

Registration networks

The registration network module implements the different registration algorithms.

Defines different registration methods as PyTorch networks. Currently implemented:

- SVFImageNet: image-based stationary velocity field

- SVFMapNet: map-based stationary velocity field

- SVFQuasiMomentumImageNet: EXPERIMENTAL (not working yet): SVF which is parameterized by a momentum

- SVFScalarMomentumImageNet: image-based SVF using the scalar-momentum parameterization

- SVFScalarMomentumMapNet: map-based SVF using the scalar-momentum parameterization

- SVFVectorMomentumImageNet: image-based SVF using the vector-momentum parameterization

- SVFVectorMomentumMapNet: map-based SVF using the vector-momentum parameterization

- CVFVectorMomentumMapNet: map-based CVF using the vector-momentum parameterization

- LDDMMShootingVectorMomentumImageNet: image-based LDDMM using the vector-momentum parameterization

- LDDMMShootingVectorMomentumMapNet: map-based LDDMM using the vector-momentum parameterization

- LDDMMShootingScalarMomentumImageNet: image-based LDDMM using the scalar-momentum parameterization

- LDDMMShootingScalarMomentumMapNet: map-based LDDMM using the scalar-momentum parameterization

class mermaid.registration_networks.RegistrationNet(sz, spacing, params)

Abstract base class for all the registration networks.

sz: image size

spacing: image spacing

params: ParameterDict() object for the parameters

nrOfImages: the number of images, i.e., the batch size B

nrOfChannels: the number of image channels, i.e., C

dim: dimension of the image

set_dictionary_to_pass_to_integrator(d)

The values will be transferred to the default dictionary (a shallow copy is fine).

Parameters: d – dictionary to pass to the integrator

Returns: dictionary

get_variables_to_transfer_to_loss_function()

This function can be overridden by models to return variables that are also needed to compute the loss function. It returns None by default, but can, for example, be used to pass parameters or smoothers that are needed by both the model itself and its loss. By convention these variables should be returned as a dictionary.

get_custom_optimizer_output_string()

Can be overridden by a derived class to provide additional optimizer output (on top of the energy values).

get_custom_optimizer_output_values()

Can be overridden by a derived class to provide additional optimizer history output (should in most cases go hand in hand with the string returned by get_custom_optimizer_output_string()).

create_registration_parameters()

Abstract method to create the registration parameters over which the optimization is performed. They need to be of type torch.nn.Parameter.

get_registration_parameters_and_buffers()

Method to return the registration parameters and buffers (i.e., the model state dictionary)

Returns: the registration parameters and buffers

get_registration_parameters()

Abstract method to return the registration parameters

Returns: the registration parameters

get_individual_registration_parameters()

Returns the parameters that have not been declared shared for optimization. These can, for example, be the parameters of a given registration model without the shared parameters of a smoother.

Same as get_shared_registration_parameters, but also includes buffers, which may not be parameters.

Returns the parameters that have been declared shared for optimization. These can, for example, be the parameters of a smoother that are shared between registrations.

set_registration_parameters(pars, sz, spacing)

Abstract method to set the registration parameters externally. This can, for example, be useful when the optimizer should be initialized at a specific value.

Parameters: - pars – dictionary of registration parameters

- sz – size of the image the parameter corresponds to

- spacing – spacing of the image the parameter corresponds to

set_individual_registration_parameters(pars)

Allows setting only the registration parameters that are not shared between registrations.

Parameters: pars – dictionary containing the parameters

Returns: n/a

Allows setting only the shared registration parameters.

Parameters: pars – dictionary containing the parameters

Returns: n/a

Loads the shared part of a state dictionary.

Parameters: sd – shared state dictionary

Returns: n/a

Returns the shared part of the state dictionary.

downsample_registration_parameters(desiredSz)

Method to downsample the registration parameters spatially to a desired size. Should be overridden by a derived class.

Parameters: desiredSz – desired size in XxYxZ format, e.g., [50,100,40]

Returns: should return a tuple (downsampled_image,downsampled_spacing)

upsample_registration_parameters(desiredSz)

Method to upsample the registration parameters spatially to a desired size. Should be overridden by a derived class.

Parameters: desiredSz – desired size in XxYxZ format, e.g., [50,100,40]

Returns: should return a tuple (upsampled_image,upsampled_spacing)
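Both resampling methods return a new spacing along with the resampled parameters. A common convention, assumed here rather than taken from mermaid, is to keep the physical extent \((n-1)\cdot\text{spacing}\) fixed per axis, so the new spacing scales with \((n-1)/(n_{desired}-1)\). The helper name resampled_spacing is hypothetical.

```python
import math


def resampled_spacing(spacing, sz, desired_sz):
    """Spacing after resampling so that (size - 1) * spacing (the physical extent) is preserved."""
    return [s * (n - 1) / (m - 1) for s, n, m in zip(spacing, sz, desired_sz)]


# A 100 x 50 grid covering [0, 1] x [0, 1], downsampled to 50 x 25 (XxY format).
sp = resampled_spacing([1.0 / 99, 1.0 / 49], sz=[100, 50], desired_sz=[50, 25])
```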

get_parameter_image_and_name_to_visualize(ISource=None)

Convenience function to specify an image that should be visualized, including its caption. This will typically be related to the parameters of a model. This method should be overridden by a derived class.

Parameters: ISource – (optional) source image, as this is part of the initial condition for some parameterizations

Returns: should return a tuple (image,desired_caption)

class mermaid.registration_networks.RegistrationNetDisplacement(sz, spacing, params)

Abstract base class for all the registration networks without time integration, which directly estimate a deformation field.

d: displacement field that will be optimized over

spline_order: order of the spline for interpolations

create_registration_parameters()

Creates the displacement field that is being optimized over

Returns: displacement field parameter

get_parameter_image_and_name_to_visualize(ISource=None)

Returns the magnitude image of the displacement field parameter and a name

Returns: the tuple (displacement_magnitude_image,name)

upsample_registration_parameters(desiredSz)

Upsamples the displacement field to a desired size

Parameters: desiredSz – desired size of the upsampled displacement field

Returns: a tuple (upsampled_state,upsampled_spacing)

downsample_registration_parameters(desiredSz)

Downsamples the displacement field to a desired size

Parameters: desiredSz – desired size of the downsampled displacement field

Returns: a tuple (downsampled_state,downsampled_spacing)

forward(phi, I0_source, phi_inv=None, variables_from_optimizer=None)

Solves the map-based equation forward

Parameters: - phi – initial condition for the map

- I0_source – not used

- phi_inv – inverse initial map (not used)

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: the map with the displacement subtracted

class mermaid.registration_networks.RegistrationNetTimeIntegration(sz, spacing, params)

Abstract base class for all the registration networks with time integration.

tFrom: time to solve a model from

tTo: time to solve a model to

use_CFL_clamping: if the model uses time integration, then CFL clamping is used

env: settings for the task environment of the solver or smoother

set_integration_tfrom(tFrom)

Sets the starting time for integration

Parameters: tFrom – starting time

Returns: n/a

get_integraton_tfrom()

Gets the starting integration time (typically 0)

Returns: starting integration time

set_integration_tto(tTo)

Sets the time up to which to integrate

Parameters: tTo – time to integrate to

Returns: n/a
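CFL clamping limits the time step so that information does not travel more than a fraction of a grid cell per step. The sketch below uses the textbook condition \(\max|v|\,\Delta t / \min(\text{spacing}) \le \text{cfl}\) to choose the number of equal steps between tFrom and tTo. The cfl factor of 0.5 and the function name cfl_num_steps are assumptions; mermaid's actual clamping rule may differ.

```python
import math


def cfl_num_steps(v_max_abs, spacing, t_from=0.0, t_to=1.0, cfl=0.5):
    """Number of equal time steps so that max|v| * dt / min(spacing) <= cfl."""
    if v_max_abs <= 0.0:
        return 1  # no motion: a single step suffices
    dt_max = cfl * min(spacing) / v_max_abs
    return max(1, math.ceil((t_to - t_from) / dt_max))


n_steps = cfl_num_steps(2.0, [0.125, 0.25])  # finest spacing 0.125 dictates the step
```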

class mermaid.registration_networks.SVFNet(sz, spacing, params)

Base class for SVF-type registrations. Provides a velocity field (as a parameter) and an integrator.

v: velocity field that will be optimized over

integrator: integrator to do the time integration

spline_order: order of the spline for interpolations

create_registration_parameters()

Creates the velocity field that is being optimized over

Returns: velocity field parameter

get_parameter_image_and_name_to_visualize(ISource=None)

Returns the magnitude image of the velocity field parameter and a name

Returns: the tuple (velocity_magnitude_image,name)

class mermaid.registration_networks.SVFImageNet(sz, spacing, params)

Specialization for SVF-based image registration

create_integrator()

Creates an integrator for the advection equation of the image

Returns: this integrator

-

forward(I, variables_from_optimizer=None)[source]¶ Solves the image-based advection equation

Parameters: - I – initial condition for the image

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: returns the image at the final time (tTo)
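SVFImageNet.forward solves an advection equation for the image, I_t + v·∇I = 0. A minimal 1D sketch, assuming a constant positive velocity, periodic boundaries and a first-order upwind scheme (all assumptions for illustration; mermaid's discretization may differ):

```python
def advect_upwind_periodic(image, v, dt, h, n_steps):
    """Solve I_t + v * I_x = 0 in 1D with first-order upwinding (constant v > 0)."""
    if v <= 0:
        raise ValueError("this sketch only handles v > 0")
    c = v * dt / h  # Courant number; the upwind scheme is stable for c <= 1
    if c > 1:
        raise ValueError("CFL condition violated")
    I = list(image)
    n = len(I)
    for _ in range(n_steps):
        # I[i - 1] wraps around to I[-1] for i == 0, which gives periodic boundaries.
        I = [I[i] - c * (I[i] - I[i - 1]) for i in range(n)]
    return I


print(advect_upwind_periodic([0.0, 1.0, 0.0, 0.0, 0.0], v=1.0, dt=0.25, h=0.25, n_steps=2))
# [0.0, 0.0, 0.0, 1.0, 0.0]
```

With a Courant number of exactly one, each upwind step reduces to a shift by one grid cell; smaller Courant numbers remain stable but smear the profile (numerical diffusion).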

-

-

class

mermaid.registration_networks.SVFQuasiMomentumNet(sz, spacing, params)[source]¶ Attempt at parameterizing SVF with a momentum-like vector field (EXPERIMENTAL, not working yet)

-

m= None¶ momentum parameter

-

smoother= None¶ smoother to go from momentum to velocity

-

v= None¶ corresponding velocity field

-

integrator= None¶ integrator to solve the forward model

-

spline_order= None¶ order of the spline for interpolations

-

write_parameters_to_settings()[source]¶ To be overridden to write the optimized parameters back to the settings they came from

-

get_custom_optimizer_output_string()[source]¶ Can be overridden to allow for additional optimizer output (on top of the energy values)

Returns:

-

get_custom_optimizer_output_values()[source]¶ Can be overridden to allow for additional optimizer history output (in most cases this should go hand in hand with the string returned by get_custom_optimizer_output_string())

Returns:

-

create_registration_parameters()[source]¶ Creates the registration parameters (the momentum field) and returns them

Returns: momentum field

-

get_parameter_image_and_name_to_visualize(ISource=None)[source]¶ Returns the momentum magnitude image and \(|m|\) as the image caption

Returns: Returns a tuple (magnitude_m,name)

-

upsample_registration_parameters(desiredSz)[source]¶ Method to upsample the registration parameters spatially to a desired size. Should be overridden by a derived class.

Parameters: desiredSz – desired size in XxYxZ format, e.g., [50,100,40] Returns: should return a tuple (upsampled_image,upsampled_spacing)

-

downsample_registration_parameters(desiredSz)[source]¶ Method to downsample the registration parameters spatially to a desired size. Should be overridden by a derived class.

Parameters: desiredSz – desired size in XxYxZ format, e.g., [50,100,40] Returns: should return a tuple (downsampled_image,downsampled_spacing)
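The (resampled_image, resampled_spacing) return convention can be illustrated in 1D. The sketch assumes the samples span a fixed physical interval, so the spacing scales with (n - 1)/(desiredSz - 1), and it uses plain linear interpolation without anti-aliasing. Both are assumptions, not a description of how mermaid resamples its multi-dimensional parameters; `resample_1d` is a hypothetical name.

```python
def resample_1d(values, spacing, desired_sz):
    """Linearly resample a 1D parameter field; return (resampled_values, new_spacing)."""
    n = len(values)
    if n < 2 or desired_sz < 2:
        raise ValueError("need at least two samples")
    length = spacing * (n - 1)  # physical extent covered by the samples
    new_spacing = length / (desired_sz - 1)
    out = []
    for j in range(desired_sz):
        pos = j * (n - 1) / (desired_sz - 1)  # position in old index units
        i = min(int(pos), n - 2)
        w = pos - i
        out.append((1 - w) * values[i] + w * values[i + 1])
    return out, new_spacing


print(resample_1d([0.0, 1.0, 2.0], spacing=0.5, desired_sz=5))
# ([0.0, 0.5, 1.0, 1.5, 2.0], 0.25)
```

The same function handles downsampling when desired_sz is smaller than the current size.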

-

-

class

mermaid.registration_networks.SVFQuasiMomentumImageNet(sz, spacing, params)[source]¶ Specialization for image registration

-

create_integrator()[source]¶ Creates the integrator that solves the advection equation (based on the smoothed momentum)

Returns: returns this integrator

-

forward(I, variables_from_optimizer=None)[source]¶ Solves the model by first smoothing the momentum field and then using it as the velocity for the advection equation

Parameters: - I – initial condition for the image

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: returns the image at the final time point (tTo)
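SVFQuasiMomentumImageNet (like the vector-momentum models further down) obtains its velocity by smoothing a momentum field. A 1D sketch of such a smoother, assuming a normalized Gaussian kernel truncated at three standard deviations and periodic boundaries. mermaid provides several smoothers; this is one plausible choice, and `gaussian_smooth_periodic` is a hypothetical name.

```python
import math


def gaussian_smooth_periodic(m, sigma, h):
    """Convolve a 1D field with a normalized Gaussian (std sigma, grid spacing h), periodic boundaries."""
    n = len(m)
    radius = max(1, math.ceil(3 * sigma / h))
    offsets = range(-radius, radius + 1)
    kernel = [math.exp(-0.5 * (k * h / sigma) ** 2) for k in offsets]
    total = sum(kernel)
    kernel = [w / total for w in kernel]  # weights sum to one, so constants are preserved
    return [sum(w * m[(i - k) % n] for w, k in zip(kernel, offsets)) for i in range(n)]


momentum = [0.0] * 16
momentum[8] = 1.0  # a single spike of momentum
velocity = gaussian_smooth_periodic(momentum, sigma=0.1, h=0.05)
print([round(x, 3) for x in velocity])
```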

-

-

class

mermaid.registration_networks.RegistrationLoss(sz_sim, spacing_sim, sz_model, spacing_model, params)[source]¶ Abstract base class to define a loss function for image registration

-

spacing_sim= None¶ image/map spacing for the similarity measure part of the loss function

-

spacing_model= None¶ spacing for any model parameters (typically for the regularization part of the loss function)

-

sz_sim= None¶ image size for the similarity measure part of the loss function

-

sz_model= None¶ image size for the model parameters (typically for the regularization part of the loss function)

-

params= None¶ ParameterDict() parameters

-

smFactory= None¶ factory to create similarity measures on the fly

-

similarityMeasure= None¶ the similarity measure itself

-

env= None¶ settings for the task environment of the solver or smoother

-

set_dictionary_to_pass_to_smoother(d)[source]¶ The values will be transferred to the default dictionary (a shallow copy is fine).

Parameters: d – dictionary to pass to smoother Returns: dictionary

-

add_similarity_measure(simName, simMeasure)[source]¶ To add a custom similarity measure to the similarity measure factory

Parameters: - simName – desired name of the similarity measure (string)

- simMeasure – similarity measure itself (to instantiate an object)

-

compute_similarity_energy(I1_warped, I1_target, I0_source=None, phi=None, variables_from_forward_model=None, variables_from_optimizer=None)[source]¶ Computes the image matching energy based on the selected similarity measure

Parameters: - I1_warped – warped image at time tTo

- I1_target – target image to register to

- I0_source – source image at time 0 (typically not used)

- phi – map to warp I0_source to target space (typically not used)

- variables_from_forward_model – allows passing in additional variables (intended to pass variables between the forward model and the loss function)

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: returns the value for image similarity energy

-

compute_regularization_energy(I0_source, variables_from_forward_model=None, variables_from_optimizer=None)[source]¶ Abstract method computing the regularization energy based on the registration parameters and (if desired) the initial image

Parameters: - I0_source – Initial image

- variables_from_forward_model – allows passing in additional variables (intended to pass variables between the forward model and the loss function)

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: should return the value for the regularization energy

-

-

class

mermaid.registration_networks.RegistrationImageLoss(sz_sim, spacing_sim, sz_model, spacing_model, params)[source]¶ Specialization for image-based registration losses

-

get_energy(I1_warped, I0_source, I1_target, variables_from_forward_model=None, variables_from_optimizer=None)[source]¶ Computes the overall registration energy as E = E_sim + E_reg

Parameters: - I1_warped – warped image

- I0_source – source image

- I1_target – target image

- variables_from_forward_model – allows passing in additional variables (intended to pass variables between the forward model and the loss function)

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: returns the energy value

-

forward(I1_warped, I0_source, I1_target, variables_from_forward_model=None, variables_from_optimizer=None)[source]¶ Computes the loss by evaluating the energy

Parameters: - I1_warped – warped image

- I0_source – source image

- I1_target – target image

- variables_from_forward_model – allows passing in additional variables (intended to pass variables between the forward model and the loss function)

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: tuple: overall energy, similarity energy, regularization energy
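RegistrationImageLoss.forward returns the tuple (E, E_sim, E_reg) with E = E_sim + E_reg. The hypothetical class below mirrors that split on flattened 1D lists. The sum-of-squared-differences similarity, its sigma scaling and the placeholder L2 regularizer are assumptions chosen for brevity; they are not mermaid's similarity measures or regularizers.

```python
class ImageLossSketch:
    """Hypothetical stand-in for an image-based registration loss (not mermaid's class)."""

    def __init__(self, spacing, sigma=1.0, reg_weight=1.0):
        self.sigma = sigma
        self.reg_weight = reg_weight
        self.cell_volume = 1.0
        for s in spacing:
            self.cell_volume *= s  # volume of one grid cell

    def compute_similarity_energy(self, I1_warped, I1_target):
        # Sum of squared differences, scaled by cell volume and 1 / sigma^2.
        ssd = sum((a - b) ** 2 for a, b in zip(I1_warped, I1_target))
        return ssd * self.cell_volume / self.sigma ** 2

    def compute_regularization_energy(self, params):
        # Placeholder regularizer: weighted squared L2 norm of the parameters.
        return self.reg_weight * sum(p * p for p in params) * self.cell_volume

    def forward(self, I1_warped, I1_target, params):
        e_sim = self.compute_similarity_energy(I1_warped, I1_target)
        e_reg = self.compute_regularization_energy(params)
        return e_sim + e_reg, e_sim, e_reg


loss = ImageLossSketch(spacing=[0.5])
print(loss.forward([1.0, 2.0], [1.0, 0.0], params=[2.0]))  # (4.0, 2.0, 2.0)
```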

-

-

class

mermaid.registration_networks.RegistrationMapLoss(sz_sim, spacing_sim, sz_model, spacing_model, params)[source]¶ Specialization for map-based registration losses

-

spline_order= None¶ order of the spline for interpolations

-

get_energy(phi0, phi1, I0_source, I1_target, lowres_I0, variables_from_forward_model=None, variables_from_optimizer=None)[source]¶ Compute the energy by warping the source image via the map and then comparing it to the target image

Parameters: - phi0 – map (initial map from which phi1 is computed by integration; likely the identity map)

- phi1 – map (mapping the source image to the target image, defined in the space of the target image)

- I0_source – source image

- I1_target – target image

- lowres_I0 – for maps with reduced resolution, this is the downsampled source image; it may be needed to compute the regularization energy

- variables_from_forward_model – allows passing in additional variables (intended to pass variables between the forward model and the loss function)

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: registration energy

-

forward(phi0, phi1, I0_source, I1_target, lowres_I0, variables_from_forward_model=None, variables_from_optimizer=None)[source]¶ Compute the loss function value by evaluating the registration energy

Parameters: - phi0 – map (initial map from which phi1 is computed by integration; likely the identity map)

- phi1 – map (mapping the source image to the target image, defined in the space of the target image)

- I0_source – source image

- I1_target – target image

- lowres_I0 – for maps with reduced resolution, this is the downsampled source image; it may be needed to compute the regularization energy

- variables_from_forward_model – allows passing in additional variables (intended to pass variables between the forward model and the loss function)

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: tuple: overall energy, similarity energy, regularization energy
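RegistrationMapLoss computes similarity by warping the source image with phi1 and comparing the result with the target. Because phi1 is defined in target space, each target location x samples the source at phi1(x). A 1D sketch with linear interpolation, assuming phi1 stores positions in index units and clamping at the border; mermaid's coordinate convention and its spline_order-based interpolation may differ, and `warp_1d` is a hypothetical name.

```python
def warp_1d(source, phi):
    """Warp a 1D image by linear interpolation: warped[i] = source(phi[i]).

    phi holds sampling positions in index units; positions outside the image are clamped.
    """
    n = len(source)
    out = []
    for x in phi:
        x = min(max(x, 0.0), n - 1.0)
        i = min(int(x), n - 2)
        w = x - i
        out.append((1 - w) * source[i] + w * source[i + 1])
    return out


I0 = [0.0, 10.0, 20.0, 30.0]
identity = [0.0, 1.0, 2.0, 3.0]
print(warp_1d(I0, identity))                     # [0.0, 10.0, 20.0, 30.0]
print(warp_1d(I0, [x + 0.5 for x in identity]))  # [5.0, 15.0, 25.0, 30.0]
```

The warped result would then be passed to the similarity measure together with the target image.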

-

-

class

mermaid.registration_networks.SVFImageLoss(v, sz_sim, spacing_sim, sz_model, spacing_model, params)[source]¶ Loss specialization for image-based SVF

-

v= None¶ velocity field parameter

-

regularizer= None¶ regularizer to compute the regularization energy

-

compute_regularization_energy(I0_source, variables_from_forward_model=None, variables_from_optimizer=False)[source]¶ Computes the regularization energy

Parameters: - I0_source – source image (not used)

- variables_from_forward_model – (not used)

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: returns the regularization energy

-

-

class

mermaid.registration_networks.SVFQuasiMomentumImageLoss(m, sz_sim, spacing_sim, sz_model, spacing_model, params)[source]¶ Loss function specialization for the image-based quasi-momentum SVF implementation. Essentially the same as for SVF but has to smooth the momentum field first to obtain the velocity field.

-

m= None¶ vector momentum

-

regularizer= None¶ regularizer to compute the regularization energy

-

smoother= None¶ smoother to convert from momentum to velocity

-

compute_regularization_energy(I0_source, variables_from_forward_model=None, variables_from_optimizer=None)[source]¶ Compute the regularization energy from the momentum

Parameters: - I0_source – not used

- variables_from_forward_model – (not used)

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: returns the regularization energy

-

-

class

mermaid.registration_networks.SVFMapNet(sz, spacing, params, compute_inverse_map=False)[source]¶ Network specialization to a map-based SVF

-

compute_inverse_map= None¶ If set to True the inverse map is computed on the fly

-

create_integrator()[source]¶ Creates an integrator to solve a map-based advection equation

Returns: returns this integrator

-

forward(phi, I0_source, phi_inv=None, variables_from_optimizer=None)[source]¶ Solves the map-based equation forward

Parameters: - phi – initial condition for the map

- I0_source – not used

- phi_inv – inverse initial map

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: returns the map at time tTo

-

-

class

mermaid.registration_networks.SVFMapLoss(v, sz_sim, spacing_sim, sz_model, spacing_model, params)[source]¶ Specialization of the loss function for SVF to a map-based solution

-

v= None¶ velocity field parameter

-

regularizer= None¶ regularizer to compute the regularization energy

-

compute_regularization_energy(I0_source, variables_from_forward_model=None, variables_from_optimizer=None)[source]¶ Computes the regularization energy from the velocity field parameter

Parameters: - I0_source – not used

- variables_from_forward_model – (not used)

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: returns the regularization energy

-

-

class

mermaid.registration_networks.DiffusionMapLoss(d, sz_sim, spacing_sim, sz_model, spacing_model, params)[source]¶ Specialization of the loss function for displacement-based registration to diffusion registration

-

d= None¶ displacement field parameter

-

regularizer= None¶ regularizer to compute the regularization energy

-

compute_regularization_energy(I0_source, variables_from_forward_model=None, variables_from_optimizer=None)[source]¶ Computes the regularization energy from the displacement field parameter

Parameters: - I0_source – not used

- variables_from_forward_model – (not used)

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: returns the regularization energy

-

-

class

mermaid.registration_networks.TotalVariationMapLoss(d, sz_sim, spacing_sim, sz_model, spacing_model, params)[source]¶ Specialization of the loss function for displacement-based registration to total variation registration

-

d= None¶ displacement field parameter

-

regularizer= None¶ regularizer to compute the regularization energy

-

compute_regularization_energy(I0_source, variables_from_forward_model=None, variables_from_optimizer=None)[source]¶ Computes the regularization energy from the displacement field parameter

Parameters: - I0_source – not used

- variables_from_forward_model – (not used)

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: returns the regularization energy

-

-

class

mermaid.registration_networks.CurvatureMapLoss(d, sz_sim, spacing_sim, sz_model, spacing_model, params)[source]¶ Specialization of the loss function for displacement-based registration to curvature registration

-

d= None¶ displacement field parameter

-

regularizer= None¶ regularizer to compute the regularization energy

-

compute_regularization_energy(I0_source, variables_from_forward_model=None, variables_from_optimizer=None)[source]¶ Computes the regularization energy from the displacement field parameter

Parameters: - I0_source – not used

- variables_from_forward_model – (not used)

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: returns the regularization energy
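DiffusionMapLoss, TotalVariationMapLoss and CurvatureMapLoss differ mainly in how the displacement field d is penalized. A 1D sketch of the three classical penalties (squared first derivatives, absolute first derivatives, squared second derivatives) using forward differences and a Riemann sum. The discretization and the absence of weighting factors are assumptions; mermaid's regularizers operate on multi-dimensional fields.

```python
def forward_diff(f, h):
    """Forward differences (f[i + 1] - f[i]) / h."""
    return [(f[i + 1] - f[i]) / h for i in range(len(f) - 1)]


def diffusion_energy(d, h):
    # Integral of |d'|^2.
    return sum(g * g for g in forward_diff(d, h)) * h


def total_variation_energy(d, h):
    # Integral of |d'|.
    return sum(abs(g) for g in forward_diff(d, h)) * h


def curvature_energy(d, h):
    # Integral of |d''|^2; zero for any affine (linear) displacement.
    return sum(s * s for s in forward_diff(forward_diff(d, h), h)) * h


for name, d in (("ramp", [0.0, 1.0, 2.0, 3.0]), ("step", [0.0, 0.0, 2.0, 2.0])):
    print(name, diffusion_energy(d, 1.0), total_variation_energy(d, 1.0), curvature_energy(d, 1.0))
# ramp 3.0 3.0 0.0
# step 4.0 2.0 8.0
```

The curvature penalty vanishes for the ramp, i.e., it does not penalize affine displacements, whereas diffusion and total variation do. For a monotone field, total variation only measures the total rise, regardless of how sharp it is.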

-

-

class

mermaid.registration_networks.AffineMapNet(sz, spacing, params, compute_inverse_map=False)[source]¶ Registration network for affine transformation

-

create_registration_parameters()[source]¶ Abstract method to create the registration parameters over which should be optimized. They need to be of type torch Parameter()

-

get_parameter_image_and_name_to_visualize(ISource=None)[source]¶ Returns the velocity field parameter magnitude image and a name

Returns: Returns the tuple (velocity_magnitude_image,name)

-

upsample_registration_parameters(desiredSz)[source]¶ Upsamples the affine parameters to a desired size (i.e., just returns them)

Parameters: desiredSz – desired size of the upsampled image Returns: returns a tuple (upsampled_state,upsampled_spacing)

-

downsample_registration_parameters(desiredSz)[source]¶ Downsamples the affine parameters to a desired size (i.e., just returns them)

Parameters: desiredSz – desired size of the downsampled image Returns: returns a tuple (downsampled_state,downsampled_spacing)

-

forward(phi, I0_source, phi_inv=None, variables_from_optimizer=None)[source]¶ Solves the map-based equation forward

Parameters: - phi – initial condition for the map

- I0_source – not used

- phi_inv – inverse initial map (not used)

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: returns the map at time tTo
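AffineMapNet.forward returns a map without integrating a velocity field: the affine transformation x -> A x + b is applied to the initial map phi. A 2D sketch on a list of points. Representing the parameters as an explicit matrix A and vector b is an assumption of this sketch, since the layout of mermaid's Ab parameter is not documented here. It also suggests why up- and downsampling can simply return the parameters: A and b do not depend on the grid resolution.

```python
def apply_affine_to_map(A, b, phi):
    """Hypothetical helper: evaluate x -> A x + b at every 2D point of the map phi."""
    return [
        (A[0][0] * x + A[0][1] * y + b[0], A[1][0] * x + A[1][1] * y + b[1])
        for x, y in phi
    ]


identity_map = [(x, y) for x in (0.0, 1.0) for y in (0.0, 1.0)]
A = [[1.0, 0.0], [0.0, 1.0]]
b = [0.5, -0.25]  # a pure translation
print(apply_affine_to_map(A, b, identity_map))
# [(0.5, -0.25), (0.5, 0.75), (1.5, -0.25), (1.5, 0.75)]
```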

-

-

class

mermaid.registration_networks.AffineMapLoss(Ab, sz_sim, spacing_sim, sz_model, spacing_model, params)[source]¶ Specialization of the loss function for a map-based affine transformation

-

Ab= None¶ affine parameters

-

compute_regularization_energy(I0_source, variables_from_forward_model=None, variables_from_optimizer=None)[source]¶ Computes the regularization energy from the affine parameters

Parameters: - I0_source – not used

- variables_from_forward_model – (not used)

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: returns the regularization energy

-

-

class

mermaid.registration_networks.ShootingVectorMomentumNet(sz, spacing, params)[source]¶ Methods using vector-momentum-based shooting

-

smoother= None¶ smoother

-

integrator= None¶ integrator to solve EPDiff variant

-

spline_order= None¶ order of the spline for interpolations

-

initial_velocity= None¶ the velocity field at t0, saved during map computation and used during loss computation

-

sparams= None¶ settings for the vector momentum related methods

-

velocity_mask= None¶ a continuous image-size float tensor mask, to force zero velocity at the boundary

-

write_parameters_to_settings()[source]¶ To be overridden to write the optimized parameters back to the settings they came from

-

get_custom_optimizer_output_string()[source]¶ Can be overridden to allow for additional optimizer output (on top of the energy values)

Returns:

-

get_custom_optimizer_output_values()[source]¶ Can be overridden to allow for additional optimizer history output (in most cases this should go hand in hand with the string returned by get_custom_optimizer_output_string())

Returns:

-

get_variables_to_transfer_to_loss_function()[source]¶ This function can be overridden by models to return variables that are also needed to compute the loss function. It returns None by default but can, for example, be used to pass parameters or smoothers that are needed both by the model itself and by its loss. By convention, these variables should be returned as a dictionary.

Returns:

-

create_registration_parameters()[source]¶ Creates the vector momentum parameter

Returns: Returns the vector momentum parameter

-

get_parameter_image_and_name_to_visualize(ISource=None)[source]¶ Creates a magnitude image for the momentum and returns it with name \(|m|\)

Returns: Returns tuple (m_magnitude_image,name)

-

-

class

mermaid.registration_networks.LDDMMShootingVectorMomentumImageNet(sz, spacing, params)[source]¶ Specialization of vector-momentum LDDMM for direct image matching.

-

integrator= None¶ integrator to solve EPDiff variant

-

create_integrator()[source]¶ Creates an integrator to solve EPDiff together with an advection equation for the image

Returns: returns this integrator

-

forward(I, variables_from_optimizer=None)[source]¶ Integrates EPDiff plus advection equation for image forward

Parameters: - I – Initial condition for image

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: returns the image at time tTo
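The shooting models integrate EPDiff, which evolves the momentum m while the velocity is obtained by smoothing, v = K * m. In 1D, EPDiff reads m_t + v m_x + 2 m v_x = 0. The sketch below takes forward-Euler steps with central differences, periodic boundaries and a Gaussian K. These numerical choices are assumptions for illustration, not mermaid's integrator, and the image advection that LDDMMShootingVectorMomentumImageNet solves alongside EPDiff is omitted.

```python
import math


def gaussian_smooth(m, sigma, h):
    """v = K * m with a normalized Gaussian K truncated at 3 sigma, periodic boundaries."""
    n = len(m)
    r = max(1, math.ceil(3 * sigma / h))
    offsets = range(-r, r + 1)
    weights = [math.exp(-0.5 * (k * h / sigma) ** 2) for k in offsets]
    total = sum(weights)
    return [sum(w * m[(i - k) % n] for w, k in zip(weights, offsets)) / total for i in range(n)]


def ddx(f, h):
    """Central differences with periodic boundaries."""
    n = len(f)
    return [(f[(i + 1) % n] - f[i - 1]) / (2 * h) for i in range(n)]


def epdiff_1d(m0, dt, n_steps, sigma, h):
    """Forward-Euler integration of m_t + v m_x + 2 m v_x = 0 with v = K * m."""
    m = list(m0)
    for _ in range(n_steps):
        v = gaussian_smooth(m, sigma, h)
        m_x, v_x = ddx(m, h), ddx(v, h)
        m = [mi - dt * (vi * mxi + 2.0 * mi * vxi) for mi, vi, mxi, vxi in zip(m, v, m_x, v_x)]
    return m


h = 1.0 / 32
m0 = [math.exp(-0.5 * ((i * h - 0.5) / 0.05) ** 2) for i in range(32)]
m1 = epdiff_1d(m0, dt=0.01, n_steps=10, sigma=0.1, h=h)
print(sum(m0) * h, sum(m1) * h)  # total momentum agrees up to round-off
```

Because the smoothing and difference operators are both circulant (one symmetric, one antisymmetric), this discretization conserves the sum of m, mirroring conservation of total momentum in the continuous equation.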

-

-

class

mermaid.registration_networks.LDDMMShootingVectorMomentumImageLoss(m, sz_sim, spacing_sim, sz_model, spacing_model, params)[source]¶ Specialization of the image loss to vector-momentum LDDMM

-

m= None¶ momentum

-

compute_regularization_energy(I0_source, variables_from_forward_model, variables_from_optimizer=None)[source]¶ Computes the regularization energy based on the initial momentum

Parameters: - I0_source – not used

- variables_from_forward_model – allows passing in additional variables (intended to pass variables between the forward model and the loss function)

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: regularization energy
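In standard vector-momentum shooting, the regularization energy is the squared norm of the initial velocity, ||v0||_V^2 = <m0, K m0>, with v0 = K m0. A 1D sketch under the assumption that mermaid follows this form up to weighting, using a hypothetical three-tap symmetric kernel as K (its Fourier coefficients 0.5 + 0.5 cos θ are non-negative, so the energy cannot become negative). mermaid's actual smoother and weights are not reproduced here.

```python
def smooth_3tap(m):
    """Hypothetical K: periodic convolution with the symmetric kernel [0.25, 0.5, 0.25]."""
    n = len(m)
    return [0.25 * m[i - 1] + 0.5 * m[i] + 0.25 * m[(i + 1) % n] for i in range(n)]


def momentum_regularization_energy(m0, h):
    """<m0, K m0>, approximated by a Riemann sum with grid spacing h."""
    v0 = smooth_3tap(m0)
    return h * sum(mi * vi for mi, vi in zip(m0, v0))


print(momentum_regularization_energy([0.0, 1.0, 0.0, 0.0], h=1.0))  # 0.5
```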

-

-

class

mermaid.registration_networks.SVFVectorMomentumImageNet(sz, spacing, params)[source]¶ Specialization of vector momentum based stationary velocity field image-based matching

-

integrator= None¶ integrator to solve EPDiff variant

-

-

class

mermaid.registration_networks.SVFVectorMomentumImageLoss(m, sz_sim, spacing_sim, sz_model, spacing_model, params)[source]¶ Specialization of the loss to vector-momentum stationary velocity field on images

-

m= None¶ vector momentum

-

compute_regularization_energy(I0_source, variables_from_forward_model, variables_from_optimizer=None)[source]¶ Computes the regularization energy from the initial vector momentum

Parameters: - I0_source – source image

- variables_from_forward_model – allows passing in additional variables (intended to pass variables between the forward model and the loss function)

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: returns the regularization energy

-

-

class

mermaid.registration_networks.LDDMMShootingVectorMomentumMapNet(sz, spacing, params, compute_inverse_map=False)[source]¶ Specialization for map-based vector-momentum where the map itself is advected

-

compute_inverse_map= None¶ If set to True the inverse map is computed on the fly

-

integrator= None¶ integrator to solve EPDiff variant

-

create_integrator()[source]¶ Creates an integrator for EPDiff + advection equation for the map

Returns: returns this integrator

-

forward(phi, I0_source, phi_inv=None, variables_from_optimizer=None)[source]¶ Solves EPDiff + advection equation forward and returns the map at time tTo

Parameters: - phi – initial condition for the map

- I0_source – not used

- phi_inv – inverse initial map

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: returns the map at time tTo

-

-

class

mermaid.registration_networks.LDDMMShootingVectorMomentumMapLoss(m, sz_sim, spacing_sim, sz_model, spacing_model, params)[source]¶ Specialization of the loss for map-based vector momentum. Image similarity is computed based on warping the source image with the advected map.

-

m= None¶ vector momentum

-

compute_regularization_energy(I0_source, variables_from_forward_model, variables_from_optimizer=None)[source]¶ Computes the regularization energy from the initial vector momentum

Parameters: - I0_source – not used

- variables_from_forward_model – allows passing in additional variables (intended to pass variables between the forward model and the loss function)

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: returns the regularization energy

-

-

class

mermaid.registration_networks.SVFVectorMomentumMapNet(sz, spacing, params, compute_inverse_map=False)[source]¶ Specialization of vector momentum based stationary velocity field map-based matching

-

compute_inverse_map= None¶ If set to True the inverse map is computed on the fly

-

integrator= None¶ integrator to solve EPDiff variant

-

create_integrator()[source]¶ Creates an integrator integrating the vector momentum conservation law and an advection equation for the map

Returns: returns this integrator

-

forward(phi, I0_source, phi_inv=None, variables_from_optimizer=None)[source]¶ Solves the vector momentum forward equation and returns the map at time tTo

Parameters: - phi – initial map

- I0_source – not used

- phi_inv – initial inverse map

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: map at time tTo

-

-

class

mermaid.registration_networks.SVFVectorMomentumMapLoss(m, sz_sim, spacing_sim, sz_model, spacing_model, params)[source]¶ Specialization of loss for vector momentum based stationary velocity field

-

m= None¶ vector momentum

-

compute_regularization_energy(I0_source, variables_from_forward_model, variables_from_optimizer=None)[source]¶ Computes the regularization energy from the initial vector momentum

Parameters: - I0_source – source image

- variables_from_forward_model – allows passing in additional variables (intended to pass variables between the forward model and the loss function)

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: returns the regularization energy

-

-

class

mermaid.registration_networks.AdaptiveSmootherMomentumMapBasicNet(sz, spacing, params, compute_inverse_map=False)[source]¶ Methods using map-based vector momentum and adaptive regularizers

-

compute_inverse_map= None¶ If set to True the inverse map is computed on the fly

-

create_single_local_smoother(sz, spacing)[source]¶ Creates a single local Gaussian smoother, which is used to smooth the pre-weights

Returns:

-

create_local_filter_weights_parameters()[source]¶ Creates the parameters of the regularizer: a weight vector for the multi-Gaussian smoother at each position

Returns: Returns the vector momentum parameter

-

upsample_registration_parameters(desiredSz)[source]¶ Upsamples the vector-momentum and regularizer parameter

Parameters: desiredSz – desired size of the upsampled momentum Returns: Returns tuple (upsampled_state,upsampled_spacing)

-

downsample_registration_parameters(desiredSz)[source]¶ Downsamples the vector-momentum and regularizer parameter

Parameters: desiredSz – desired size of the downsampled momentum Returns: Returns tuple (downsampled_state,downsampled_spacing)

-

forward(phi, I0_source, phi_inv=None, variables_from_optimizer=None)[source]¶ Defines the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for the forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this, since the former takes care of running the registered hooks while the latter silently ignores them.

-

get_parameter_image_and_name_to_visualize(ISource=None, use_softmax=False, output_preweight=True)[source]¶ Visualizes the regularizer parameters

Parameters: - ISource – not used

- use_softmax – true: apply softmax to get pre-weight

- output_preweight – true: output the pre-weight of the regularizer; false: output the weight of the regularizer

Returns:
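The adaptive models use a multi-Gaussian smoother whose weights vary with position; with use_softmax, unnormalized pre-weights are turned into weights by a softmax. One simple way to realize this, assumed here for illustration, is to blend the Gaussian-smoothed momenta at each position with softmax-normalized weights. mermaid's exact formulation (for example, how the weights enter the convolution) may differ, and all names below are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax; the result is positive and sums to one."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def gaussian_smooth(m, sigma, h):
    """Periodic convolution with a normalized Gaussian truncated at 3 sigma."""
    n = len(m)
    r = max(1, math.ceil(3 * sigma / h))
    offsets = range(-r, r + 1)
    weights = [math.exp(-0.5 * (k * h / sigma) ** 2) for k in offsets]
    total = sum(weights)
    return [sum(w * m[(i - k) % n] for w, k in zip(weights, offsets)) / total for i in range(n)]


def adaptive_smooth(m, pre_weights, sigmas, h):
    """pre_weights[i] holds one unnormalized score per Gaussian at position i."""
    smoothed = [gaussian_smooth(m, s, h) for s in sigmas]
    out = []
    for i in range(len(m)):
        w = softmax(pre_weights[i])
        out.append(sum(wk * field[i] for wk, field in zip(w, smoothed)))
    return out


m = [0.0] * 8 + [1.0] + [0.0] * 7
# Favour the wide Gaussian (sigma 0.2) on the left half, the narrow one (sigma 0.05) on the right half.
pre = [[0.0, 3.0]] * 8 + [[3.0, 0.0]] * 8
v = adaptive_smooth(m, pre, sigmas=[0.05, 0.2], h=1.0 / 16)
print([round(x, 3) for x in v])
```

Since each Gaussian preserves constants and the weights sum to one at every position, a constant momentum field stays constant under this blend.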

-

-

class

mermaid.registration_networks.AdaptiveSmootherMomentumMapBasicLoss(m, sz_sim, spacing_sim, sz_model, spacing_model, params)[source]¶ Specialization of the loss for adaptive map-based vector momentum. Image similarity is computed based on warping the source image with the advected map.

-

m= None¶ vector momentum

-

compute_regularization_energy(I0_source, variables_from_forward_model, variables_from_optimizer=None)[source]¶ Computes the regularization energy from the initial vector momentum and the adaptive smoother

Parameters: - I0_source – source image

- variables_from_forward_model – allows passing in additional variables (intended to pass variables between the forward model and the loss function)

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: returns the regularization energy

-

-

class

mermaid.registration_networks.SVFVectorAdaptiveSmootherMomentumMapNet(sz, spacing, params, compute_inverse_map=False)[source]¶ Specialization of vector momentum based stationary velocity field with adaptive regularizer

-

compute_inverse_map= None¶ If set to True the inverse map is computed on the fly

-

integrator= None¶ integrator to solve EPDiff variant

-

create_integrator()[source]¶ Creates an integrator integrating the vector momentum conservation law, an advection equation for the map with adaptive regularizer

Returns: returns this integrator

-

forward(phi, I0_source, phi_inv=None, variables_from_optimizer=None)[source]¶ Solves the vector momentum forward equation and returns the map at time tTo

Parameters: - phi – initial map

- I0_source – source image

- phi_inv – initial inverse map

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: map at time tTo

-

-

class

mermaid.registration_networks.SVFVectorAdaptiveSmootherMomentumMapLoss(m, sz_sim, spacing_sim, sz_model, spacing_model, params)[source]¶ Specialization of the loss to map-based vector-momentum SVF with an adaptive regularizer

-

class

mermaid.registration_networks.LDDMMAdaptiveSmootherMomentumMapNet(sz, spacing, params, compute_inverse_map=False)[source]¶ Specialization of LDDMM with adaptive regularizer

-

integrator= None¶ integrator to solve EPDiff variant

-

create_integrator()[source]¶ Creates an integrator for generalized EPDiff + advection equation for the map

Returns: returns this integrator

-

forward(phi, I0_source, phi_inv=None, variables_from_optimizer=None)[source]¶ Solves generalized EPDiff + advection equation forward and returns the map at time tTo

Parameters: - phi – initial condition for the map

- I0_source – source image

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: returns the map at time tTo

-

-

class

mermaid.registration_networks.LDDMMAdaptiveSmootherMomentumMapLoss(m, sz_sim, spacing_sim, sz_model, spacing_model, params)[source]¶ Specialization of the loss for map-based vector momentum. Image similarity is computed based on warping the source image with the advected map.

-

class

mermaid.registration_networks.OneStepMapNet(sz, spacing, params, compute_inverse_map=False)[source]¶ Specialization for single step registration

-

compute_inverse_map= None¶ If set to True the inverse map is computed on the fly

-

integrator= None¶ integrator to solve EPDiff variant

-

forward(phi, I0_source, phi_inv=None, variables_from_optimizer=None)[source]¶ Smooths and returns the transformation map

Parameters: - phi – initial condition for the map

- I0_source – not used

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: returns the map at time tTo

-

-

class

mermaid.registration_networks.OneStepMapLoss(m, sz_sim, spacing_sim, sz_model, spacing_model, params)[source]¶ Specialization of the loss for OneStepMapNet.

-

m= None¶ vector momentum

-

compute_regularization_energy(I0_source, variables_from_forward_model, variables_from_optimizer=None)[source]¶ Computes the regularization energy

Parameters: - I0_source – not used

- variables_from_forward_model – allows passing in additional variables (intended to pass variables between the forward model and the loss function)

- variables_from_optimizer – allows passing variables (as a dict from the optimizer; e.g., the current iteration)

Returns: returns the regularization energy

-

-

class

mermaid.registration_networks.ShootingScalarMomentumNet(sz, spacing, params)[source]¶ Specialization of the registration network to registrations with scalar momentum. Provides an integrator and the scalar momentum parameter.

-

lam= None¶ scalar momentum

-

smoother= None¶ smoother

-

integrator= None¶ integrator to integrate EPDiff and associated equations (for image or map)

-

spline_order= None¶ order of the spline for interpolations

-

write_parameters_to_settings()[source]¶ To be overridden to write the optimized parameters back to the settings they came from

-

get_custom_optimizer_output_string()[source]¶ Can be overridden to allow for additional optimizer output (on top of the energy values)

Returns:

-

get_custom_optimizer_output_values()[source]¶ Can be overridden to allow for additional optimizer history output (in most cases this should go hand in hand with the string returned by get_custom_optimizer_output_string())

Returns:

-

get_variables_to_transfer_to_loss_function()[source]¶ This function can be overridden by models to return variables that are also needed to compute the loss function. It returns None by default but can, for example, be used to pass parameters or smoothers that are needed both by the model itself and by its loss. By convention, these variables should be returned as a dictionary.

Returns:
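The dictionary convention can be pictured as follows: the network exposes a smoother under a key, and the loss looks it up in `variables_from_forward_model`. Both classes are hypothetical stand-ins, and the toy smoother (multiplication by 2) only keeps the arithmetic easy to check; the method names and the regularization signature mirror the ones documented here, but nothing else about mermaid's internals is assumed.

```python
import numpy as np


class HypotheticalScalarMomentumNet:
    """Hypothetical network that owns a smoother the loss also needs."""

    def __init__(self, smoother):
        self.smoother = smoother

    def get_variables_to_transfer_to_loss_function(self):
        # By convention a dictionary; here it hands the smoother to the loss
        return {"smoother": self.smoother}


class HypotheticalScalarMomentumLoss:
    """Hypothetical loss that reads the smoother from the transferred variables."""

    def __init__(self, m):
        self.m = m  # momentum the regularizer acts on

    def compute_regularization_energy(self, I0_source, variables_from_forward_model,
                                      variables_from_optimizer=None):
        smoother = variables_from_forward_model["smoother"]  # I0_source is unused here
        v = smoother(self.m)
        return 0.5 * float(np.sum(self.m * v))


net = HypotheticalScalarMomentumNet(smoother=lambda m: 2.0 * m)
loss = HypotheticalScalarMomentumLoss(m=np.ones(4))
energy = loss.compute_regularization_energy(None, net.get_variables_to_transfer_to_loss_function())
```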

-

create_registration_parameters()[source]¶ Creates the scalar momentum registration parameter

Returns: the scalar momentum parameter

-

get_parameter_image_and_name_to_visualize(ISource=None)[source]¶ Returns an image of the scalar momentum (magnitude over all channels) and ‘lambda’ as name

Returns: tuple (lambda_magnitude, lambda_name)
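The magnitude over all channels is a root-sum-of-squares along the channel axis. This sketch assumes a NumPy array laid out as B x C x X x Y (channels on axis 1), following the BxCxXxYxZ convention used for image and parameter sizes; mermaid itself works with PyTorch tensors, and the function name is hypothetical.

```python
import numpy as np


def parameter_image_and_name_to_visualize(lam):
    """Channel-wise magnitude of the scalar momentum plus a display name.

    Assumes lam has shape B x C x X x Y (x Z), i.e., channels on axis 1.
    """
    lam_magnitude = np.sqrt(np.sum(lam ** 2, axis=1))  # collapses the channel axis
    return lam_magnitude, "lambda"
```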

-

-

class

mermaid.registration_networks.SVFScalarMomentumImageNet(sz, spacing, params)[source]¶ Specialization of scalar-momentum SVF registration to image-based matching

-

class

mermaid.registration_networks.SVFScalarMomentumImageLoss(lam, sz_sim, spacing_sim, sz_model, spacing_model, params)[source]¶ Specialization of the loss to scalar-momentum SVF on images

-

lam= None¶ scalar momentum

-

develop_smoother= None¶ smoother to go from momentum to velocity

-

compute_regularization_energy(I0_source, variables_from_forward_model, variables_from_optimizer=None)[source]¶ Computes the regularization energy from the initial vector momentum as obtained from the scalar momentum

Parameters: - I0_source – source image

- variables_from_forward_model – allows passing in additional variables (intended to pass variables between the forward model and the loss function)

- variables_from_optimizer – allows passing variables from the optimizer as a dict (e.g., the current iteration)

Returns: the regularization energy
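The vector momentum "as obtained from the scalar momentum" is m = λ∇I0. The 2D sketch below computes m with `np.gradient`, smooths each component with a separable Gaussian standing in for `develop_smoother`, and evaluates ½⟨m, v⟩ as a Riemann sum. The Gaussian smoother, the zero padding, the ½ factor and all function names are assumptions for illustration, not mermaid's implementation.

```python
import numpy as np


def gaussian_smooth_1d(f, sigma, h, axis):
    """Zero-padded, normalized Gaussian along one axis (hypothetical smoother)."""
    r = int(np.ceil(3.0 * sigma / h))
    w = np.exp(-0.5 * (np.arange(-r, r + 1) * h / sigma) ** 2)
    w /= w.sum()
    return np.apply_along_axis(lambda g: np.convolve(g, w, mode="same"), axis, f)


def scalar_to_vector_momentum(lam, I0, spacing):
    """m = lambda * grad(I0); returns an array of shape (dim, X, Y)."""
    grads = np.gradient(I0, *spacing)
    return np.stack([lam * g for g in grads])


def regularization_energy(lam, I0, spacing, sigma):
    """0.5 * <m, K m> with m built from the scalar momentum."""
    m = scalar_to_vector_momentum(lam, I0, spacing)
    v = np.empty_like(m)
    for c in range(m.shape[0]):
        vc = m[c]
        for ax, h in enumerate(spacing):  # separable smoothing, one axis at a time
            vc = gaussian_smooth_1d(vc, sigma, h, axis=ax)
        v[c] = vc
    return 0.5 * float(np.sum(m * v)) * float(np.prod(spacing))
```

A constant source image has zero gradient, so any scalar momentum on it yields zero vector momentum and zero energy; this is one reason scalar momentum only acts where the image has edges.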

-

-

class

mermaid.registration_networks.LDDMMShootingScalarMomentumImageNet(sz, spacing, params)[source]¶ Specialization of scalar-momentum LDDMM to image-based matching

-

class

mermaid.registration_networks.LDDMMShootingScalarMomentumImageLoss(lam, sz_sim, spacing_sim, sz_model, spacing_model, params)[source]¶ Specialization of the loss to scalar-momentum LDDMM on images

-

lam= None¶ scalar momentum

-

develop_smoother= None¶ smoother to go from momentum to velocity

-

compute_regularization_energy(I0_source, variables_from_forward_model, variables_from_optimizer=None)[source]¶ Computes the regularization energy from the initial vector momentum as obtained from the scalar momentum

Parameters: - I0_source – source image

- variables_from_forward_model – allows passing in additional variables (intended to pass variables between the forward model and the loss function)

- variables_from_optimizer – allows passing variables from the optimizer as a dict (e.g., the current iteration)

Returns: the regularization energy
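For shooting, the regularization only needs the initial momentum: along a geodesic the velocity norm is conserved, so the time-integrated kinetic energy reduces to its value at t = 0. Written out, with K the smoothing kernel (the role played by `develop_smoother`) and up to weighting constants that mermaid may apply differently:

```latex
m_0 = \lambda_0 \nabla I_0, \qquad v_0 = K \star m_0,
\qquad
E_{\mathrm{reg}} = \frac{1}{2}\int_0^1 \|v_t\|_V^2 \, dt
                 = \frac{1}{2}\|v_0\|_V^2
                 = \frac{1}{2}\int_\Omega m_0(x)\cdot v_0(x)\, dx .
```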

-

-

class

mermaid.registration_networks.LDDMMShootingScalarMomentumMapNet(sz, spacing, params, compute_inverse_map=False)[source]¶ Specialization of scalar-momentum LDDMM registration to map-based image matching

-

compute_inverse_map= None¶ If set to True, the inverse map is computed on the fly

-

create_integrator()[source]¶ Creates an integrator integrating the scalar conservation law for the scalar momentum, the advection equation for the image and the advection equation for the map.

Returns: returns this integrator
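In 1D, the three coupled equations named above can be written as a single right-hand side: the scalar momentum obeys λ_t + (λv)_x = 0, while the image and the map are advected by I_t + v I_x = 0 and φ_t + v φ_x = 0, with v = K⋆(λ I_x). The sketch below assumes `np.gradient` for spatial derivatives and a zero-padded Gaussian standing in for mermaid's smoother; the function names are hypothetical.

```python
import numpy as np


def gaussian_smooth(m, sigma, h):
    """Hypothetical stand-in for the momentum-to-velocity smoother K."""
    r = int(np.ceil(3.0 * sigma / h))
    w = np.exp(-0.5 * (np.arange(-r, r + 1) * h / sigma) ** 2)
    return np.convolve(m, w / w.sum(), mode="same")


def scalar_momentum_rhs(lam, I, phi, sigma, h):
    """Time derivatives of (lambda, I, phi) for a 1D scalar-momentum system."""
    I_x = np.gradient(I, h)
    v = gaussian_smooth(lam * I_x, sigma, h)  # v = K * (lambda * grad I)
    dlam = -np.gradient(lam * v, h)           # lambda_t + div(lambda v) = 0
    dI = -v * I_x                             # I_t + v . grad I = 0
    dphi = -v * np.gradient(phi, h)           # phi_t + D(phi) v = 0
    return dlam, dI, dphi
```

With zero scalar momentum the velocity vanishes and nothing moves; with momentum supported away from the boundary, the conservation-law form keeps the total λ (its discrete sum) unchanged.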

-

forward(phi, I0_source, phi_inv=None, variables_from_optimizer=None)[source]¶ Solves the scalar conservation law and the two advection equations forward in time.

Parameters: - phi – initial condition for the map

- I0_source – initial condition for the image

- phi_inv – initial condition for the inverse map

- variables_from_optimizer – allows passing variables from the optimizer as a dict (e.g., the current iteration)

Returns: the map at time tTo
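Solving forward in time means stepping such a right-hand side from t = 0 to the final time. A generic fourth-order Runge-Kutta stepper over a tuple of arrays is sketched below; the choice of RK4 and the name `rk4_integrate` are assumptions, since mermaid provides its own integrators. The usage advects an identity map with a constant velocity v, for which the exact solution φ(x, t) = x − vt is known.

```python
import numpy as np


def rk4_integrate(rhs, state, t_from, t_to, nr_steps):
    """Integrate d(state)/dt = rhs(state) from t_from to t_to with classic RK4.

    `state` is a tuple of numpy arrays; `rhs` returns a tuple of the same shapes.
    """
    dt = (t_to - t_from) / nr_steps
    for _ in range(nr_steps):
        k1 = rhs(state)
        k2 = rhs(tuple(s + 0.5 * dt * k for s, k in zip(state, k1)))
        k3 = rhs(tuple(s + 0.5 * dt * k for s, k in zip(state, k2)))
        k4 = rhs(tuple(s + dt * k for s, k in zip(state, k3)))
        state = tuple(
            s + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
            for s, a, b, c, d in zip(state, k1, k2, k3, k4)
        )
    return state


# Usage: advect the identity map, phi_t + v * phi_x = 0, with constant v
x = np.linspace(0.0, 1.0, 101)
h = x[1] - x[0]
v = 0.1
(phi,) = rk4_integrate(lambda st: (-v * np.gradient(st[0], h),), (x.copy(),), 0.0, 1.0, 10)
```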

-

-

class

mermaid.registration_networks.LDDMMShootingScalarMomentumMapLoss(lam, sz_sim, spacing_sim, sz_model, spacing_model, params)[source]¶ Specialization of the loss function to scalar-momentum LDDMM for maps.

-

lam= None¶ scalar momentum

-

develop_smoother= None¶ smoother to go from momentum to velocity for development configuration

-

compute_regularization_energy(I0_source, variables_from_forward_model, variables_from_optimizer=None)[source]¶ Computes the regularization energy from the initial vector momentum as computed from the scalar momentum

Parameters: - I0_source – initial image

- variables_from_forward_model – allows passing in additional variables (intended to pass variables between the forward model and the loss function)

- variables_from_optimizer – allows passing variables from the optimizer as a dict (e.g., the current iteration)

Returns: the regularization energy

-

-

class

mermaid.registration_networks.SVFScalarMomentumMapNet(sz, spacing, params, compute_inverse_map=False)[source]¶ Specialization of scalar-momentum SVF registration to map-based image matching

-

compute_inverse_map= None¶ If set to True, the inverse map is computed on the fly

-

create_integrator()[source]¶ Creates an integrator integrating the scalar momentum conservation law and an advection equation for the image

Returns: returns this integrator

-

forward(phi, I0_source, phi_inv=None, variables_from_optimizer=None)[source]¶ Solves the scalar momentum forward equation and returns the map at time tTo

Parameters: - phi – initial condition for the map

- I0_source – initial condition for the image

- phi_inv – initial condition for the inverse map

- variables_from_optimizer – allows passing variables from the optimizer as a dict (e.g., the current iteration)

Returns: the map at time tTo
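Because the velocity of an SVF model does not change in time, one way to obtain an inverse map is to solve the same advection equation with −v: if φ solves φ_t + v φ_x = 0 starting from the identity, the solution obtained with −v is its inverse. Whether mermaid computes `phi_inv` exactly this way is not stated here. The 1D sketch below (RK4 in time, `np.gradient` in space, hypothetical helper `advect_map`) checks the property numerically by composing the two maps.

```python
import numpy as np


def advect_map(phi, v, h, t_to=1.0, nr_steps=20):
    """Solve phi_t + v * phi_x = 0 for a stationary velocity v with RK4."""
    dt = t_to / nr_steps

    def rhs(p):
        return -v * np.gradient(p, h)

    for _ in range(nr_steps):
        k1 = rhs(phi)
        k2 = rhs(phi + 0.5 * dt * k1)
        k3 = rhs(phi + 0.5 * dt * k2)
        k4 = rhs(phi + dt * k3)
        phi = phi + dt / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
    return phi


x = np.linspace(0.0, 1.0, 201)
h = x[1] - x[0]
v = 0.05 * np.sin(2.0 * np.pi * x)     # stationary velocity, zero at the boundary

phi = advect_map(x.copy(), v, h)       # map from the velocity field
phi_inv = advect_map(x.copy(), -v, h)  # same equation with -v gives the inverse
composition = np.interp(phi_inv, x, phi)  # phi evaluated at phi_inv(x), close to x
```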

-

-

class

mermaid.registration_networks.SVFScalarMomentumMapLoss(lam, sz_sim, spacing_sim, sz_model, spacing_model, params)[source]¶ Specialization of the loss to scalar-momentum SVF on maps

-

lam= None¶ scalar momentum

-

develop_smoother= None¶ smoother to go from momentum to velocity

-

compute_regularization_energy(I0_source, variables_from_forward_model, variables_from_optimizer=None)[source]¶ Computes the regularization energy from the initial vector momentum as obtained from the scalar momentum

Parameters: - I0_source – source image

- variables_from_forward_model – allows passing in additional variables (intended to pass variables between the forward model and the loss function)

- variables_from_optimizer – allows passing variables from the optimizer as a dict (e.g., the current iteration)

Returns: the regularization energy

-