Regularization and Smoothing

mermaid primarily implements non-parametric registration approaches. These are over-parameterized and therefore require regularization; various smoothers and regularization options are available for this purpose.

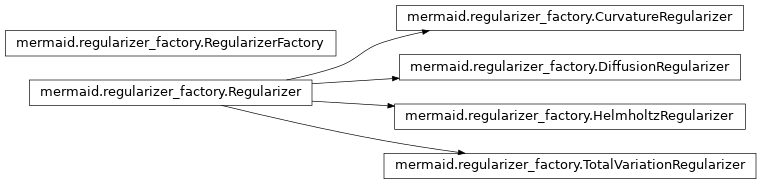

Regularizer factory

Package implementing general purpose regularizers.

-

class

mermaid.regularizer_factory.Regularizer(spacing, params)[source]¶ Abstract regularizer base class

-

spacing= None¶ spacing

-

fdt= None¶ finite differencing support

-

volumeElement= None¶ volume element, i.e., volume of a pixel/voxel

-

dim= None¶ spatial dimension

-

params= None¶ parameters

-

-

class

mermaid.regularizer_factory.DiffusionRegularizer(spacing, params)[source]¶ Implements a diffusion regularizer: the sum of squared gradients of the vector field components.
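As an illustration of this energy, the sketch below approximates \(\sum_c \int \|\nabla v_c\|^2\,dx\) with finite differences. It is a minimal NumPy sketch, not mermaid's implementation: the function name `diffusion_energy`, the use of `np.gradient`, and the scaling by the volume element (the product of the spacings) are assumptions.

```python
import numpy as np


def diffusion_energy(v, spacing):
    """Hypothetical sketch: sum of squared gradients of all components of v.

    v has shape (C, X[, Y[, Z]]); spacing holds one grid spacing per spatial axis.
    """
    vol_element = float(np.prod(spacing))  # volume of one pixel/voxel
    energy = 0.0
    for c in range(v.shape[0]):
        grads = np.gradient(v[c], *spacing)  # one derivative per spatial axis
        if v[c].ndim == 1:
            grads = [grads]  # np.gradient returns a bare array in 1D
        energy += sum(float(np.sum(g ** 2)) for g in grads)
    return energy * vol_element
```

Because the energy is quadratic in the gradients, doubling v quadruples it.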

-

class

mermaid.regularizer_factory.CurvatureRegularizer(spacing, params)[source]¶ Implements a curvature regularizer: the sum of squared Laplacians of the vector field components.
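A minimal NumPy sketch of the curvature energy, with each component's Laplacian approximated by applying `np.gradient` twice along every axis. The function name, this discretization, and the volume-element scaling are assumptions rather than mermaid's implementation. Unlike the diffusion energy, this energy does not penalize affine (linear) fields, since their second derivatives vanish.

```python
import numpy as np


def curvature_energy(v, spacing):
    """Hypothetical sketch: sum of squared Laplacians of the components of v."""
    vol_element = float(np.prod(spacing))
    energy = 0.0
    for c in range(v.shape[0]):
        lap = np.zeros(v[c].shape)
        for axis, h in enumerate(spacing):
            # second derivative along one axis, from two first-derivative passes
            first = np.gradient(v[c], h, axis=axis)
            lap += np.gradient(first, h, axis=axis)
        energy += float(np.sum(lap ** 2))
    return energy * vol_element
```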

-

class

mermaid.regularizer_factory.TotalVariationRegularizer(spacing, params)[source]¶ Implements a total variation regularizer: the sum of the Euclidean norms of the gradients of the vector field components.
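A minimal NumPy sketch of the total variation energy: at every grid point the Euclidean norm of each component's gradient is taken, then summed. The function name, the central differences from `np.gradient`, and the volume-element scaling are assumptions. Practical implementations often add a small epsilon inside the square root to keep it differentiable at zero gradient; it is omitted here.

```python
import numpy as np


def total_variation_energy(v, spacing):
    """Hypothetical sketch: sum over components of the pointwise gradient norms."""
    vol_element = float(np.prod(spacing))
    energy = 0.0
    for c in range(v.shape[0]):
        grads = np.gradient(v[c], *spacing)
        if v[c].ndim == 1:
            grads = [grads]  # np.gradient returns a bare array in 1D
        # Euclidean norm of the gradient vector at every grid point
        grad_norm = np.sqrt(sum(g ** 2 for g in grads))
        energy += float(np.sum(grad_norm))
    return energy * vol_element
```

In contrast to the diffusion energy, this energy scales linearly: doubling v doubles it.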

-

class

mermaid.regularizer_factory.HelmholtzRegularizer(spacing, params)[source]¶ Implements a Helmholtz regularizer \(Reg[v] = \langle\gamma v -\alpha \Delta v, \gamma v -\alpha \Delta v\rangle\)
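The Helmholtz energy can be sketched componentwise in NumPy: apply \(\gamma v - \alpha \Delta v\) to each component and sum the squares. The function name, the Laplacian discretization (two `np.gradient` passes per axis), and the volume-element scaling are assumptions, not mermaid's implementation.

```python
import numpy as np


def helmholtz_energy(v, spacing, alpha, gamma):
    """Hypothetical sketch of <gamma v - alpha Lap v, gamma v - alpha Lap v>."""
    vol_element = float(np.prod(spacing))
    energy = 0.0
    for c in range(v.shape[0]):
        lap = np.zeros(v[c].shape)
        for axis, h in enumerate(spacing):
            lap += np.gradient(np.gradient(v[c], h, axis=axis), h, axis=axis)
        lv = gamma * v[c] - alpha * lap  # Helmholtz operator applied to v_c
        energy += float(np.sum(lv ** 2))
    return energy * vol_element
```

With \(\alpha = 0\) the energy reduces to \(\gamma^2\) times the squared magnitude of v, so gamma penalizes magnitude and alpha penalizes second derivatives, as the attribute descriptions state.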

-

alpha= None¶ penalty for second derivative

-

gamma= None¶ penalty for magnitude

-

-

class

mermaid.regularizer_factory.RegularizerFactory(spacing)[source]¶ Regularizer factory to instantiate a regularizer by name.

-

spacing= None¶ spacing

-

dim= None¶ spatial dimension

-

default_regularizer_type= None¶ type of the regularizer used by default

-

set_default_regularizer_type_to_total_variation()[source]¶ Sets the default regularizer type to totalVariation

-

create_regularizer_by_name(regularizerType, params)[source]¶ Creates a regularizer by name. This is a convenience function for cases where there should be no free choice of regularizer (because a particular one is required for a model).

Parameters: - regularizerType – name of the regularizer: helmholtz|totalVariation|diffusion|curvature

- params – ParameterDict instance

Returns: a regularizer which can compute the regularization energy
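Name-based creation can be pictured as a dictionary lookup from the documented names to classes. The sketch below is hypothetical: `RegularizerFactorySketch` and the `*Stub` classes are placeholders, not mermaid classes, and a plain dict stands in for a ParameterDict. Only the method name and the four regularizer names come from the documentation above.

```python
class RegularizerStub:
    """Hypothetical stand-in for a regularizer class; not mermaid's API."""

    def __init__(self, spacing, params):
        self.spacing = spacing
        self.params = params


class HelmholtzStub(RegularizerStub):
    pass


class TotalVariationStub(RegularizerStub):
    pass


class DiffusionStub(RegularizerStub):
    pass


class CurvatureStub(RegularizerStub):
    pass


class RegularizerFactorySketch:
    # documented regularizer names mapped to (hypothetical) classes
    REGULARIZERS = {
        "helmholtz": HelmholtzStub,
        "totalVariation": TotalVariationStub,
        "diffusion": DiffusionStub,
        "curvature": CurvatureStub,
    }

    def __init__(self, spacing):
        self.spacing = spacing
        self.dim = len(spacing)

    def create_regularizer_by_name(self, regularizer_type, params):
        """Look up the class registered under regularizer_type and instantiate it."""
        try:
            cls = self.REGULARIZERS[regularizer_type]
        except KeyError:
            raise ValueError(
                f"unknown regularizer {regularizer_type!r}; "
                f"expected one of {sorted(self.REGULARIZERS)}"
            ) from None
        return cls(self.spacing, params)
```

Raising on an unknown name, rather than silently falling back to a default, makes typos in configuration files fail early.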

-

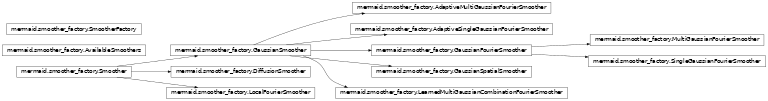

Smoother factory

This package implements various types of smoothers.

-

mermaid.smoother_factory.get_compatible_state_dict_for_module(state_dict, module_name, target_state_dict)[source]¶

-

class

mermaid.smoother_factory.Smoother(sz, spacing, params)[source]¶ Abstract base class defining the general smoother interface.

-

sz= None¶ image size

-

spacing= None¶ image spacing

-

fdt= None¶ finite difference support for torch

-

volumeElement= None¶ volume of pixel/voxel

-

dim= None¶ dimension

-

params= None¶ ParameterDict() parameter object holding various configuration options

-

multi_gaussian_optimizer_params= None¶ parameters that will be exposed to the optimizer

-

ISource= None¶ For smoothers that make use of the map, stores the source image to which the map can be applied

-

batch_size= None¶ batch size of what is being smoothed

-

associate_parameters_with_module(module)[source]¶ Associates parameters that should be optimized with the given module.

Parameters: module – module to associate the parameters to Returns: set of parameters that were associated

-

write_parameters_to_settings()[source]¶ If called, takes the current parameter state and writes it back into the initial setting configuration. This should be called from the model that uses the smoother and will, for example, allow optimized weight parameters to be written back into the smoother.

-

get_penalty()[source]¶ Can be overridden by a smoother to return a custom penalty which will be added to the optimization cost, for example to put penalties on smoother parameters that are being optimized over.

Returns: scalar value; penalty for smoother

-

set_state_dict(state_dict)[source]¶ If the smoother contains a torch state-dict, this function allows setting it externally (to initialize as needed). This is typically not needed as it will be set via a registration model for example, but can be useful for external testing of the smoother. This is also different from smoother parameters and only affects parameters that may be optimized over.

Parameters: state_dict – OrderedDict containing the state information Returns: n/a

-

get_state_dict()[source]¶ If the smoother contains a torch state-dict, this function returns it

Returns: state dict as an OrderedDict

-

set_source_image(ISource)[source]¶ Sets the source image. Useful for smoothers that take the map as input and need to compute a warped source image.

Parameters: ISource – source image Returns: n/a

-

get_optimization_parameters()[source]¶ Returns the optimizer parameters for a smoother. Returns None if there are none, or if optimization is disabled.

Returns: optimizer parameters

-

get_custom_optimizer_output_string()[source]¶ Returns a customized string describing a smoother’s setting. Will be displayed during optimization. Useful to override if optimizing over smoother parameters.

Returns: string

-

get_custom_optimizer_output_values()[source]¶ Returns a customized dictionary with additional values describing a smoother’s setting. Will become part of the optimization history. Useful to override if optimizing over smoother parameters.

Returns: dictionary

-

apply_smooth(v, vout=None, pars={}, variables_from_optimizer=None, smooth_to_compute_regularizer_energy=False, clampCFL_dt=None)[source]¶ Abstract method to smooth a vector field. Only this method should be overridden in derived classes.

Parameters: - v – input field to smooth BxCxXxYxZ

- vout – if not None then result is returned in this variable

- pars – dictionary that can contain various extra variables; for a smoother these will, for example, be the current image ‘I’ or the current map ‘phi’. Typically not used.

- variables_from_optimizer – variables that can be passed from the optimizer (for example iteration count)

- smooth_to_compute_regularizer_energy – in certain cases, the smoothing used to compute a smooth velocity field should differ from the smoothing used for the regularizer (this flag allows smoother implementations to react to this difference)

- clampCFL_dt – if specified, the result of the smoother is clamped according to the CFL condition, based on the given time step

Returns: should return the smoothed vector field, of dimension BxCxXxYxZ

-

smooth(v, vout=None, pars={}, variables_from_optimizer=None, smooth_to_compute_regularizer_energy=False, clampCFL_dt=None, multi_output=False)[source]¶ Smooths a vector field of dimension BxCxXxYxZ.

Parameters: - v – vector field to smooth BxCxXxYxZ

- vout – if not None then result is returned in this variable

- pars – dictionary that can contain various extra variables; for a smoother these will, for example, be the current image ‘I’ or the current map ‘phi’. Typically not used.

- variables_from_optimizer – variables that can be passed from the optimizer (for example iteration count)

- smooth_to_compute_regularizer_energy – in certain cases, the smoothing used to compute a smooth velocity field should differ from the smoothing used for the regularizer (this flag allows smoother implementations to react to this difference)

- clampCFL_dt – if specified, the result of the smoother is clamped according to the CFL condition, based on the given time step

Returns: smoothed vector field BxCxXxYxZ
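The split between `smooth` (the public entry point) and `apply_smooth` (the only method derived classes override) follows the template-method pattern. The sketch below is hypothetical: `SmootherSketch` and `BoxSmootherSketch` are not mermaid classes, NumPy stands in for torch, and options that mermaid's `smooth` handles, such as `clampCFL_dt` and `multi_output`, are omitted.

```python
import numpy as np


class SmootherSketch:
    """Hypothetical base class: smooth() is the public entry point."""

    def smooth(self, v, vout=None):
        result = self.apply_smooth(v)
        if vout is not None:
            vout[...] = result  # write into the caller-provided buffer
            return vout
        return result

    def apply_smooth(self, v):
        raise NotImplementedError("derived classes implement apply_smooth")


class BoxSmootherSketch(SmootherSketch):
    """Toy derived class: 3-point periodic moving average along the last axis."""

    def apply_smooth(self, v):
        return (np.roll(v, 1, axis=-1) + v + np.roll(v, -1, axis=-1)) / 3.0
```

Keeping shared bookkeeping (such as output buffers) in the base class means each new smoother only has to supply the filtering step itself.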

-

-

class

mermaid.smoother_factory.DiffusionSmoother(sz, spacing, params)[source]¶ Smoothing by solving the diffusion equation iteratively.

-

iter= None¶ number of iterations

-

set_iter(iter)[source]¶ Set the number of iterations for the diffusion smoother

Parameters: iter – number of iterations Returns: the number of iterations

-

apply_smooth(v, vout=None, pars={}, variables_from_optimizer=None, smooth_to_compute_regularizer_energy=False, clampCFL_dt=None)[source]¶ Smooths a scalar field of dimension XxYxZ

Parameters: - v – input image

- vout – if not None returns the result in this variable

- pars – dictionary that can contain various extra variables; for a smoother these will, for example, be the current image ‘I’ or the current map ‘phi’. Typically not used.

- variables_from_optimizer – variables that can be passed from the optimizer (for example iteration count)

Returns: smoothed image
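Iterative diffusion smoothing can be sketched as explicit time steps of the heat equation \(\partial_t u = \Delta u\). This NumPy sketch is an assumption about the general technique, not mermaid's scheme: it uses unit grid spacing, periodic boundaries via `np.roll`, and a default time step of half the explicit stability limit \(1/(2d)\). The function name `diffusion_smooth` is hypothetical.

```python
import numpy as np


def diffusion_smooth(f, n_iter=10, dt=None):
    """Hypothetical sketch: explicit heat-equation steps on a scalar field f.

    Assumes unit grid spacing and periodic boundaries (via np.roll).
    """
    dim = f.ndim
    if dt is None:
        dt = 1.0 / (4 * dim)  # half the explicit stability limit 1/(2*dim)
    u = np.asarray(f, dtype=float).copy()
    for _ in range(n_iter):
        # discrete Laplacian: sum over axes of u[i+1] - 2 u[i] + u[i-1]
        lap = sum(np.roll(u, 1, axis=a) + np.roll(u, -1, axis=a) - 2.0 * u
                  for a in range(dim))
        u = u + dt * lap
    return u
```

More iterations give wider smoothing: running the heat equation to time \(t = n_{iter}\,dt\) roughly corresponds to convolving with a Gaussian of variance \(2t\) per axis.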

-

-

class

mermaid.smoother_factory.GaussianSmoother(sz, spacing, params)[source]¶ Gaussian smoothing in the spatial domain (hence slow in high dimensions, at least on the CPU).

Todo

Clean up the two implementations of the Gaussian smoothers (spatial and Fourier). In particular, ensure that all computations are done in physical coordinates; for now they are done in \([-1,1]^d\).

-

class

mermaid.smoother_factory.GaussianSpatialSmoother(sz, spacing, params)[source]¶

-

k_sz_h= None¶ size of half the smoothing kernel

-

filter= None¶ smoothing filter

-

set_k_sz_h(k_sz_h)[source]¶ Set the size of half the smoothing kernel

Parameters: k_sz_h – size of half the kernel as array

-

get_k_sz_h()[source]¶ Returns the size of half the smoothing kernel

Returns: half the smoothing kernel size

-

apply_smooth(v, vout=None, pars={}, variables_from_optimizer=None, smooth_to_compute_regularizer_energy=False, clampCFL_dt=None)[source]¶ Smooth the scalar field using Gaussian smoothing in the spatial domain

Parameters: - v – image to smooth

- vout – if not None returns the result in this variable

- pars – dictionary that can contain various extra variables; for a smoother these will, for example, be the current image ‘I’ or the current map ‘phi’. Typically not used.

- variables_from_optimizer – variables that can be passed from the optimizer (for example iteration count)

Returns: smoothed image
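Spatial Gaussian smoothing with a kernel of half-size `k_sz_h` (full width \(2 k_{sz,h} + 1\)) can be sketched as separable 1D convolutions, one pass per axis. This NumPy sketch is not mermaid's implementation: the helper names, the edge-replicating boundary handling, and measuring `sigma` in pixels are assumptions. The work per pixel grows with the kernel width, which is why spatial smoothing gets expensive for wide kernels.

```python
import numpy as np


def gaussian_kernel_1d(k_sz_h, sigma):
    """Sampled 1D Gaussian with 2*k_sz_h + 1 taps, normalized to sum to one."""
    x = np.arange(-k_sz_h, k_sz_h + 1, dtype=float)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()  # normalization preserves constant fields


def gaussian_spatial_smooth(f, k_sz_h, sigma):
    """Hypothetical sketch: separable spatial Gaussian smoothing of a scalar field."""
    out = np.asarray(f, dtype=float)
    k = gaussian_kernel_1d(k_sz_h, sigma)
    for axis in range(out.ndim):
        # replicate boundary values, then keep only fully overlapping positions
        pad = [(k_sz_h, k_sz_h) if a == axis else (0, 0) for a in range(out.ndim)]
        padded = np.pad(out, pad, mode="edge")
        out = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), axis, padded)
    return out
```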

-

-

class

mermaid.smoother_factory.GaussianFourierSmoother(sz, spacing, params)[source]¶ Performs Gaussian smoothing via convolution in the Fourier domain. For large dimensions, this is much faster on the CPU than spatial Gaussian smoothing.

-

FFilter= None¶ filter in Fourier domain

-

apply_smooth(v, vout=None, pars={}, variables_from_optimizer=None, smooth_to_compute_regularizer_energy=False, clampCFL_dt=None)[source]¶ Smooth the scalar field using Gaussian smoothing in the Fourier domain

Parameters: - v – image to smooth

- vout – if not None returns the result in this variable

- pars – dictionary that can contain various extra variables; for a smoother these will, for example, be the current image ‘I’ or the current map ‘phi’. Typically not used.

- variables_from_optimizer – variables that can be passed from the optimizer (for example iteration count)

Returns: smoothed image
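Convolution with a Gaussian becomes a pointwise multiplication in the Fourier domain: with frequencies \(\xi\) in cycles per unit length, the transform of a unit-mass Gaussian with standard deviation \(\sigma\) is \(\exp(-2\pi^2\sigma^2\xi^2)\). The NumPy sketch below is an assumption about the general technique, not mermaid's implementation: it uses the FFT (hence periodic boundaries), and the function name is hypothetical.

```python
import numpy as np


def gaussian_fourier_smooth(f, sigma, spacing=None):
    """Hypothetical sketch: Gaussian smoothing of a scalar field via the FFT.

    Assumes periodic boundary conditions; sigma is in the units of spacing.
    """
    if spacing is None:
        spacing = (1.0,) * f.ndim
    transfer = np.ones(f.shape)
    for axis, (n, h) in enumerate(zip(f.shape, spacing)):
        xi = np.fft.fftfreq(n, d=h)  # frequencies in cycles per unit length
        shape = [1] * f.ndim
        shape[axis] = n
        # Fourier transform of a unit-mass Gaussian: exp(-2 pi^2 sigma^2 xi^2)
        transfer = transfer * np.exp(-2.0 * np.pi ** 2 * sigma ** 2 * xi.reshape(shape) ** 2)
    return np.real(np.fft.ifftn(np.fft.fftn(f) * transfer))
```

The cost is dominated by the FFTs, \(O(N \log N)\), independent of \(\sigma\), which is why this route pays off for large kernels.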

-

-

class

mermaid.smoother_factory.AdaptiveSingleGaussianFourierSmoother(sz, spacing, params)[source]¶ Performs Gaussian smoothing via convolution in the Fourier domain. For large dimensions, this is much faster on the CPU than spatial Gaussian smoothing.

-

gaussianStd= None¶ standard deviation of Gaussian

-

gaussianStd_min= None¶ minimal allowed standard deviation during optimization

-

optimize_over_smoother_parameters= None¶ determines if we should optimize over the smoother parameters

-

associate_parameters_with_module(module)[source]¶ Associates parameters that should be optimized with the given module.

Parameters: module – module to associate the parameters to Returns: set of parameters that were associated

-

get_custom_optimizer_output_string()[source]¶ Returns a customized string describing a smoother’s setting. Will be displayed during optimization. Useful to override if optimizing over smoother parameters.

Returns: string

-

write_parameters_to_settings()[source]¶ If called, takes the current parameter state and writes it back into the initial setting configuration. This should be called from the model that uses the smoother and will, for example, allow optimized weight parameters to be written back into the smoother.

-

get_optimization_parameters()[source]¶ Returns the optimizer parameters for a smoother. Returns None if there are none, or if optimization is disabled.

Returns: optimizer parameters

-

set_gaussian_std(gstd)[source]¶ Set the standard deviation of the Gaussian filter

Parameters: gstd – standard deviation

-

get_gaussian_std()[source]¶ Return the standard deviation of the Gaussian filter

Returns: standard deviation of Gaussian filter

-

apply_smooth(v, vout=None, pars={}, variables_from_optimizer=None, smooth_to_compute_regularizer_energy=False, clampCFL_dt=None)[source]¶ Smooth the scalar field using Gaussian smoothing in the Fourier domain

Parameters: - v – image to smooth

- vout – if not None returns the result in this variable

- pars – dictionary that can contain various extra variables; for a smoother these will, for example, be the current image ‘I’ or the current map ‘phi’. Typically not used.

- variables_from_optimizer – variables that can be passed from the optimizer (for example iteration count)

Returns: smoothed image

-

-

class

mermaid.smoother_factory.SingleGaussianFourierSmoother(sz, spacing, params)[source]¶ Performs Gaussian smoothing via convolution in the Fourier domain. For large dimensions, this is much faster on the CPU than spatial Gaussian smoothing.

-

gaussianStd= None¶ standard deviation of Gaussian

-

gaussianStd_min= None¶ minimal allowed standard deviation during optimization

-

-

class

mermaid.smoother_factory.MultiGaussianFourierSmoother(sz, spacing, params)[source]¶ Performs multi-Gaussian smoothing via convolution in the Fourier domain. For large dimensions, this is much faster on the CPU than spatial Gaussian smoothing.

-

multi_gaussian_weights= None¶ weights for the Gaussians
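One way to picture multi-Gaussian smoothing is a single kernel \(\sum_k w_k G_{\sigma_k}\), applied in the Fourier domain as a weighted sum of Gaussian transfer functions. The NumPy sketch below assumes this combination, isotropic Gaussians, periodic boundaries, and weights summing to one; the function name is hypothetical and mermaid's implementation may differ.

```python
import numpy as np


def multi_gaussian_fourier_smooth(f, stds, weights, spacing=None):
    """Hypothetical sketch: smooth f with sum_k w_k * G_{sigma_k} via the FFT."""
    weights = np.asarray(weights, dtype=float)
    if not np.isclose(weights.sum(), 1.0):
        raise ValueError("weights are assumed to sum to one")
    if spacing is None:
        spacing = (1.0,) * f.ndim
    # squared frequency magnitude |xi|^2 on the FFT grid
    freqs = [np.fft.fftfreq(n, d=h) for n, h in zip(f.shape, spacing)]
    xi2 = sum(g ** 2 for g in np.meshgrid(*freqs, indexing="ij"))
    # weighted sum of the Gaussian transfer functions exp(-2 pi^2 sigma^2 |xi|^2)
    transfer = sum(w * np.exp(-2.0 * np.pi ** 2 * s ** 2 * xi2)
                   for w, s in zip(weights, stds))
    return np.real(np.fft.ifftn(np.fft.fftn(f) * transfer))
```

Because the weights sum to one, the combined transfer function equals one at zero frequency, so constant fields and the mean are preserved.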

-

-

class

mermaid.smoother_factory.AdaptiveMultiGaussianFourierSmoother(sz, spacing, params)[source]¶ Adaptive multi-Gaussian smoother which allows optimizing over weights and standard deviations

-

multi_gaussian_stds= None¶ standard deviations of Gaussians

-

gaussianStd_min= None¶ minimal allowed standard deviation during optimization

-

omt_weight_penalty= None¶ penalty factor for the optimal mass transport term

-

omt_use_log_transformed_std= None¶ if set to true the standard deviations are log transformed for the OMT computation

-

optimize_over_smoother_stds= None¶ determines if we should optimize over the smoother standard deviations

-

optimize_over_smoother_weights= None¶ determines if we should optimize over the smoother global weights

-

nr_of_gaussians= None¶ number of Gaussians

-

multi_gaussian_weights= None¶ global weights for the Gaussians

-

gaussianWeight_min= None¶ minimal allowed weight during optimization

-

gaussian_fourier_filter_generator= None¶ creates the smoothed vector fields

-

omt_power= None¶ optimal mass transport power

-

associate_parameters_with_module(module)[source]¶ Associates parameters that should be optimized with the given module.

Parameters: module – module to associate the parameters to Returns: set of parameters that were associated

-

get_custom_optimizer_output_string()[source]¶ Returns a customized string describing a smoother’s setting. Will be displayed during optimization. Useful to override if optimizing over smoother parameters.

Returns: string

-

get_custom_optimizer_output_values()[source]¶ Returns a customized dictionary with additional values describing a smoother’s setting. Will become part of the optimization history. Useful to override if optimizing over smoother parameters.

Returns: dictionary

-

set_state_dict(state_dict)[source]¶ If the smoother contains a torch state-dict, this function allows setting it externally (to initialize as needed). This is typically not needed as it will be set via a registration model for example, but can be useful for external testing of the smoother. This is also different from smoother parameters and only affects parameters that may be optimized over.

Parameters: state_dict – OrderedDict containing the state information Returns: n/a

-

write_parameters_to_settings()[source]¶ If called, takes the current parameter state and writes it back into the initial setting configuration. This should be called from the model that uses the smoother and will, for example, allow optimized weight parameters to be written back into the smoother.

-

set_gaussian_weights(gweights)[source]¶ Sets the weights for the multi-Gaussian smoother

Parameters: gweights – vector of weights Returns: n/a

-

get_gaussian_weights()[source]¶ Returns the weights for the multi-Gaussian smoother

Returns: vector of weights

-

set_gaussian_stds(gstds)[source]¶ Sets the standard deviations of the Gaussian filters

Parameters: gstds – standard deviations

-

get_gaussian_stds()[source]¶ Return the standard deviations of the Gaussian filters

Returns: standard deviations of the Gaussian filters

-

get_penalty()[source]¶ Can be overridden by a smoother to return a custom penalty which will be added to the optimization cost, for example to put penalties on smoother parameters that are being optimized over.

Returns: scalar value; penalty for smoother

-

apply_smooth(v, vout=None, pars={}, variables_from_optimizer=None, smooth_to_compute_regularizer_energy=False, clampCFL_dt=None)[source]¶ Smooth the scalar field using Gaussian smoothing in the Fourier domain

Parameters: - v – image to smooth

- vout – if not None returns the result in this variable

- pars – dictionary that can contain various extra variables; for a smoother these will, for example, be the current image ‘I’ or the current map ‘phi’. Typically not used.

- variables_from_optimizer – variables that can be passed from the optimizer (for example iteration count)

Returns: smoothed image

-

-

class

mermaid.smoother_factory.LearnedMultiGaussianCombinationFourierSmoother(sz, spacing, params)[source]¶ Adaptive multi-Gaussian Fourier smoother. Allows optimization over weights and standard deviations

-

multi_gaussian_stds= None¶ standard deviations of Gaussians

-

gaussianStd_min= None¶ minimal allowed standard deviation during optimization

-

smallest_gaussian_std= None¶ The smallest of the standard deviations

-

optimize_over_smoother_stds= None¶ determines if we should optimize over the smoother standard deviations

-

optimize_over_smoother_weights= None¶ determines if we should optimize over the smoother global weights

-

scale_global_parameters= None¶ If set to True, the global parameters are scaled to make sure energies decay similarly to those of the deep-network weight estimation

-

optimize_over_deep_network= None¶ determines if we should optimize over the smoother global weights

If set to true then the network is evaluated (should have been loaded from a previously computed optimized state), but the network weights are not being optimized over

-

freeze_parameters= None¶ Freezes parameters; this, for example, allows optimizing for a few extra steps without changing their current value

-

start_optimize_over_smoother_parameters_at_iteration= None¶ at what iteration the optimization over weights or stds should start

-

start_optimize_over_nn_smoother_parameters_at_iteration= None¶ at what iteration the optimization over nn parameters should start

-

nr_of_gaussians= None¶ number of Gaussians

-

multi_gaussian_weights= None¶ global weights for the Gaussians

-

gaussianWeight_min= None¶ minimal allowed weight during optimization

-

ws= None¶ learned mini-network to predict multi-Gaussian smoothing weights

-

global_parameter_scaling_factor= None¶ scaling factor for the global parameters, to make sure energies decay similarly to those of the deep-network weight estimation

-

use_multi_gaussian_regularization= None¶ If set to true then the regularization for w_K_w or sqrt_w_K_sqrt_w will use multi-Gaussian smoothing (not the velocity) of the deep smoother

-

load_dnn_parameters_from_this_file= None¶ To allow pre-initializing a network

-

omt_weight_penalty= None¶ penalty factor for the optimal mass transport term

-

omt_use_log_transformed_std= None¶ if set to true the standard deviations are log transformed for the OMT computation

-

omt_power= None¶ power for the optimal mass transport term

-

associate_parameters_with_module(module)[source]¶ Associates parameters that should be optimized with the given module.

Parameters: module – module to associate the parameters to Returns: set of parameters that were associated

-

get_custom_optimizer_output_string()[source]¶ Returns a customized string describing a smoother’s setting. Will be displayed during optimization. Useful to override if optimizing over smoother parameters.

Returns: string

-

get_custom_optimizer_output_values()[source]¶ Returns a customized dictionary with additional values describing a smoother’s setting. Will become part of the optimization history. Useful to override if optimizing over smoother parameters.

Returns: dictionary

-

write_parameters_to_settings()[source]¶ If called, takes the current parameter state and writes it back into the initial setting configuration. This should be called from the model that uses the smoother and will, for example, allow optimized weight parameters to be written back into the smoother.

-

set_state_dict(state_dict)[source]¶ If the smoother contains a torch state-dict, this function allows setting it externally (to initialize as needed). This is typically not needed as it will be set via a registration model for example, but can be useful for external testing of the smoother. This is also different from smoother parameters and only affects parameters that may be optimized over.

Parameters: state_dict – OrderedDict containing the state information Returns: n/a

-

set_gaussian_weights(gweights)[source]¶ Sets the weights for the multi-Gaussian smoother

Parameters: gweights – vector of weights Returns: n/a

-

get_gaussian_weights()[source]¶ Returns the weights for the multi-Gaussian smoother

Returns: vector of weights

-

set_gaussian_stds(gstds)[source]¶ Sets the standard deviations of the Gaussian filters

Parameters: gstds – standard deviations

-

get_gaussian_stds()[source]¶ Return the standard deviations of the Gaussian filters

Returns: standard deviations of the Gaussian filters

-

get_penalty()[source]¶ Can be overridden by a smoother to return a custom penalty which will be added to the optimization cost, for example to put penalties on smoother parameters that are being optimized over.

Returns: scalar value; penalty for smoother

-

apply_smooth(v, vout=None, pars={}, variables_from_optimizer=None, smooth_to_compute_regularizer_energy=False, clampCFL_dt=None)[source]¶ Smooth the scalar field using Gaussian smoothing in the Fourier domain

Parameters: - v – image to smooth

- vout – if not None returns the result in this variable

- pars – dictionary that can contain various extra variables; for a smoother these will, for example, be the current image ‘I’ or the current map ‘phi’. Typically not used.

- variables_from_optimizer – variables that can be passed from the optimizer (for example iteration count)

Returns: smoothed image

-

-

class

mermaid.smoother_factory.LocalFourierSmoother(sz, spacing, params)[source]¶ Performs multi-Gaussian smoothing via convolution in the Fourier domain. For large dimensions, this is much faster on the CPU than spatial Gaussian smoothing.

The local Fourier smoother is designed for the optimization setting, in which only the local Fourier smoother needs to be used; it currently does not support optimization over the global standard deviations or weights.

It can also be used jointly with the deep smoother. In this case, import_outside_var should be called during initialization to share the variables with the deep smoother.

-

get_custom_optimizer_output_string()[source]¶ Returns a customized string describing a smoother’s setting. Will be displayed during optimization. Useful to override if optimizing over smoother parameters.

Returns: string

-

get_custom_optimizer_output_values()[source]¶ Returns a customized dictionary with additional values describing a smoother’s setting. Will become part of the optimization history. Useful to override if optimizing over smoother parameters.

Returns: dictionary

-

import_outside_var(multi_gaussian_stds, multi_gaussian_weights, gaussian_fourier_filter_generator, loss)[source]¶ Handles situations such as optimization over the multi-Gaussian standard deviations and weights. The gaussian_fourier_filter_generator and the loss are also passed in to reduce overhead. This function only needs to be called once, during initialization.

Parameters: - multi_gaussian_stds –

- multi_gaussian_weights –

- weighting_type –

- gaussian_fourier_filter_generator –

- loss –

Returns:

-

get_penalty()[source]¶ Can be overridden by a smoother to return a custom penalty which will be added to the optimization cost, for example to put penalties on smoother parameters that are being optimized over.

Returns: scalar value; penalty for smoother

-

apply_smooth(v, vout=None, pars={}, variables_from_optimizer=None, smooth_to_compute_regularizer_energy=False, clampCFL_dt=None)[source]¶ Abstract method to smooth a vector field. Only this method should be overridden in derived classes.

Parameters: - v – input field to smooth BxCxXxYxZ

- vout – if not None then result is returned in this variable

- pars – dictionary that can contain various extra variables; for a smoother these will, for example, be the current image ‘I’ or the current map ‘phi’. Typically not used.

- variables_from_optimizer – variables that can be passed from the optimizer (for example iteration count)

- smooth_to_compute_regularizer_energy – in certain cases, the smoothing used to compute a smooth velocity field should differ from the smoothing used for the regularizer (this flag allows smoother implementations to react to this difference)

- clampCFL_dt – if specified, the result of the smoother is clamped according to the CFL condition, based on the given time step

Returns: should return the smoothed vector field, of dimension BxCxXxYxZ

-

-

class

mermaid.smoother_factory.AvailableSmoothers[source]¶

-

smoothers= None¶ dictionary defining all the smoothers

-

-

class

mermaid.smoother_factory.SmootherFactory(sz, spacing)[source]¶ Factory to quickly create different types of smoothers.

-

spacing= None¶ spatial spacing of image

-

sz= None¶ size of image (X,Y,…); does not include batch-size or number of channels

-

dim= None¶ dimension of image

-

default_smoother_type= None¶ default smoother used for smoothing

-

get_smoothers()[source]¶ Returns all available smoothers as a dictionary with the smoother names as keys and tuple entries of the form (smootherclass, explanation_string)

Returns: the smoother dictionary

-

print_available_smoothers()[source]¶ Prints the smoothers that are available and can be created with create_smoother

-

set_default_smoother_type_to_gaussian()[source]¶ Set the default smoother type to Gaussian smoothing in the Fourier domain

-

set_default_smoother_type_to_diffusion()[source]¶ Set the default smoother type to diffusion smoothing

-

set_default_smoother_type_to_gaussianSpatial()[source]¶ Set the default smoother type to Gaussian smoothing in the spatial domain

-

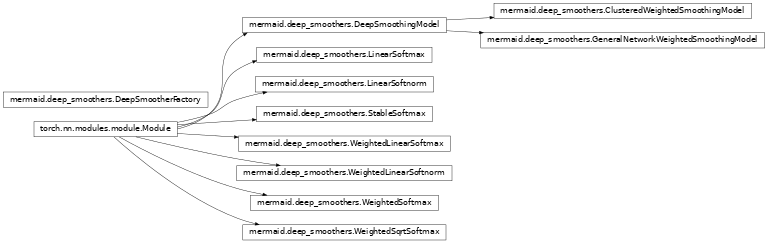

Deep smoothers

-

mermaid.deep_smoothers.weighted_softmax(input, dim=None, weights=None)[source]¶ Applies a weighted softmax function.

Weighted_softmax is defined as:

\(\mathrm{weighted\_softmax}(x)_i = \frac{w_i \exp(x_i)}{\sum_j w_j \exp(x_j)}\)

It is applied to all slices along dim, and will rescale them so that the elements lie in the range (0, 1) and sum to 1.

See WeightedSoftmax for more details.

Parameters: - input (Variable) – input

- dim (int) – A dimension along which weighted_softmax will be computed.

-

class

mermaid.deep_smoothers.WeightedSoftmax(dim=None, weights=None)[source]¶ Applies the WeightedSoftmax function to an n-dimensional input Tensor rescaling them so that the elements of the n-dimensional output Tensor lie in the range (0,1) and sum to 1

WeightedSoftmax is defined as \(f_i(x) = \frac{w_i\exp(x_i)}{\sum_j w_j\exp(x_j)}\)

It is assumed that w_i>0 and that the weights sum up to one. The effect of this weighting is that for a zero input (x=0) the output for f_i(x) will be w_i. I.e., we can obtain a default output which is not 1/n.
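The zero-input property follows from the formula: at \(x = 0\) every exponential equals one, leaving \(w_i / \sum_j w_j = w_i\). The NumPy sketch below evaluates the formula directly; NumPy stands in for torch, and `np_weighted_softmax` is a hypothetical helper, not `mermaid.deep_smoothers.weighted_softmax`. Subtracting the maximum before exponentiating is an assumption added for numerical safety; it cancels in the ratio.

```python
import numpy as np


def np_weighted_softmax(x, weights, axis=0):
    """Hypothetical sketch of f_i(x) = w_i exp(x_i) / sum_j w_j exp(x_j)."""
    x = np.asarray(x, dtype=float)
    shape = [1] * x.ndim
    shape[axis] = -1
    w = np.asarray(weights, dtype=float).reshape(shape)  # broadcast along axis
    # exp(-max) multiplies numerator and denominator alike, so it cancels
    e = w * np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)
```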

- Shape:

- Input: any shape

- Output: same as input

Returns: a Tensor of the same dimension and shape as the input with values in the range [0, 1] Parameters: dim (int) – A dimension along which WeightedSoftmax will be computed (so every slice along dim will sum to 1). Examples:

>>> import torch
>>> from mermaid.deep_smoothers import WeightedSoftmax
>>> m = WeightedSoftmax(dim=1)
>>> input = torch.randn(2, 3)
>>> print(input)
>>> print(m(input))

-

forward(input)[source]¶ Defines the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for the forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this, since the former takes care of running the registered hooks while the latter silently ignores them.

-

mermaid.deep_smoothers.stable_softmax(input, dim=None)[source]¶ Applies a numerically stable softmax function.

stable_softmax is defined as:

\(\mathrm{stable\_softmax}(x)_i = \frac{\exp(x_i)}{\sum_j \exp(x_j)}\)

It is applied to all slices along dim, and will rescale them so that the elements lie in the range (0, 1) and sum to 1.
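The documentation does not state how numerical stability is achieved. A common approach, assumed in this sketch, is to subtract the maximum along the dimension before exponentiating: \(\exp(x_i - m)/\sum_j \exp(x_j - m)\) equals the softmax for any \(m\), and choosing \(m = \max_j x_j\) keeps every exponent non-positive, so `exp` cannot overflow. `np_stable_softmax` is a hypothetical NumPy helper standing in for the torch function.

```python
import numpy as np


def np_stable_softmax(x, axis=0):
    """Hypothetical sketch: softmax with max-subtraction to avoid overflow."""
    x = np.asarray(x, dtype=float)
    # every exponent is <= 0 after subtracting the max, so exp stays finite
    z = np.exp(x - x.max(axis=axis, keepdims=True))
    return z / z.sum(axis=axis, keepdims=True)
```

A naive `np.exp(x) / np.exp(x).sum()` returns `nan` for an input such as `[1000.0, 1000.0]` because both exponentials overflow to infinity; the shifted version returns `[0.5, 0.5]`.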

See StableSoftmax for more details.

Parameters: - input (Variable) – input

- dim (int) – A dimension along which stable_softmax will be computed.

-

class

mermaid.deep_smoothers.StableSoftmax(dim=None)[source]¶ Applies the StableSoftmax function to an n-dimensional input Tensor rescaling them so that the elements of the n-dimensional output Tensor lie in the range (0,1) and sum to 1

StableSoftmax is defined as \(f_i(x) = \frac{\exp(x_i)}{\sum_j \exp(x_j)}\)

- Shape:

- Input: any shape

- Output: same as input

Returns: a Tensor of the same dimension and shape as the input with values in the range [0, 1] Parameters: dim (int) – A dimension along which StableSoftmax will be computed (so every slice along dim will sum to 1). Examples:

>>> import torch
>>> from mermaid.deep_smoothers import StableSoftmax
>>> m = StableSoftmax(dim=1)
>>> input = torch.randn(2, 3)
>>> print(input)
>>> print(m(input))

-

forward(input)[source]¶ Defines the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for the forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this, since the former takes care of running the registered hooks while the latter silently ignores them.

-

mermaid.deep_smoothers.weighted_linear_softmax(input, dim=None, weights=None)[source]¶ Applies a weighted linear softmax function.

Weighted_linear_softmax is defined as:

\(\mathrm{weighted\_linear\_softmax}(x)_i = \frac{\mathrm{clamp}(x_i+w_i,0,1)}{\sum_j \mathrm{clamp}(x_j+w_j,0,1)}\)

It is applied to all slices along dim, and will rescale them so that the elements lie in the range (0, 1) and sum to 1.

See WeightedLinearSoftmax for more details.

Parameters: - input (Variable) – input

- dim (int) – A dimension along which weighted_linear_softmax will be computed.

-

class

mermaid.deep_smoothers.WeightedLinearSoftmax(dim=None, weights=None)[source]¶ Applies the WeightedLinearSoftmax function to an n-dimensional input Tensor, rescaling it so that the elements of the n-dimensional output Tensor lie in the range (0,1) and sum to 1

WeightedLinearSoftmax is defined as \(f_i(x) = \frac{\mathrm{clamp}(x_i+w_i,0,1)}{\sum_j \mathrm{clamp}(x_j+w_j,0,1)}\)

It is assumed that 0<=w_i<=1 and that the weights sum up to one. The effect of this weighting is that for a zero input (x=0) the output for f_i(x) will be w_i.
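The zero-input property follows because \(\mathrm{clamp}(0 + w_i, 0, 1) = w_i\) when \(0 \le w_i \le 1\), and the denominator is then \(\sum_j w_j = 1\). The NumPy sketch below evaluates the formula; `np_weighted_linear_softmax` is a hypothetical helper standing in for the torch function, and it does not guard against the degenerate case where every clamped entry is zero (the formula is undefined there).

```python
import numpy as np


def np_weighted_linear_softmax(x, weights, axis=0):
    """Hypothetical sketch of clamp(x_i + w_i, 0, 1) / sum_j clamp(x_j + w_j, 0, 1)."""
    x = np.asarray(x, dtype=float)
    shape = [1] * x.ndim
    shape[axis] = -1
    w = np.asarray(weights, dtype=float).reshape(shape)  # broadcast along axis
    c = np.clip(x + w, 0.0, 1.0)  # clamp to [0, 1]
    # normalize so each slice along axis sums to one (undefined if all c are 0)
    return c / c.sum(axis=axis, keepdims=True)
```

Unlike the exponential softmax, entries pushed below zero are clamped to exactly zero, so the output can switch components off entirely.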

- Shape:

- Input: any shape

- Output: same as input

Returns: a Tensor of the same dimension and shape as the input with values in the range [0, 1] Parameters: dim (int) – A dimension along which WeightedLinearSoftmax will be computed (so every slice along dim will sum to 1). Examples:

>>> import torch
>>> from mermaid.deep_smoothers import WeightedLinearSoftmax
>>> m = WeightedLinearSoftmax(dim=1)
>>> input = torch.randn(2, 3)
>>> print(input)
>>> print(m(input))
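The following NumPy sketch evaluates the WeightedLinearSoftmax formula directly to illustrate the zero-input property: with weights that sum to one, a zero input returns exactly the weights. weighted_linear_softmax_sketch is a hypothetical helper, not mermaid's implementation, and it does not guard against slices where every clamped entry is zero (which would divide by zero).

```python
import numpy as np

def weighted_linear_softmax_sketch(x, weights, dim):
    """clamp(x_i + w_i, 0, 1) / sum_j clamp(x_j + w_j, 0, 1) along `dim` (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    w = np.asarray(weights, dtype=float)
    # Reshape the weights so they broadcast along `dim` only.
    shape = [1] * x.ndim
    shape[dim] = w.size
    clamped = np.clip(x + w.reshape(shape), 0.0, 1.0)
    return clamped / np.sum(clamped, axis=dim, keepdims=True)

weights = [0.2, 0.3, 0.5]
# A zero input reproduces the weights (they already sum to one).
out_zero = weighted_linear_softmax_sketch(np.zeros((2, 3)), weights, dim=1)
# A generic input still yields slices that sum to one, with values in [0, 1].
out = weighted_linear_softmax_sketch(np.array([[0.1, -0.1, 0.4]]), weights, dim=1)
```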

-

forward(input)[source]¶ Defines the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.

-

mermaid.deep_smoothers.weighted_linear_softnorm(input, dim=None, weights=None)[source]¶ Applies a weighted linear softnorm function.

Weighted_linear_softnorm is defined as:

\(\mathrm{weighted\_linear\_softnorm}(x)_i = \frac{\mathrm{clamp}(x_i+w_i,0,1)}{\sqrt{\sum_j \mathrm{clamp}(x_j+w_j,0,1)^2}}\)

It is applied to all slices along dim, and will rescale them so that the elements lie in the range [0, 1] and their squares sum to 1.

See WeightedLinearSoftnorm for more details.

Parameters:

- input (Variable) – input

- dim (int) – A dimension along which weighted_linear_softnorm will be computed.

-

class

mermaid.deep_smoothers.WeightedLinearSoftnorm(dim=None, weights=None)[source]¶ Applies the WeightedLinearSoftnorm function to an n-dimensional input Tensor, rescaling it so that the elements of the n-dimensional output Tensor lie in the range [0, 1] and their squares sum to 1

WeightedLinearSoftnorm is defined as \(f_i(x) = \frac{\mathrm{clamp}(x_i+w_i,0,1)}{\sqrt{\sum_j \mathrm{clamp}(x_j+w_j,0,1)^2}}\)

It is assumed that \(0 \le w_i \le 1\) and that the weights sum to one. The effect of this weighting is that for a zero input (x = 0) the output f_i(x) is \(w_i / \sqrt{\sum_j w_j^2}\), i.e., proportional to w_i.

- Shape:

- Input: any shape

- Output: same as input

Returns: a Tensor of the same dimension and shape as the input, with values in the range [0, 1]

Parameters: dim (int) – A dimension along which WeightedLinearSoftnorm will be computed (so that the squares of every slice along dim sum to 1).

Examples:

>>> import torch
>>> from mermaid.deep_smoothers import WeightedLinearSoftnorm
>>> m = WeightedLinearSoftnorm(dim=1)
>>> input = torch.randn(2, 3)
>>> print(input)
>>> print(m(input))
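Evaluating the WeightedLinearSoftnorm formula at x = 0 shows that a zero input maps to \(w_i / \sqrt{\sum_j w_j^2}\): values proportional to the weights whose squares sum to one, rather than the weights themselves. The NumPy sketch below checks this; weighted_linear_softnorm_sketch is a hypothetical helper, not mermaid's code.

```python
import numpy as np

def weighted_linear_softnorm_sketch(x, weights, dim):
    """clamp(x_i + w_i, 0, 1) / sqrt(sum_j clamp(x_j + w_j, 0, 1)^2) along `dim` (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    w = np.asarray(weights, dtype=float)
    # Reshape the weights so they broadcast along `dim` only.
    shape = [1] * x.ndim
    shape[dim] = w.size
    clamped = np.clip(x + w.reshape(shape), 0.0, 1.0)
    return clamped / np.sqrt(np.sum(clamped ** 2, axis=dim, keepdims=True))

w = np.array([0.2, 0.3, 0.5])
out_zero = weighted_linear_softnorm_sketch(np.zeros((1, 3)), w, dim=1)
```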

-

forward(input)[source]¶ Defines the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.

-

mermaid.deep_smoothers.linear_softnorm(input, dim=None)[source]¶ Normalizes the input so that the resulting values are non-negative and their squares sum to one.

linear_softnorm is defined as:

\(\mathrm{linear\_softnorm}(x)_i = \frac{\mathrm{clamp}(x_i,0,1)}{\sqrt{\sum_j \mathrm{clamp}(x_j,0,1)^2}}\)

It is applied to all slices along dim, and will rescale them so that the elements lie in the range [0, 1] and their squares sum to 1.

See LinearSoftnorm for more details.

Parameters:

- input (Variable) – input

- dim (int) – A dimension along which linear_softnorm will be computed.

-

class

mermaid.deep_smoothers.LinearSoftnorm(dim=None)[source]¶ Applies the LinearSoftnorm function to an n-dimensional input Tensor, rescaling it so that the elements of the n-dimensional output Tensor lie in the range [0, 1] and their squares sum to 1

LinearSoftnorm is defined as \(f_i(x) = \frac{\mathrm{clamp}(x_i,0,1)}{\sqrt{\sum_j \mathrm{clamp}(x_j,0,1)^2}}\)

- Shape:

- Input: any shape

- Output: same as input

Returns: a Tensor of the same dimension and shape as the input, with values in the range [0, 1]

Parameters: dim (int) – A dimension along which LinearSoftnorm will be computed (so that the squares of every slice along dim sum to 1).

Examples:

>>> import torch
>>> from mermaid.deep_smoothers import LinearSoftnorm
>>> m = LinearSoftnorm(dim=1)
>>> input = torch.randn(2, 3)
>>> print(input)
>>> print(m(input))
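The clamp in the LinearSoftnorm formula means that negative inputs contribute nothing and inputs above 1 saturate. The NumPy sketch below evaluates the formula on one slice of each kind; linear_softnorm_sketch is a hypothetical helper, not mermaid's implementation.

```python
import numpy as np

def linear_softnorm_sketch(x, dim):
    """clamp(x_i, 0, 1) / sqrt(sum_j clamp(x_j, 0, 1)^2) along `dim` (hypothetical helper)."""
    clamped = np.clip(np.asarray(x, dtype=float), 0.0, 1.0)
    return clamped / np.sqrt(np.sum(clamped ** 2, axis=dim, keepdims=True))

x = np.array([[0.3, 0.4, -2.0],   # the negative entry is clamped to 0
              [5.0, 0.0, 0.0]])   # the entry above 1 saturates at 1
y = linear_softnorm_sketch(x, dim=1)
```

A slice with no positive entry would give 0/0 in this sketch; how mermaid handles that case is not stated here.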

-

forward(input)[source]¶ Defines the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.

-

mermaid.deep_smoothers.linear_softmax(input, dim=None)[source]¶ Applies a linear softmax function.

linear_softmax is defined as:

\(\mathrm{linear\_softmax}(x)_i = \frac{\mathrm{clamp}(x_i,0,1)}{\sum_j \mathrm{clamp}(x_j,0,1)}\)

It is applied to all slices along dim, and will rescale them so that the elements lie in the range [0, 1] and sum to 1.

See LinearSoftmax for more details.

Parameters:

- input (Variable) – input

- dim (int) – A dimension along which linear_softmax will be computed.

-

class

mermaid.deep_smoothers.LinearSoftmax(dim=None)[source]¶ Applies the LinearSoftmax function to an n-dimensional input Tensor, rescaling it so that the elements of the n-dimensional output Tensor lie in the range [0, 1] and sum to 1

LinearSoftmax is defined as \(f_i(x) = \frac{\mathrm{clamp}(x_i,0,1)}{\sum_j \mathrm{clamp}(x_j,0,1)}\)

- Shape:

- Input: any shape

- Output: same as input

Returns: a Tensor of the same dimension and shape as the input, with values in the range [0, 1]

Parameters: dim (int) – A dimension along which LinearSoftmax will be computed (so every slice along dim will sum to 1).

Examples:

>>> import torch
>>> from mermaid.deep_smoothers import LinearSoftmax
>>> m = LinearSoftmax(dim=1)
>>> input = torch.randn(2, 3)
>>> print(input)
>>> print(m(input))
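Unlike the exponential softmax, the clamp-based LinearSoftmax formula can produce exact zeros, since any non-positive input is clamped to 0. The NumPy sketch below contrasts the two; linear_softmax_sketch and exp_softmax are hypothetical helpers written for this comparison, not mermaid functions.

```python
import numpy as np

def linear_softmax_sketch(x, dim):
    """clamp(x_i, 0, 1) / sum_j clamp(x_j, 0, 1) along `dim` (hypothetical helper)."""
    clamped = np.clip(np.asarray(x, dtype=float), 0.0, 1.0)
    return clamped / np.sum(clamped, axis=dim, keepdims=True)

def exp_softmax(x, dim):
    """Standard exponential softmax, shifted by the max for stability."""
    e = np.exp(x - np.max(x, axis=dim, keepdims=True))
    return e / np.sum(e, axis=dim, keepdims=True)

x = np.array([[0.3, 0.4, -2.0],
              [5.0, 0.5, 0.5]])
lin = linear_softmax_sketch(x, dim=1)
ref = exp_softmax(x, dim=1)
```

In the first row the negative entry gets weight exactly 0 from the linear version, while the exponential softmax still assigns it a small positive value.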

-

forward(input)[source]¶ Defines the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.

-

mermaid.deep_smoothers.weighted_sqrt_softmax(input, dim=None, weights=None)[source]¶ Applies a weighted square-root softmax function.

Weighted_sqrt_softmax is defined as:

\(\mathrm{weighted\_sqrt\_softmax}(x)_i = \frac{\sqrt{w_i}\,\exp(x_i)}{\sqrt{\sum_j w_j \exp(x_j)^2}}\)

It is applied to all slices along dim, and will rescale them so that the elements lie in the range [0, 1] and their squares sum to 1.

See WeightedSqrtSoftmax for more details.

Parameters:

- input (Variable) – input

- dim (int) – A dimension along which weighted_sqrt_softmax will be computed.

-

class

mermaid.deep_smoothers.WeightedSqrtSoftmax(dim=None, weights=None)[source]¶ Applies the WeightedSqrtSoftmax function to an n-dimensional input Tensor, rescaling it so that the elements of the n-dimensional output Tensor lie in the range [0, 1] and their squares sum to 1

WeightedSqrtSoftmax is defined as \(f_i(x) = \frac{\sqrt{w_i}\exp(x_i)}{\sqrt{\sum_j w_j\exp(x_j)^2}}\)

It is assumed that \(w_i \ge 0\) and that the weights sum to one. The effect of this weighting is that for a zero input (x = 0) the output f_i(x) is \(\sqrt{w_i}\). That is, the default output need not be 1/n, and squaring the outputs recovers the original weights for a zero input. This is useful, for example, to implement local kernel weightings while avoiding square roots of weights that may be close to zero (and hence potential numerical issues with the gradient). This assumes, of course, that the weights are fixed and not optimized over; otherwise there would still be numerical issues. TODO: check that this is indeed working as planned.

- Shape:

- Input: any shape

- Output: same as input

Returns: a Tensor of the same dimension and shape as the input, with positive values whose squares sum to one.

Parameters: dim (int) – A dimension along which WeightedSqrtSoftmax will be computed (so that the squares of every slice along dim sum to 1).

Examples:

>>> import torch
>>> from mermaid.deep_smoothers import WeightedSqrtSoftmax
>>> m = WeightedSqrtSoftmax(dim=1)
>>> input = torch.randn(2, 3)
>>> print(input)
>>> print(m(input))
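The claim that squaring the WeightedSqrtSoftmax output at x = 0 recovers the weights can be checked numerically. The NumPy sketch below evaluates the formula; weighted_sqrt_softmax_sketch is a hypothetical helper, and the max-subtraction step is an addition of this sketch (it does not change the result), not something the documentation states about mermaid.

```python
import numpy as np

def weighted_sqrt_softmax_sketch(x, weights, dim):
    """sqrt(w_i) exp(x_i) / sqrt(sum_j w_j exp(x_j)^2) along `dim` (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    w = np.asarray(weights, dtype=float)
    # Reshape the weights so they broadcast along `dim` only.
    shape = [1] * x.ndim
    shape[dim] = w.size
    w = w.reshape(shape)
    # Shifting by the per-slice maximum scales numerator and denominator by the
    # same factor exp(-max), so the result is unchanged but exp() cannot overflow.
    e = np.exp(x - np.max(x, axis=dim, keepdims=True))
    return np.sqrt(w) * e / np.sqrt(np.sum(w * e ** 2, axis=dim, keepdims=True))

weights = np.array([0.1, 0.6, 0.3])
out_zero = weighted_sqrt_softmax_sketch(np.zeros((1, 3)), weights, dim=1)
out = weighted_sqrt_softmax_sketch(np.array([[0.5, -1.0, 2.0]]), weights, dim=1)
```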

-

forward(input)[source]¶ Defines the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.

-

mermaid.deep_smoothers.compute_weighted_multi_smooth_v(momentum, weights, gaussian_stds, gaussian_fourier_filter_generator)[source]¶
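compute_weighted_multi_smooth_v carries no description here. As an illustration only, the sketch below implements one common form of weighted multi-Gaussian smoothing, \(v = \sum_i w_i\,(G_{\sigma_i} \star m)\), on a periodic 1D grid, filtering in the Fourier domain. Whether mermaid uses exactly this form (rather than, e.g., a square-root weighting) is an assumption, and the Fourier-domain filtering merely echoes the gaussian_fourier_filter_generator parameter name; gaussian_fourier_filter and weighted_multi_smooth_sketch are hypothetical helpers.

```python
import numpy as np

def gaussian_fourier_filter(n, spacing, sigma):
    """Fourier transform of a unit-mass Gaussian with std `sigma` (periodic 1D grid)."""
    freqs = np.fft.fftfreq(n, d=spacing)  # frequencies in cycles per unit length
    return np.exp(-2.0 * np.pi ** 2 * sigma ** 2 * freqs ** 2)

def weighted_multi_smooth_sketch(momentum, weights, stds, spacing):
    """v = sum_i w_i * (G_{sigma_i} * m); each w_i may be a scalar or a per-point array."""
    momentum = np.asarray(momentum, dtype=float)
    m_hat = np.fft.fft(momentum)
    v = np.zeros_like(momentum)
    for w, sigma in zip(weights, stds):
        # Convolution with the Gaussian is a pointwise product in the Fourier domain.
        smoothed = np.fft.ifft(m_hat * gaussian_fourier_filter(momentum.size, spacing, sigma)).real
        v += np.asarray(w) * smoothed
    return v

spacing = 1.0 / 64
rng = np.random.default_rng(0)
m = rng.standard_normal(64)
v = weighted_multi_smooth_sketch(m, weights=[0.3, 0.7], stds=[0.05, 0.1], spacing=spacing)
```

With scalar weights that sum to one, a constant momentum passes through unchanged (each filter equals 1 at zero frequency), while higher frequencies are attenuated.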

-

class

mermaid.deep_smoothers.DeepSmoothingModel(nr_of_gaussians, gaussian_stds, dim, spacing, im_sz, nr_of_image_channels=1, omt_power=1.0, params=None)[source]¶ Base class for a mini neural network which takes as input a set of smoothed velocity fields as well as input images, and from these predicts weights for a multi-Gaussian smoothing. It enforces the same weighting for all dimensions of the vector field to be smoothed.

-

gaussianWeight_min= None¶ minimal allowed weight during optimization

-

local_pre_weight_max= None¶ max allowed initial preweight

-

standardize_input_images= None¶ if true then we subtract standardize_subtract_from_input_images from all network input images

-

standardize_subtract_from_input_images= None¶ Subtracts this value from all images input into a network

-

standardize_input_momentum= None¶ if true then we subtract standardize_subtract_from_input_momentum from the network input momentum

-

standardize_subtract_from_input_momentum= None¶ Subtracts this value from the momentum input to a network

-

standardize_divide_input_momentum= None¶ Value to divide the input momentum by AFTER subtraction

-

standardize_display_standardization= None¶ Outputs statistical values before and after standardization

-

computed_weights= None¶ stores the computed weights if desired

-

computed_pre_weights= None¶ stores the computed pre weights if desired

-

current_penalty= None¶ stores the current penalty (for example OMT) after running through the model

-

deep_network_local_weight_smoothing= None¶ Smoothing of the local weight fields to ensure sufficient regularity of the resulting velocity field

-

deep_network_weight_smoother= None¶ The smoother that does the smoothing of the weights; needs to be initialized in the forward model

-

standardize_divide_input_images= None¶ Value to divide the input images by AFTER subtraction

-

network_penalty= None¶ penalty factor for L2 norm of network weights

-

get_number_of_input_channels(nr_of_image_channels, dim)[source]¶ Legacy; kept to support velocity fields as input channels. Currently only returns the number of image channels; if something else were used as the network input, it would need to return the total number of inputs.

-

get_number_of_image_channels_from_state_dict(state_dict, dim)[source]¶ legacy; to support velocity fields as input channels

-

spatially_average(x)[source]¶ Does spatial averaging of a 2D image with potentially multiple batches, in format B x X x Y.

-

get_current_penalty()[source]¶ Returns the current penalty for the weights (here, the OMT penalty).
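The standardize_* attributes of DeepSmoothingModel describe a two-step transform of the network inputs: subtract a constant, then divide by another (the division happens AFTER the subtraction), optionally printing statistics before and after. The NumPy sketch below mirrors that description for an image array. standardize_sketch, its arguments, and the example values 0.5 and 0.5 are hypothetical and do not reflect mermaid's actual defaults or code.

```python
import numpy as np

def standardize_sketch(x, enabled, subtract_value, divide_value, display=False):
    """Optionally subtract a constant and then divide by another (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    if not enabled:
        return x
    y = (x - subtract_value) / divide_value  # subtraction first, then division
    if display:
        print(f"before: mean={x.mean():.3f}, std={x.std():.3f}")
        print(f"after:  mean={y.mean():.3f}, std={y.std():.3f}")
    return y

images = np.array([0.0, 0.25, 0.5, 1.0])
standardized = standardize_sketch(images, enabled=True, subtract_value=0.5,
                                  divide_value=0.5, display=True)
```

The order matters: dividing first and subtracting afterwards would give a different result for the same two values.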

-

-

class

mermaid.deep_smoothers.GeneralNetworkWeightedSmoothingModel(network_type, nr_of_gaussians, gaussian_stds, dim, spacing, im_sz, nr_of_image_channels=1, omt_power=1.0, params=None)[source]¶ Mini neural network which takes as input a set of smoothed velocity fields as well as input images, and from these predicts weights for a multi-Gaussian smoothing. It enforces the same weighting for all dimensions of the vector field to be smoothed.

-

randomly_initialize_network= None¶ Can be set to False to load a previously saved network

-

-

class

mermaid.deep_smoothers.ClusteredWeightedSmoothingModel(nr_of_gaussians, gaussian_stds, dim, spacing, im_sz, nr_of_image_channels=1, omt_power=1.0, params=None)[source]¶ Assumes a given clustering of an input image and estimates weights for this clustering. It enforces the same weighting for all dimensions of the vector field to be smoothed. This is NOT a deep network model, but a way to debug the optimization using synthetic data.

-

class

mermaid.deep_smoothers.DeepSmootherFactory(nr_of_gaussians, gaussian_stds, dim, spacing, im_sz, nr_of_image_channels=1)[source]¶ Factory to quickly create different types of deep smoothers.

-

nr_of_gaussians= None¶ number of Gaussians as input

-

gaussian_stds= None¶ stds of the Gaussians

-

dim= None¶ dimension of input image

-

im_sz= None¶ image size

-

nr_of_image_channels= None¶ number of channels the image has (currently only one is supported)

-

spacing= None¶ Spacing of the image

-